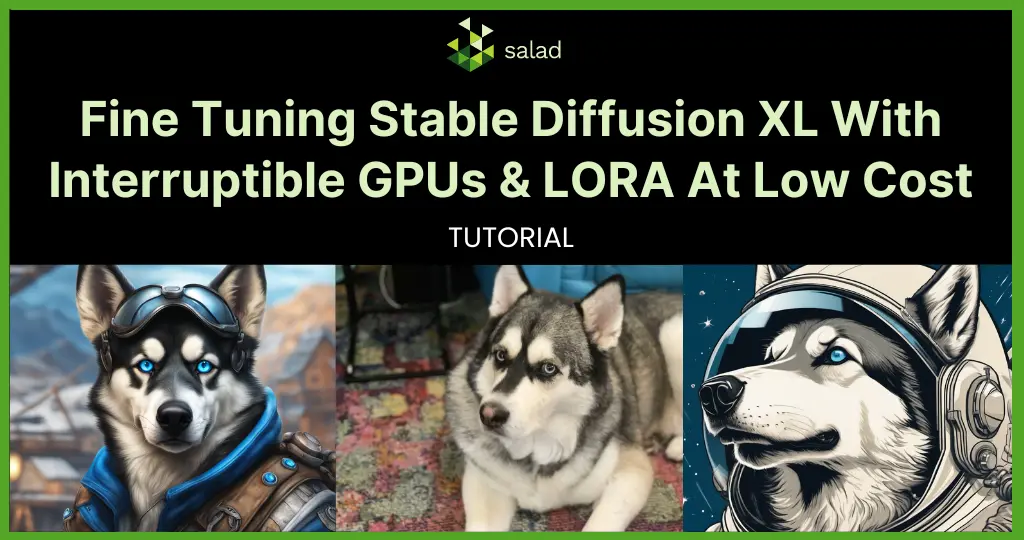

Fine tuning Stable Diffusion XL (SDXL) with interruptible GPUs and LoRA for low cost

It’s no secret that training image generation models like Stable Diffusion XL (SDXL) doesn’t come cheap. The original Stable Diffusion model cost $600,000 USD to train, using hundreds of enterprise-grade A100 GPUs for more than 100,000 combined hours. Fast forward to today, and techniques like Parameter-Efficient Fine Tuning (PEFT) and Low-Rank Adaptation (LoRA) allow us to fine tune state-of-the-art image generation models like Stable Diffusion XL in minutes on a single consumer GPU. Using spot instances or community clouds like Salad reduces the cost even further. In this tutorial, we fine tune SDXL on a custom image of Timber, my playful Siberian Husky.

Benefits of spot instances to fine tune SDXL

Spot instances allow cloud providers to sell unused capacity at lower prices, usually in an auction format where users bid on that capacity. Salad’s community cloud comprises tens of thousands of idle residential gaming PCs around the world. On AWS, an Nvidia A10G GPU on the g5.xlarge instance type costs $1.006/hr at on-demand pricing, but as little as $0.5389/hr at “spot” pricing. On Salad, an RTX 4090 (24GB) GPU with 8 vCPUs and 16GB of RAM costs only $0.348/hr. In our tests, we were able to train a LoRA for Stable Diffusion XL in 13 minutes on an RTX 4090, at a cost of just $0.0754. These low costs open the door for increasingly customized and sophisticated AI image generation applications.

Challenges of fine tuning Stable Diffusion XL with spot instances

There is one major catch, though: both spot instances and community cloud instances can be interrupted without warning, potentially wasting expensive training time. Additionally, both are subject to supply constraints. If AWS is able to sell all of its GPU instances at on-demand pricing, there will be no spot instances available. Since Salad’s network is residential and owned by individuals around the world, instances come online and go offline throughout the day as people use their PCs.
But with a few extra steps, we can take advantage of the huge cost savings of using interruptible GPUs for fine tuning.

Solutions to mitigate the impact of interrupted nodes

The #1 thing you can do to mitigate the impact of interrupted nodes is to periodically save checkpoints of the training progress to cloud storage, like Cloudflare R2 or AWS S3. This ensures that your training job can pick up where it left off if it gets terminated prematurely. This periodic checkpointing functionality is often offered out-of-the-box by frameworks such as 🤗 Accelerate, and simply needs to be enabled via launch arguments. For example, using the Dreambooth LoRA SDXL script with accelerate, as we did, you might pass arguments that train for 500 steps and save a checkpoint every 50 steps. This ensures that we lose at most 49 steps of progress if a node gets interrupted. On an RTX 4090, that amounts to about 73 seconds of lost work. You may want to checkpoint more or less frequently than this, depending on how often your nodes get interrupted, storage costs, and other factors.

Once you’ve enabled checkpointing with these launch arguments, you need another process that monitors for the creation of these checkpoints and automatically syncs them to your preferred cloud storage. We’ve provided an example Python script that does this by launching accelerate in one thread, and using another thread to monitor the filesystem with watchdog and push files to S3-compatible storage using boto3. In our case, we used R2 instead of S3, because R2 does not charge egress fees.

Other considerations for SDXL fine tuning

The biggest callout here is to automate cleanup of your old checkpoints from storage. Our example script saves a checkpoint at every 10% of progress, each of which is 66MB compressed. Even though the final LoRA we end up with is only 23MB, the total storage used during the process is 683MB.
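
The figures quoted in this tutorial can be checked with a few lines of arithmetic. The sketch below is a minimal illustration and is not part of our example script: `TrainingRun` and `checkpoints_to_delete` are hypothetical names, and the inputs are the numbers reported above (500 steps, a checkpoint every 50 steps, 13 minutes on a $0.348/hr RTX 4090, 66MB per checkpoint, and a 23MB final LoRA).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    """Hypothetical container for the numbers reported in this tutorial."""
    total_steps: int = 500
    checkpoint_every: int = 50
    run_minutes: float = 13.0
    gpu_price_per_hour: float = 0.348
    checkpoint_mb: float = 66.0
    final_lora_mb: float = 23.0

    def checkpoint_count(self) -> int:
        # One checkpoint per interval, including the one at the final step.
        return self.total_steps // self.checkpoint_every

    def max_steps_lost(self) -> int:
        # Worst case: the node is interrupted just before the next checkpoint.
        return self.checkpoint_every - 1

    def run_cost(self) -> float:
        # Price per hour multiplied by the run time in hours.
        return self.gpu_price_per_hour * self.run_minutes / 60

    def total_storage_mb(self) -> float:
        # Every checkpoint is kept, plus the final LoRA weights.
        return self.checkpoint_count() * self.checkpoint_mb + self.final_lora_mb


def checkpoints_to_delete(saved_steps: list[int], keep_last: int = 1) -> list[int]:
    """Return the checkpoint steps that can be removed, keeping the newest ones."""
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    ordered = sorted(saved_steps)
    return ordered[: max(len(ordered) - keep_last, 0)]


if __name__ == "__main__":
    run = TrainingRun()
    print(f"cost: ${run.run_cost():.4f}")
    print(f"storage: {run.total_storage_mb():.0f}MB")
    print(f"worst-case lost steps: {run.max_steps_lost()}")
    print(f"deletable: {checkpoints_to_delete(list(range(50, 501, 50)))}")
```

With these inputs the run costs about $0.0754 and stores ten checkpoints plus the final LoRA, for 683MB in total. Keeping only the newest checkpoint would let a cleanup job delete the other nine.
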
It’s easy to see how storage costs could get out of hand if this were neglected for long enough. Our example script fires a webhook at each checkpoint, and another at completion. We set up a Cloudflare Worker to receive these webhooks and clean up resources as needed.

Additionally, while the open source tools are powerful and relatively easy to use, they are still quite complex and the documentation is often very minimal. I relied on YouTube videos and reading the code to figure out the various options for the SDXL LoRA training script. However, these open source projects are improving at an increasingly quick pace as they see wider adoption, so the documentation will likely improve. At the time of writing, the 🤗 Diffusers library had merged 47 pull requests from 26 authors in the last 7 days alone.

Conclusions

Modern training techniques and interruptible hardware combine to offer extremely cost-effective fine tuning of Stable Diffusion XL. Open source training frameworks make the process approachable, although documentation could be improved. You can train a model of yourself, your pets, or any other subject in just a few minutes, at a cost of pennies. Training costs have plummeted over the last year, thanks in large part to the rapidly expanding open source AI community. The range of hardware capable of running these training tasks has greatly expanded as well. Many recent consumer GPUs are capable of training an SDXL LoRA model in well under an hour, with the fastest taking just over 10 minutes.

Shawn Rushefsky is a passionate technologist and systems thinker with deep experience across a number of stacks. As Generative AI Solutions Architect at Salad, Shawn designs resilient and scalable generative AI systems to run on our distributed GPU cloud. He is also the founder of Dreamup.ai, an AI image generation tool that donates 30% of its proceeds to artists.

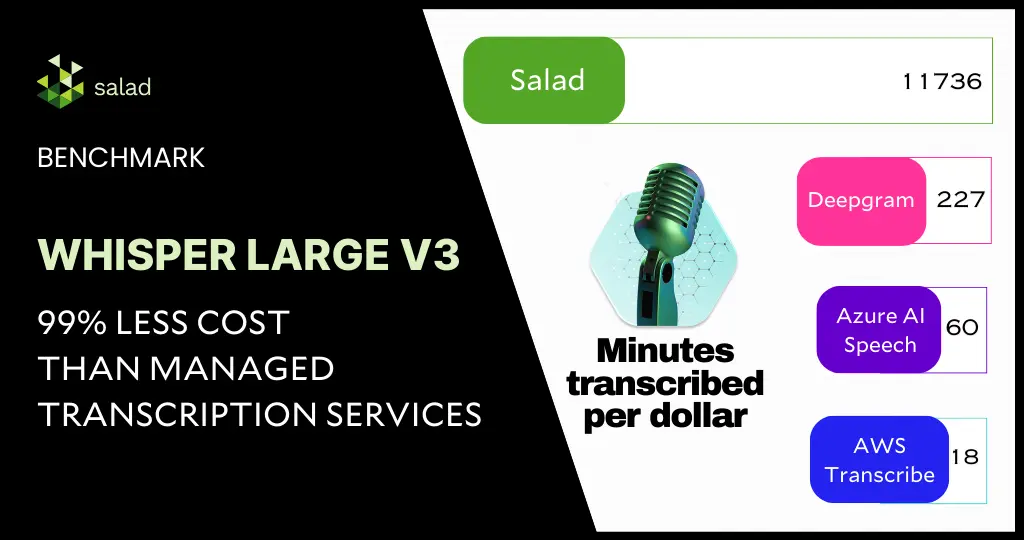

Whisper Large V3 Speech Recognition Benchmark: 1 Million hours of audio transcription for just $5110

Save over 99.8% on audio transcription using Whisper Large V3 and consumer GPUs

A 99.8% cost saving for automatic speech recognition sounds unreal. But with the right choice of GPUs and models, it is very much possible, and it highlights the needless overspending on managed transcription services today. In this deep dive, we benchmark the latest Whisper Large V3 model from OpenAI for inference against the extensive English CommonVoice and Spoken Wikipedia Corpus English (Part1, Part2) datasets, and explain how we achieved a 99.8% cost reduction compared to other public cloud providers. Building upon the inference benchmark of Whisper Large V2, and with our continued effort to enhance the system architecture and implementation for batch jobs, we have achieved substantial reductions in both audio transcription cost and time while maintaining the same level of accuracy as the managed transcription services.

Behind The Scenes: Advanced System Architecture for Batch Jobs

Our batch processing framework is built from several components. We aimed to keep the framework components fully managed and serverless to closely simulate the experience of using managed transcription services. A decoupled architecture provides the flexibility to choose the best and most cost-effective solution for each component from the industry. Within each node in the GPU resource pool in SaladCloud, two processes are used, following best practices: one dedicated to GPU inference and another focused on I/O and CPU-bound tasks, such as downloading/uploading, preprocessing, and post-processing.

1) Inference Process

The inference process operates on a single thread. It begins by loading the Whisper Large V3 model and warming up the GPU, and then listens on a TCP port by running a Python/FastAPI app in a Uvicorn server. Upon receiving a request, it runs the transcription inference and returns the generated assets.
The chunking algorithm is configured for batch processing, where long audio files are segmented into 30-second clips, and these clips are simultaneously fed into the model. The batch inference significantly enhances performance by effectively leveraging the GPU cache and parallel processing capabilities.

2) Benchmark Worker Process

The benchmark worker process primarily handles various I/O tasks, as well as pre- and post-processing. Multiple threads perform these tasks concurrently: one thread pulls jobs and downloads audio clips; another thread calls the inference; the remaining threads manage tasks such as uploading generated assets, reporting job results, and cleaning the environment. Several queues are created to facilitate information exchange among these threads.

Running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks, and fetching the next audio clips while the current one is still being transcribed, allows us to eliminate any waiting period. After one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time for batch jobs but also leads to even more significant cost savings.

Deployment on SaladCloud

We created a container group with 100 replicas (2 vCPU and 12 GB RAM with 20 different GPU types) in SaladCloud, and ran it for approximately 10 hours. In this period, we successfully transcribed over 2 million audio files, totalling nearly 8000 hours in length. The test incurred around $100 in SaladCloud costs and less than $10 on both AWS and Cloudflare.

Results from the Whisper Large v3 benchmark

Among the 20 GPU types, based on the current datasets, the RTX 3060 stands out as the most cost-effective GPU type for long audio files exceeding 30 seconds. Priced at $0.10 per hour on SaladCloud, it can transcribe nearly 200 hours of audio per dollar.
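
The prefetching pattern described for the benchmark worker, where the next clip is downloaded while the current one is transcribed, can be sketched with threads and bounded queues. This is a minimal illustration under stated assumptions rather than our benchmark code: every function name is hypothetical, the download is simulated with a short sleep, and the call to the inference process is replaced by a stand-in string.

```python
import queue
import threading
import time

_DONE = object()  # sentinel that tells the next stage to stop


def download_stage(jobs, ready):
    """Pull jobs and 'download' clips ahead of the inference stage."""
    for job in jobs:
        time.sleep(0.01)   # stand-in for the real download
        ready.put(job)     # blocks once the prefetch buffer is full
    ready.put(_DONE)


def inference_stage(ready, finished):
    """Take the next prefetched clip as soon as the previous one is done."""
    while (job := ready.get()) is not _DONE:
        transcript = f"transcript of {job}"  # stand-in for the inference call
        finished.put((job, transcript))
    finished.put(_DONE)


def upload_stage(finished, results):
    """Collect finished work; a real worker would upload and report here."""
    while (item := finished.get()) is not _DONE:
        results.append(item)


def run_pipeline(jobs, prefetch=2):
    ready = queue.Queue(maxsize=prefetch)  # bounded: limits clips held locally
    finished = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=download_stage, args=(jobs, ready)),
        threading.Thread(target=inference_stage, args=(ready, finished)),
        threading.Thread(target=upload_stage, args=(finished, results)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    for job, transcript in run_pipeline(["a.wav", "b.wav", "c.wav"]):
        print(job, "->", transcript)
```

Because each stage runs in a single thread and the queues are first-in, first-out, results come back in submission order. The bounded `ready` queue keeps the downloader only a couple of clips ahead of the GPU.
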
For short audio files lasting less than 30 seconds, several GPU types exhibit similar performance, transcribing approximately 47 hours of audio per dollar. On the other hand, the RTX 4080 is the best-performing GPU type for long audio files exceeding 30 seconds, with an average real-time factor of 40. This means that the system can transcribe 40 seconds of audio per second. For short audio files lasting less than 30 seconds, the best average real-time factor is approximately 8, reached by a couple of GPU types, meaning 8 seconds of audio are transcribed in 1 second.

Analysis of the benchmark results

Unlike results obtained in local tests with several machines in a LAN, all these numbers were achieved in a global, distributed cloud environment that provides transcription at a large scale, and they cover the entire process from receiving requests to transcribing and sending the responses.

There are various ways to optimize the results, whether the aim is reduced cost, improved performance, or both, and different approaches may yield distinct outcomes. The Whisper models come in five sizes: tiny, base, small, medium, and large (v1/v2/v3). The large versions are multilingual and offer better accuracy, but they demand more powerful GPUs and run relatively slowly. The smaller versions, which are also offered as English-only variants, are slightly less accurate, but they require less powerful GPUs and run very fast.

Choosing more cost-effective GPU types in the resource pool will result in additional cost savings. If performance is the priority, selecting higher-performing GPU types is advisable, and these still remain significantly less expensive than managed transcription services. Additionally, audio length plays a crucial role in both performance and cost, and it’s essential to optimize the resource configuration based on your specific use cases and business goals.
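
The relationship between real-time factor, GPU price, and audio hours per dollar is simple arithmetic, and it can be sketched as follows. The function names are hypothetical; the inputs are figures quoted in this article ($0.10 per hour for the RTX 3060, and 11,736 minutes of audio per dollar for the most cost-effective GPU type).

```python
def audio_hours_per_dollar(real_time_factor: float, gpu_price_per_hour: float) -> float:
    """One GPU-hour transcribes `real_time_factor` hours of audio."""
    return real_time_factor / gpu_price_per_hour


def implied_real_time_factor(hours_per_dollar: float, gpu_price_per_hour: float) -> float:
    """Invert the relation above to recover the average real-time factor."""
    return hours_per_dollar * gpu_price_per_hour


def cost_to_transcribe(audio_hours: float, hours_per_dollar: float) -> float:
    """Dollars needed to transcribe a given amount of audio."""
    return audio_hours / hours_per_dollar


if __name__ == "__main__":
    best_hours_per_dollar = 11_736 / 60  # minutes per dollar -> hours per dollar
    print(f"{best_hours_per_dollar:.1f} audio hours per dollar")
    print(f"implied real-time factor: {implied_real_time_factor(best_hours_per_dollar, 0.10):.1f}")
    print(f"1 million hours: ${cost_to_transcribe(1_000_000, best_hours_per_dollar):,.0f}")
```

At 11,736 minutes per dollar, one million hours of audio comes to a little over $5,100, in line with the headline figure, and the implied average real-time factor on a $0.10 per hour GPU is just under 20.
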
Discover our open-source code for a deeper dive: Implementation of Inference and Benchmark Worker, Docker Images, and Data Exploration Tool.

Performance Comparison across Different Clouds

The results indicate that AI transcription companies are massively overpaying for cloud today. With the open-source automatic speech recognition model Whisper Large V3 and the advanced batch-processing architecture leveraging hundreds of consumer GPUs on SaladCloud, we can deliver transcription services at a massive scale and at an exceptionally low cost, while maintaining the same level of accuracy as managed transcription services. With the most cost-effective GPU type for Whisper Large V3 inference on SaladCloud, $1 can transcribe 11,736 minutes of audio (nearly 200 hours), showcasing a 500-fold

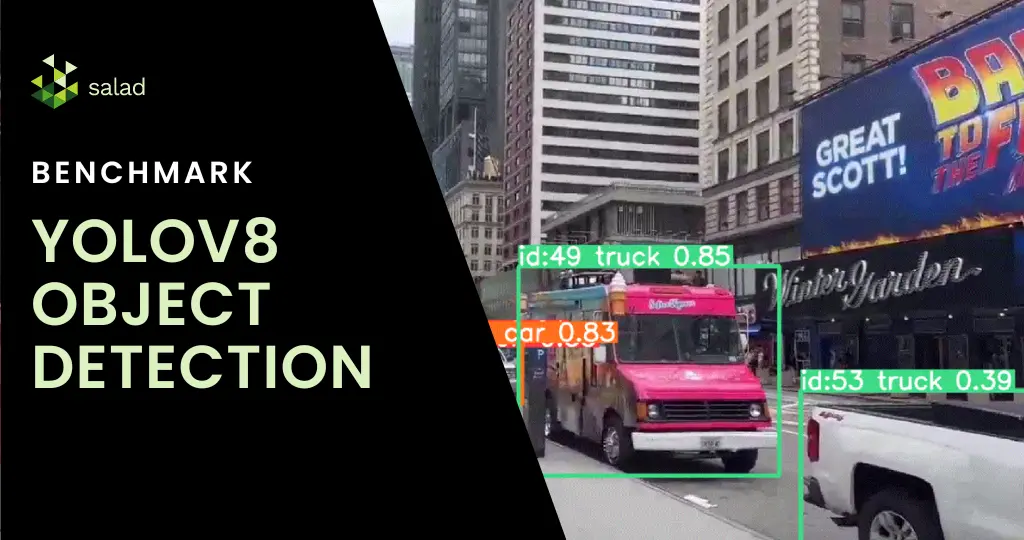

YOLOv8 Benchmark: Object Detection on Salad’s GPUs (73% Cheaper Than Azure)

What is YOLOv8?

In the fast-evolving world of AI, object detection has made remarkable strides, epitomized by YOLOv8. YOLO (You Only Look Once) is an object detection and image segmentation model launched in 2015, and YOLOv8 is the latest version, developed by Ultralytics. The algorithm is not just about recognizing objects; it’s about doing so in real time with high precision and speed. From monitoring fast-paced sports events to overseeing production lines, YOLOv8 is transforming how we see and interact with moving images. With features like spatial attention, feature fusion, and context aggregation modules, YOLOv8 is being used extensively in agriculture, healthcare, and manufacturing, among others. In this YOLOv8 benchmark, we compare the cost of running YOLO on Salad and Azure.

Running object detection on SaladCloud’s GPUs: A fantastic combination

YOLOv8 can be run on GPUs, as long as they have enough memory and support CUDA. But with the GPU shortage and high cost, you need GPUs rented at affordable prices to make the economics work. SaladCloud’s network of 10,000+ Nvidia consumer GPUs has the lowest prices in the market and is a perfect fit for YOLOv8. Deploying YOLOv8 on SaladCloud democratizes high-end object detection, offering it on a scalable, cost-effective cloud platform for mainstream use. With GPUs starting at $0.02/hour, Salad offers businesses and developers an affordable solution for sophisticated object detection at scale.

A deep dive into live stream video analysis with YOLOv8

This benchmark harnesses YOLOv8 to analyze not only pre-recorded but also live video streams. The process begins by capturing a live stream link, followed by real-time object detection and tracking. Using GPUs on SaladCloud, we can process each video frame in less than 10 milliseconds, which is 10 times faster than using a CPU.
Each frame’s data is meticulously compiled, yielding a detailed dataset that provides timestamps, classifications, and other critical metadata. As a result, we get a summary of all the objects present in the video.

How to run YOLOv8 on SaladCloud’s GPUs

We built a FastAPI application with a dual role: it processes video streams in real time and offers interactive documentation via Swagger UI. You can process live streams from YouTube, RTSP, RTMP, and TCP, as well as regular videos. All the results will be saved in an Azure storage account you specify. All you need to do is send an API call with the video link, a flag indicating whether the video is a live stream, the storage account information, and how often you want to save the results. We also integrated multithreading capabilities, allowing multiple video streams to be processed simultaneously.

Deploying on SaladCloud

In our step-by-step guide, you can go through the full deployment journey on SaladCloud. We configured container groups, set up efficient networking, and ensured secure access. Deploying the FastAPI application on Salad proved to be not just technically feasible but also cost-effective, highlighting the platform’s efficiency.

Price comparison: Processing live streams and videos on Azure and Salad

When it comes to deploying object detection models, especially for tasks like processing live streams and videos, understanding the cost implications of different cloud services is crucial. Let’s compare prices for our live stream object detection project.

Context and Considerations

Live Stream Processing: Live streams are unique in that they can only be processed as the data is received. Even with the best GPUs, the processing is limited to the current feed rate.

Azure’s Real-Time Endpoint: We assume the use of an ML Studio real-time endpoint in Azure for a fair comparison. This setup aligns with a synchronous process that doesn’t require a full dedicated VM.
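
Because a live stream can only be processed at its feed rate, the GPU sits idle for part of every second. The sketch below is a rough back-of-the-envelope illustration of that point: the 10 millisecond per-frame time is the upper bound quoted earlier, the 30 frames per second feed rate is a hypothetical value, and decoding and network overhead are ignored. The function names are made up for this example.

```python
def gpu_busy_fraction(stream_fps: float, seconds_per_frame: float) -> float:
    """Fraction of each second the GPU spends on one live stream (capped at 1)."""
    return min(stream_fps * seconds_per_frame, 1.0)


def max_concurrent_streams(stream_fps: float, seconds_per_frame: float) -> int:
    """How many such streams fit on one GPU before frames start to queue up."""
    return int(1.0 / (stream_fps * seconds_per_frame))


if __name__ == "__main__":
    fps = 30.0           # hypothetical feed rate
    per_frame = 0.010    # 10 ms per frame, the upper bound quoted above
    print(f"busy fraction: {gpu_busy_fraction(fps, per_frame):.0%}")
    print(f"streams per GPU: {max_concurrent_streams(fps, per_frame)}")
```

Under these assumptions a single stream keeps the GPU busy about 30% of the time, which is why processing several streams at once on the same node can make sense.
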
Azure Pricing Overview

We will now compare the compute prices in Azure and Salad. Note that in Azure you cannot pick RAM, vCPU, and GPU memory separately. You can only pick preconfigured computes. With Salad, you can pick exactly what you need.

Lowest GPU Compute in Azure: For our price comparison, we’ll start by looking at Azure’s lowest GPU compute price, keeping in mind the closest model to our solution is YOLOv5.

1. Processing a Live Stream

| Service | Configuration | Cost per hour | Remarks |
| --- | --- | --- | --- |
| Azure | 4 core, 16GB RAM (No GPU) | $0.19 | General purpose compute, no dedicated GPU |
| Salad | 4 vCores, 16GB RAM | $0.032 | Equivalent to Azure’s general compute |

Percentage Cost Difference for General Compute: Salad is approximately 83% cheaper than Azure for general compute configurations.

2. Processing with GPU Support

This is the GPU Azure recommends for YOLOv5.

| Service | Configuration | Cost per hour | Remarks |
| --- | --- | --- | --- |
| Azure | NC16as_T4_v3 (16 vCPU, 110GB RAM, 1 GPU) | $1.20 | Recommended for YOLOv5 |
| Salad | Equivalent GPU Configuration | $0.326 | Salad’s equivalent GPU offering |

Percentage Cost Difference for GPU Compute: Salad is approximately 73% cheaper than Azure for similar GPU configurations.

YOLOv8 deployment on GPUs in just a few clicks

You can deploy YOLOv8 in production on SaladCloud’s GPUs in just a few clicks. Simply download the code from our GitHub repository or pull our ready-to-deploy Docker container from the Salad Portal. It’s as straightforward as it sounds: download, deploy, and you’re on your way to exploring the capabilities of YOLOv8 in real-world scenarios. Check out the SaladCloud documentation for quick guides on how to start using our batch or synchronous solutions.

Check out our step-by-step guide

For a comprehensive walkthrough of how to deploy YOLOv8 on SaladCloud, check out our step-by-step guide. The process is fully customizable to your needs. Follow along, make modifications, and experiment to your heart’s content.
Our guide is designed to be flexible, allowing you to adjust and enhance the deployment of YOLOv8 according to your project requirements or curiosity. We are excited about the potential enhancements and extensions of this project. Future considerations include broadening cloud integrations, delving into custom model training, and exploring batch processing capabilities.
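
The percentage differences in the price comparison follow directly from the hourly prices in the two tables. A minimal check, using a hypothetical helper named `percent_cheaper`:

```python
def percent_cheaper(reference_price: float, cheaper_price: float) -> float:
    """How much cheaper `cheaper_price` is, as a percentage of `reference_price`."""
    return (reference_price - cheaper_price) / reference_price * 100


if __name__ == "__main__":
    # Hourly prices taken from the comparison tables (Azure first, then Salad).
    print(f"general compute: {percent_cheaper(0.19, 0.032):.0f}% cheaper")
    print(f"GPU compute: {percent_cheaper(1.20, 0.326):.0f}% cheaper")
```

This reproduces the roughly 83% and 73% savings quoted for the general compute and GPU configurations.
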

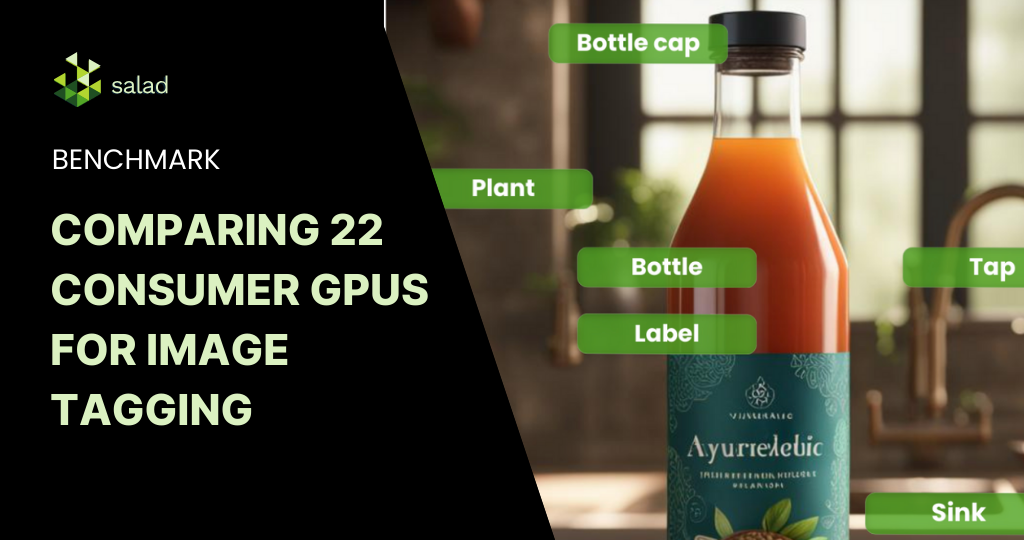

Comparing Price-Performance of 22 GPUs for AI Image Tagging (GTX vs RTX)

Older Consumer GPUs: A Perfect-Fit for AI Image Tagging

In the current AI boom, there’s a palpable excitement around sophisticated image generation models like Stable Diffusion XL (SDXL) and the cutting-edge GPUs that power them. These models often require more powerful GPUs with larger amounts of vRAM. However, while the industry is abuzz with these advancements, we shouldn’t overlook the potential of older GPUs, especially for tasks like image tagging and search embedding generation. These processes, employed by image generation platforms like Civit.ai and Midjourney, play a crucial role in enhancing search capabilities and overall user experience. We leveraged Salad’s distributed GPU cloud to evaluate the cost-performance of this task across a wide range of hardware configurations.

What is AI Image Tagging?

AI image tagging is a technology that can automatically identify and label the content of images, such as objects, people, places, colors, and more. This helps users to organize, search, and discover their images more easily and efficiently, and it can be used for a variety of purposes and applications.

Benchmarking 22 Consumer-Grade GPUs for AI Image Tagging

In designing the benchmark, our primary objective was to ensure a comprehensive and unbiased evaluation. We selected a range of GPUs on SaladCloud, starting from the GTX 1050 and extending up to the RTX 4090, to capture a broad spectrum of performance capabilities. Each node in our setup was equipped with 16 vCPUs and 7 GB of RAM, ensuring a standardized environment for all tests. For the datasets, we chose two prominent collections from Kaggle: the AVA Aesthetic Visual Assessment and the COCO 2017 Dataset. These datasets offer a mix of aesthetic visuals and diverse object categories, providing a robust testbed for our image tagging and search embedding generation tasks.
We used ConvNextV2 Tagger V2 to generate tags and ratings for images, and CLIP to generate embedding vectors. The tagger model used the ONNX runtime, while CLIP used Transformers with PyTorch. ONNX’s GPU capabilities are not a great fit for Salad, because of inconsistent Nvidia driver versions across the network, so we chose to go with the CPU runtime and to allocate 16 vCPUs for each node. PyTorch with Transformers works quite well across a large range of GPUs and driver versions with no additional configuration, so CLIP was run on GPU.

Benchmark Results: GTX 1650 is the Surprising Winner

As expected, our nodes with higher-end GPUs took less time per image, with the flagship RTX 4090 offering the best performance. What is interesting, though, is that the median time per image is actually very similar for the GTX 1650 and the RTX 4090: 1 second. The best-case and worst-case performance of the 4090 is notably better.

Weighting our findings by cost, we can confirm our intuition that the 1650 is a much better value at $0.02/hr than the 4090 at $0.30/hr. While older GPUs like the GTX 1650 have worse absolute performance compared to the RTX 4090, the great difference in price makes the older GPUs the best value, as long as your use case can withstand the additional latency. In fact, we see all GTX series GPUs outperforming all RTX GPUs in terms of images tagged per dollar.

GTX Series: The Cost-Effective Option for AI Image Tagging with 3x More Images Tagged per Dollar

In the ever-advancing realm of AI and GPU technology, the allure of the latest hardware often overshadows the nuanced decisions that drive optimal performance. Our analysis not only emphasizes the balance between raw performance and cost-effectiveness but also resonates with broader cloud best practices.
Just as it’s pivotal not to oversubscribe to compute resources in cloud environments, it’s equally essential to avoid overcommitting to high-end GPUs when more cost-effective options can meet the requirements. The GTX 1650’s value proposition, especially for tasks with flexible latency needs, serves as a testament to this principle, delivering 3x as many images tagged per dollar as the RTX 4090. As we navigate the expanding AI applications landscape, making judicious hardware choices based on comprehensive real-world benchmarks becomes paramount. It’s a reminder that the goal isn’t always about harnessing the most powerful tools, but rather the most appropriate ones for the task and budget at hand.

Run Your Image Tagging on Salad’s Distributed Cloud

If you are running AI image tagging or any AI inference at scale, Salad’s distributed cloud has 10,000+ GPUs at the lowest price in the market. Sign up for a demo with our team to discuss your specific use case.

Shawn Rushefsky is a passionate technologist and systems thinker with deep experience across a number of stacks. As Generative AI Solutions Architect at Salad, Shawn designs resilient and scalable generative AI systems to run on our distributed GPU cloud. He is also the founder of Dreamup.ai, an AI image generation tool that donates 30% of its proceeds to artists.
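
The images-per-dollar comparison in the benchmark results comes down to throughput divided by price. The sketch below uses the two hourly prices quoted above ($0.02 for the GTX 1650 and $0.30 for the RTX 4090); the function names are hypothetical, and the implied throughput ratio at the end is an inference from the reported 3x figure, not a measured number.

```python
def images_per_dollar(images_per_hour: float, price_per_hour: float) -> float:
    """Throughput divided by price."""
    return images_per_hour / price_per_hour


def break_even_speedup(expensive_price: float, cheap_price: float) -> float:
    """Throughput multiple the pricier GPU needs to match the cheaper one per dollar."""
    return expensive_price / cheap_price


if __name__ == "__main__":
    needed = break_even_speedup(0.30, 0.02)
    print(f"the RTX 4090 must tag {needed:.0f}x more images per hour to break even")

    # If the GTX 1650 delivers 3x the images per dollar, the implied average
    # throughput advantage of the RTX 4090 node is the price ratio divided by 3.
    print(f"implied RTX 4090 throughput advantage: about {needed / 3:.0f}x")
```

In other words, a GPU that costs 15 times as much per hour has to be 15 times faster to deliver the same value, and a 3x gap in images per dollar corresponds to roughly a 5x gap in average throughput.
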

The AI GPU Shortage: How Gaming PCs Offer a Solution and a Challenge

Reliability in Times of AI GPU Shortage

In the world of cloud computing, leading providers have traditionally utilized expansive, state-of-the-art data centers to ensure top-tier reliability. These data centers, boasting redundant power supplies, cooling systems, and vast network infrastructures, often promise uptime figures ranging from 99.9% to 99.9999%, terms you might have heard as “Three Nines” to “Six Nines.” For those who have engaged with prominent cloud providers, these figures are seen as a gold standard of reliability.

However, the cloud computing horizon is expanding. Harnessing the untapped potential of idle gaming PCs is not only a revolutionary departure from conventional models but also a timely response to the massive compute demands of burgeoning AI businesses. The “AI GPU shortage” is everywhere today as GPU-hungry businesses fight for affordable, scalable computational power. Leveraging gaming PCs, which are often equipped with high-performance GPUs, provides an innovative solution to meet these growing demands.

While this fresh approach offers unparalleled GPU inference rates and wider accessibility, it also presents a unique set of reliability factors to consider. The decentralized nature of a system built on individual gaming PCs does introduce variability. A single gaming PC might typically offer reliability figures between 90-95% (1 to 1.5 nines). At first glance, this might seem significantly different from the high “nines” many are familiar with. However, it’s crucial to recognize that we’re comparing two different models. While an individual gaming PC might occasionally face challenges, from software issues to local power outages, the collective strength of the distributed system ensures redundancy and robustness on a larger scale. When exploring our cloud solutions, it’s essential to view reliability from a broader perspective.
Instead of concentrating solely on the performance of individual nodes, we highlight the overall resilience of our distributed system. This approach offers a deeper insight into our next-generation cloud infrastructure, blending cost-efficiency with reliability in a way that suits the computational needs of modern AI-driven businesses and helps to solve the ongoing AI GPU shortage.

Exploring the New Cloud Landscape

Embracing Distributed Systems

Unlike traditional centralized systems, distributed clouds, particularly those harnessing the power of gaming PCs, operate on a unique paradigm. Each node in this setup is a personal computer, potentially scattered across the globe, rather than being clustered in a single data center.

Navigating Reliability Differences

Nodes based on gaming PCs might individually present a reliability range of 90-95%. Several factors influence this figure.

Unpacking the Benefits of Distributed Systems

Global Redundancy Amidst Climate Change

The diverse geographical distribution of nodes (geo-redundancy) offers an inherent safeguard against the increasing unpredictability of climate change. As extreme weather events, natural disasters, and environmental challenges become more frequent, centralized data centers in vulnerable regions are at heightened risk of disruptions. However, with nodes spread across various parts of the world, the distributed cloud system ensures that if one region faces climate-induced challenges or outages, the remaining global network can compensate, maintaining continuous availability. This decentralized approach not only ensures business continuity in the face of environmental uncertainties but also underscores the importance of forward-thinking infrastructure planning in our changing world.

Seamless Scalability

Distributed systems are designed for effortless horizontal scaling. Integrating more nodes into a group is a straightforward process.
Fortifying Against Localized Disruptions

Understanding the resilience against localized disruptions is pivotal when appreciating the strengths of distributed systems. This is especially evident when juxtaposed against potential vulnerabilities of a centralized model, like relying solely on a specific AWS region such as US-East-1.

Catering to AI’s Growing Demands

Harnessing idle gaming PCs is not just innovative but also a strategic response to the escalating computational needs of emerging AI enterprises. As AI technologies advance, the quest for affordable, scalable computational power intensifies. Gaming PCs, often equipped with high-end GPUs, present an ingenious solution to this challenge.

Achieving Lower Latency

The vast geographic distribution of nodes means data can be processed or stored closer to end-users, potentially offering reduced latency for specific applications.

Cost-Effective Solutions

Tapping into the dormant resources of idle gaming PCs can lead to substantial cost savings compared to the expenses of maintaining dedicated data centers.

The Collective Reliability Factor

While individual nodes might have a reliability rate of 90-95%, the combined reliability of the entire system can be significantly higher, thanks to redundancy and the sheer number of nodes.

Consider this analogy: Flipping a coin has a 50% chance of landing tails. But flipping two coins simultaneously reduces the probability of both landing tails to 25%. For three coins, it’s 12.5%, and so on.

Applying this to our nodes, if each node has a 10% chance of being offline, the probability of two nodes being offline simultaneously is just 1%. As the number of nodes increases, the likelihood of all of them being offline simultaneously diminishes exponentially. Thus, as the size of a network grows, the chances of the entire system experiencing downtime decrease dramatically.
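
The coin analogy can be written down directly. The sketch below assumes, as the analogy does, that nodes fail independently of one another; real outages can be correlated, so treat the numbers as an idealized illustration. The function names are hypothetical.

```python
from math import comb


def prob_all_offline(p_offline: float, nodes: int) -> float:
    """Probability that every one of `nodes` independent nodes is offline at once."""
    return p_offline ** nodes


def prob_at_least_online(nodes: int, minimum_online: int, p_online: float) -> float:
    """Binomial tail: probability that at least `minimum_online` nodes are up."""
    p_off = 1.0 - p_online
    return sum(
        comb(nodes, k) * p_online ** k * p_off ** (nodes - k)
        for k in range(minimum_online, nodes + 1)
    )


if __name__ == "__main__":
    print(prob_all_offline(0.5, 3))    # three coins all landing tails
    print(prob_all_offline(0.1, 2))    # two nodes offline at the same time
    print(prob_all_offline(0.1, 10))   # ten nodes offline at the same time
    print(prob_at_least_online(100, 82, 0.90))
```

The first function reproduces the 12.5% and 1% figures from the analogy. The second answers a more practical question, namely how likely it is that enough nodes are online to carry a workload, for any node count and per-node availability you want to plug in.
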
Even if individual nodes occasionally falter, the distributed nature of the system ensures its overall availability remains impressively high.

Here is a real example: 24 hours sampled from a production AI image generation workload with 100 requested nodes. As we would expect, it’s fairly uncommon for all 100 to be running at the same time, but 100% of the time we have at least 82 live nodes. For this customer, 82 simultaneous nodes offered plenty of throughput to keep up with their own internal SLOs, and provided a 0-downtime experience.

Gaming PCs as a Robust, High-Availability Solution for AI GPU Shortage

While gaming PC nodes might seem to offer modest reliability compared to enterprise servers, when viewed as part of a distributed system, they present a robust, high-availability solution. This system, with its inherent benefits of redundancy, scalability, and resilience, can be expertly managed to provide a formidable alternative to traditional centralized systems. By leveraging the untapped potential of gaming PCs, we not only address the growing computational demands of industries like AI but also pave the way for a more resilient, cost-effective, and globally

Stable Diffusion XL (SDXL) Benchmark – 769 Images Per Dollar on Salad

Stable Diffusion XL (SDXL) Benchmark

A couple of months back, we showed you how to get almost 5000 images per dollar with Stable Diffusion 1.5. Now, with the release of Stable Diffusion XL, we’re fielding a lot of questions regarding the potential of consumer GPUs for serving SDXL inference at scale. The answer from our Stable Diffusion XL (SDXL) Benchmark: a resounding yes.

In this benchmark, we generated 60.6k hi-res images with randomized prompts, on 39 nodes equipped with RTX 3090 and RTX 4090 GPUs. We saw an average image generation time of 15.60s, at a per-image cost of $0.0013. At 769 SDXL images per dollar, consumer GPUs on Salad’s distributed cloud are still the best bang for your buck for AI image generation, even when enabling no optimizations on Salad, and all optimizations on AWS.

Architecture

We used an inference container based on SDNext, along with a custom worker written in TypeScript that implemented the job processing pipeline. The worker used HTTP to communicate with both the SDNext container and our simple batch processing framework.

Deployment on Salad

We set up a container group targeting nodes with 4 vCPUs, 32GB of RAM, and GPUs with 24GB of VRAM, which includes the RTX 3090, 3090 Ti, and 4090. We filled a queue with randomized prompts that followed a fixed format. We used ChatGPT to generate roughly 100 options for each variable in the prompt, and queued up jobs with 4 images per prompt.

SDXL is composed of two models, a base and a refiner. We generated each image at 1216 x 896 resolution, using the base model for 20 steps, and the refiner model for 15 steps. You can see the exact settings we sent to the SDNext API.
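
The headline numbers are linked by simple arithmetic, which the short sketch below checks. It uses only figures reported in this benchmark (60,600 images at $0.0013 per image, with 20 base steps and 15 refiner steps); the variable names are chosen for this example.

```python
IMAGES_GENERATED = 60_600   # images produced during the benchmark
COST_PER_IMAGE = 0.0013     # reported per-image cost in USD
BASE_STEPS = 20             # steps run on the SDXL base model
REFINER_STEPS = 15          # steps run on the SDXL refiner model

images_per_dollar = 1 / COST_PER_IMAGE
estimated_total_cost = IMAGES_GENERATED * COST_PER_IMAGE
steps_per_image = BASE_STEPS + REFINER_STEPS

if __name__ == "__main__":
    print(f"images per dollar: {int(images_per_dollar)}")
    print(f"estimated total cost: ${estimated_total_cost:.2f}")
    print(f"denoising steps per image: {steps_per_image}")
```

A per-image cost of $0.0013 works out to 769 whole images per dollar, and 60,600 images at that rate come to just under $79.
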
Results – 60,600 Images for $79

Over the benchmark period, we generated more than 60k images and uploaded more than 90GB of content to our S3 bucket, incurring only $79 in charges from Salad. That is far less expensive than using an A10G on AWS, and orders of magnitude cheaper than fully managed services like the Stability API.

We did see slower image generation times on consumer GPUs than on datacenter GPUs, but the cost differences give Salad the edge. While an optimized model on an A100 did provide the best image generation time, it was by far the most expensive per image of all methods evaluated.

Grab a fork and see all the salads we made here on our GitHub page.

Future Improvements

In the comparison, we gave AWS several advantages that we did not implement in the container we ran on Salad. In particular, torch.compile isn’t practical on Salad, because it adds 40+ minutes to the container’s start time, and Salad’s nodes are ephemeral. However, such a long start time might be an acceptable tradeoff in a datacenter context with dedicated nodes that can be expected to stay up for a very long time, so we did use torch.compile on AWS.

Additionally, we used the default fp32 variational autoencoder (VAE) in our Salad worker and an fp16 VAE in our AWS worker, giving another performance edge to the legacy cloud provider. Unlike recompiling the model at start time, including an alternate VAE would be practical on Salad, and it is an optimization we would pursue in future projects.

Salad Cloud – Still The Best Value for AI/ML Inference at Scale

SaladCloud remains the most cost-effective platform for AI/ML inference at scale. The recent benchmarking of Stable Diffusion XL further highlights the competitive edge this distributed cloud platform offers, even as models get larger and more demanding.

Shawn Rushefsky

Shawn Rushefsky is a passionate technologist and systems thinker with deep experience across a number of stacks.
As Generative AI Solutions Architect at Salad, Shawn designs resilient and scalable generative AI systems to run on our distributed GPU cloud. He is also the founder of Dreamup.ai, an AI image generation tool that donates 30% of its proceeds to artists.

GPU shortage isn’t the problem for Generative AI. GPU selection is.

Are we truly out of GPU compute power, or are we just looking in the wrong places for the wrong type of GPUs? Recently, the GPU shortage has been in the news everywhere. Just take a peek at the many articles on the topic from The Information, IT Brew, the Wall Street Journal, and a16z. The explosive growth of generative AI has created a mad rush and long wait times for AI-focused GPUs. For growing AI companies serving inference at scale, a shortage of such GPUs is not the real problem. Selecting the right GPU is.

AI Inference Scalability and the “right-sized” GPU

Today’s ‘GPU shortage’ is really a function of inefficient usage and of overpaying for GPUs that don’t align with the application’s needs for at-scale AI. The marketing machines at large cloud companies and hardware manufacturers have managed to convince developers that they ABSOLUTELY NEED the newest, most powerful hardware available in order to be a successful AI company.

The A100s and H100s, perfect for training and advanced models, certainly deserve the tremendous buzz for being the fastest, most advanced GPUs. But there aren’t enough of these GPUs to go around, and when they are available, getting access requires pre-paying or having an existing contract. A recent article by SemiAnalysis has two points that confirm this:

Meanwhile, GPU benchmark data suggests that there are many use cases where you don’t need the newest, most powerful GPUs. Consumer-grade GPUs (RTX 3090, A5000, RTX 4090, etc.) not only have high availability but also deliver more inferences per dollar, significantly reducing your cloud cost.

Selecting the “right sized” GPU at the right stage puts generative AI companies on the path to profitable, scalable growth and lower cloud costs, and makes them immune to ‘GPU shortages’.

How to Find the “Right Sized” GPU

When it comes to determining the “right sized” GPU for your application, there are several factors to consider. The first step is to evaluate the needs of your application at each stage of an AI model’s lifecycle.
This means taking into account the varying compute, networking, and storage requirements for tasks such as data preprocessing, training, and inference.

Training Models

Training machine learning models commonly requires large amounts of computational resources, including high-powered graphics processing units (GPUs) that can number from hundreds to thousands. These GPUs need to be connected through lightning-fast networks in specially designed clusters, which are optimized for machine learning and can handle the intense computational demands of the training stage.

Example: Training Stable Diffusion (Approximate Cost: $600k)

Serving Models (Inference)

When it comes to serving your model, scalability and throughput are particularly crucial. By carefully considering these factors, along with budgetary constraints and architectural considerations, you can ensure that your infrastructure is able to accommodate the needs of your growing user base.

It’s worth noting that there are many cases in which the GPU requirements for serving inference are significantly lower than those for training. Despite this, many people continue to use the same GPUs for both tasks. This can lead to inefficiencies, as the hardware may not be optimized for the unique demands of each task. By taking the time to assess your infrastructure needs and make the necessary adjustments, you can ensure that your system operates as efficiently and effectively as possible.

Example 1: 6X more images per dollar on consumer-grade GPUs

In a recent Stable Diffusion benchmark, consumer-grade GPUs generated 4X-8X more images per dollar compared to AI-focused GPUs.
Most generative AI companies in the text-to-image space will be well served using consumer-grade GPUs for serving inference at scale. The economics and availability make them a winner for this use case.

Example 2: Serving Stable Diffusion XL (SDXL)

In the recent announcement introducing SDXL, Stability.ai noted that SDXL 0.9 can be run on a modern consumer GPU with just 16GB of RAM and a minimum of 8GB of VRAM.

Serving “Right Sized” AI Inference at Scale

At Salad, we understand the importance of being able to serve AI/ML inference at scale without breaking the bank. That’s why we’ve created a globally distributed network of consumer GPUs that is designed from the ground up to meet your needs. Our customers have found that turning to SaladCloud instead of relying on large cloud computing providers has allowed them not only to save up to 90% on their cloud costs, but also to improve their product offerings and reduce their DevOps time.

Example: Generating 9M+ images in 24 hours for only $1872

In a recent benchmark for a customer, we generated 9.2 million Stable Diffusion images in 24 hours for just $1872, all on Nvidia’s 3000/4000 series GPUs. That’s ~5000 images per dollar, leading to significant savings for this image generation company.

If it works on your system, it works on Salad. With SaladCloud, you won’t have to worry about costly infrastructure maintenance or unexpected downtime. Instead, you can focus on what really matters: serving your growing user base while remaining profitable. To see if your use case is a fit for consumer-grade GPUs, contact our team today.