Stable Diffusion Inference Benchmark on consumer-grade GPUs

Are high-end consumer-grade GPUs good for Stable Diffusion inference at scale? If so, what would be the daily cost for generating millions of images? Do you really need A10s, A100s, or H100s? In this Stable Diffusion benchmark, we answer these questions by launching a fine-tuned, Stable Diffusion-based application on SaladCloud.

The result: We scaled up to 750 replicas (GPUs), and generated over 9.2 million images using 3.62 TB of storage in 24 hours for a total cost of $1,872.

By generating 4,954 images per dollar, this benchmark shows that generative AI inference at scale on consumer-grade GPUs is practical, affordable, and a path to lower cloud costs. In this post, we’ll review the application architecture and model details, the deployment on SaladCloud with prompt details, and the inference results from the benchmark. In a subsequent post, we’ll provide a technical walkthrough and reference code that you can use to replicate this benchmark.
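The headline numbers can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this post. The per-GPU-hour figure is an assumption-laden estimate: it supposes that all 750 replicas ran for the full 24 hours and that the total cost covers nothing but those replicas.

```python
# Figures quoted in this post.
images_generated = 9_274_913   # image generation requests processed in 24 hours
total_cost_usd = 1_872         # total cost of the run
replicas = 750                 # GPUs targeted by the deployment
hours = 24

images_per_dollar = images_generated / total_cost_usd
cost_per_1k_images = 1_000 / images_per_dollar

# Assumption: every replica ran for the whole period, so this is a rough
# average rate per GPU-hour rather than a quoted price.
cost_per_gpu_hour = total_cost_usd / (replicas * hours)

# int() truncates, which matches how the post rounds images per dollar.
print(f"{int(images_per_dollar):,} images per dollar")
print(f"${cost_per_1k_images:.3f} per 1,000 images")
print(f"${cost_per_gpu_hour:.3f} per GPU-hour (estimated)")
```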

Application Architecture for Image Generation

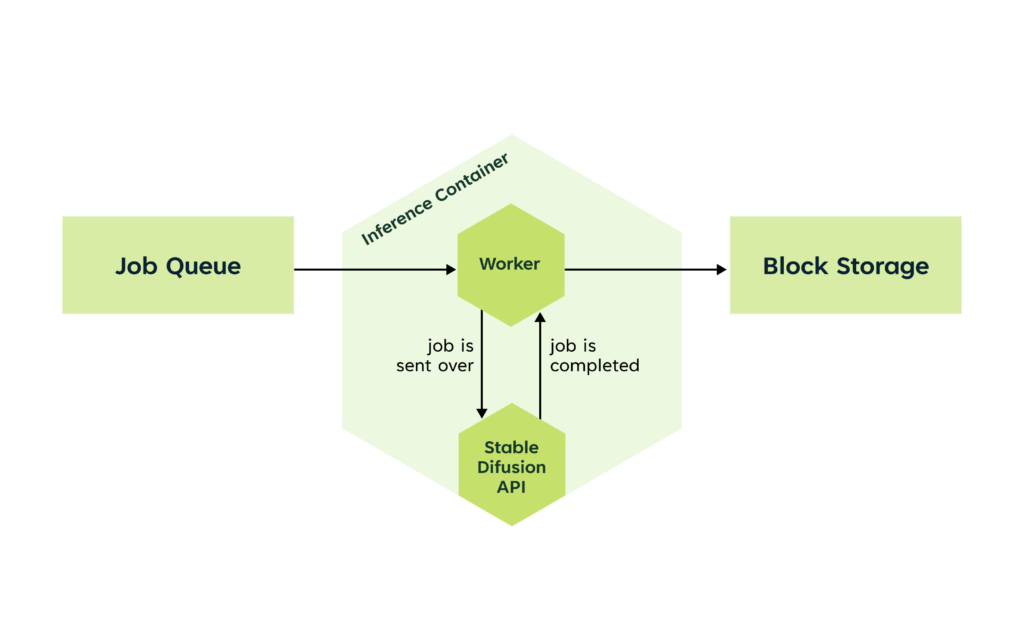

This benchmark was run for a SaaS-style generative AI tool that creates custom artwork. End users browse through categories of fine-tuned models, select a model, customize the prompt and parameters, and submit a job to generate one or more images. Once generated, the images are presented to the end user. We helped develop the inference container to demonstrate the potential of SaladCloud nodes for this use case. The following diagram provides a high-level depiction of the system architecture:

The major components include a web-based application (frontend and backend), a dedicated job queue, an inference container, and an object (blob) storage service. Azure Queue Storage was used for the job queue and provided FIFO scheduling. Azure Blob Storage provided the object storage. The following diagram provides a high-level depiction of the inference container architecture:

The container was based on Automatic1111’s Stable Diffusion Web UI. We wrote a custom worker in Go that implemented the job processing pipeline and added it to the container. The worker used the Azure SDK for Go to communicate with the Azure Queue Storage and Azure Blob Storage services. It processed jobs sequentially: first it polled the queue for a job, then it called the txt2img API endpoint provided by the Stable Diffusion Web UI server to generate the images, and finally it uploaded the images to the blob container.
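The poll, generate, upload loop described above can be sketched as follows. This is an illustration in Python, not the Go worker used in the benchmark: the function name `run_worker` and its three callables are hypothetical, and in-memory stand-ins replace the queue, the Web UI server, and blob storage so the example runs on its own.

```python
from typing import Callable, Optional

Job = dict  # a job is the JSON payload pulled from the queue


def run_worker(
    poll: Callable[[], Optional[Job]],
    generate: Callable[[Job], list],
    upload: Callable[[Job, list], None],
    max_jobs: int,
) -> int:
    """Process up to max_jobs jobs one at a time; return how many completed."""
    done = 0
    while done < max_jobs:
        job = poll()              # 1. pull the next job from the queue
        if job is None:           # empty queue: stop (a real worker would wait and retry)
            break
        images = generate(job)    # 2. call the txt2img endpoint (GPU busy)
        upload(job, images)       # 3. upload the results (GPU idle during this step)
        done += 1
    return done


# In-memory stand-ins for the queue, the image generator, and blob storage.
pending = [{"prompt": "photo of a jump rope"}, {"prompt": "photo of a kite"}]
uploaded = []

completed = run_worker(
    poll=lambda: pending.pop(0) if pending else None,
    generate=lambda job: [b"fake-png-bytes"],
    upload=lambda job, images: uploaded.append((job["prompt"], len(images))),
    max_jobs=10,
)
print(completed, uploaded)
```

Passing the three steps in as callables keeps the loop itself independent of any particular queue or storage SDK, which also makes it easy to test without a GPU.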

Deployment of Stable Diffusion on SaladCloud

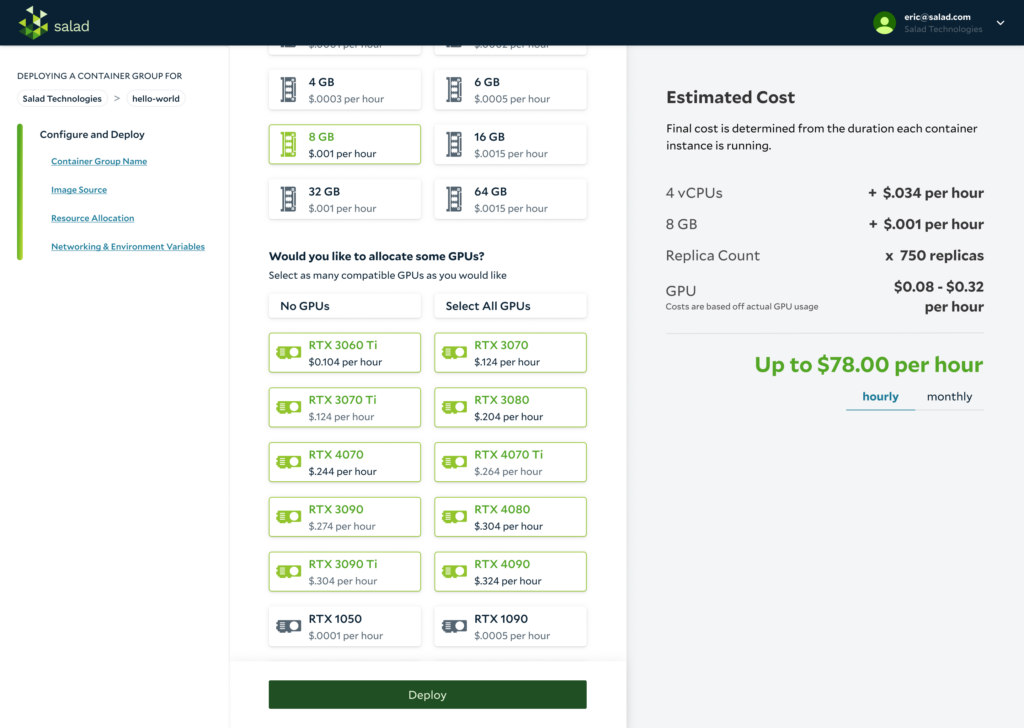

After building the inference container image, we created a SaladCloud managed container deployment using the web-based portal.

The deployment targeted 750 unique nodes with at least 4 vCPUs, at least 8 GB of RAM, and an NVIDIA RTX 2000, 3000, or 4000 series GPU with at least 8 GB of VRAM. Although SaladCloud allows for more targeted node selection, we decided to allow the scheduler to take the first available nodes with a compatible GPU based on unused network capacity. We also did not restrict the geographic distribution of the deployment.

The job queue was filled with 10,000,000 variable image generation prompts. The following is an example of one of the jobs:

{"prompt": "photo of a jump rope, <lora:magic-fantasy-forest-v2:0.35>, magic-fantasy-forest, digital art, most amazing artwork in the world, ((no humans)), volumetric light, soft balanced colours, forest scenery, vines, uhd, 8k octane render, magical, amazing, ethereal, intricate, intricate design, ultra sharp, shadows, cooler colors, trending on cgsociety, ((best quality)), ((masterpiece)), (detailed)",
"negative_prompt": "oversaturation, oversaturated colours, (deformed, distorted, disfigured:1.3), distorted iris, poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, (mutated hands and fingers:1.4), disconnected limbs, mutation, mutated, ugly, disgusting, blurry, amputation, human, man, woman",
"sampler_name": "k_euler_a", "steps": 15, "cfg_scale": 7}

Each job included a LoRA definition embedded in the text prompt, and each job used slightly varying concrete nouns and environment descriptions. The images produced were fixed to a size of 512×512 pixels, the sampler was fixed to Euler Ancestral, the number of steps fixed at 15, and the CFG scale fixed at 7.
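To make the split between fixed and varying parameters concrete, here is a minimal sketch of how such jobs could be assembled. The helper names `make_job` and `to_txt2img_payload` are hypothetical, the shortened tag and negative-prompt strings are placeholders, and passing the image size as `width` and `height` fields in the request is an assumption, since the example job above does not carry them.

```python
import json

# Parameters the post says were held constant across all jobs.
FIXED = {"sampler_name": "k_euler_a", "steps": 15, "cfg_scale": 7}
# Assumption: the 512x512 size is sent as explicit width/height fields.
IMAGE_SIZE = {"width": 512, "height": 512}


def make_job(subject: str, lora: str, weight: float, tags: str, negative: str) -> dict:
    """Build one queue message; only the subject and tags vary between jobs."""
    prompt = f"photo of a {subject}, <lora:{lora}:{weight}>, {tags}"
    return {"prompt": prompt, "negative_prompt": negative, **FIXED}


def to_txt2img_payload(job: dict) -> dict:
    """Add the fixed image size before sending the job to the txt2img endpoint."""
    return {**job, **IMAGE_SIZE}


job = make_job(
    subject="jump rope",
    lora="magic-fantasy-forest-v2",
    weight=0.35,
    tags="magic-fantasy-forest, digital art",   # shortened placeholder
    negative="blurry, human",                   # shortened placeholder
)
payload = to_txt2img_payload(job)
print(json.dumps(payload, indent=2))
```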

Stable Diffusion Benchmark Results – 9M+ images in 24 hours at $1,872

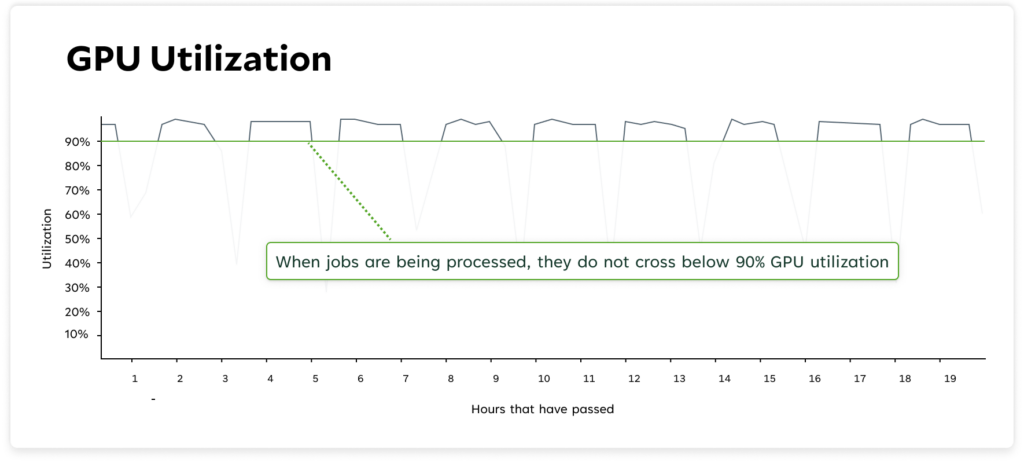

Over the 24-hour period, we processed a total of 9,274,913 image generation requests, producing 3.62 TB of content. Processing failures were minimal (e.g. transient network issues), and only 523 jobs had to be reprocessed. On average, we achieved an image generation cycle time of 7 seconds. The following mosaic is an example of just a few of the generated images:

It was fun browsing the generated images and observing the relative quality given the lack of time spent tuning and optimizing the parameters.
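The 7-second cycle time is consistent with the other figures reported above, as the rough check below shows. It assumes that all 750 replicas were active for the full 24 hours and that 3.62 TB means decimal terabytes; neither is stated explicitly, so treat the derived values as estimates.

```python
replicas = 750
seconds = 24 * 60 * 60          # length of the benchmark window
images = 9_274_913              # requests processed
total_bytes = 3.62e12           # 3.62 TB, assuming decimal terabytes
reprocessed = 523               # jobs that had to be processed again

# Average time one replica spent per image (poll + generate + upload).
cycle_time_s = replicas * seconds / images
# Average size of one generated image.
avg_image_kb = total_bytes / images / 1_000
# Share of jobs that needed a second attempt.
retry_rate = reprocessed / images

print(f"cycle time: {cycle_time_s:.2f} s per image per replica")
print(f"average image size: {avg_image_kb:.0f} KB")
print(f"reprocessed jobs: {retry_rate:.4%}")
```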

Future Improvements

This demonstration yielded exciting results, showing that for Stable Diffusion inference at scale, consumer-grade GPUs are not just capable but also cost-effective. That said, it was far from optimized. There are a number of technical tasks we could undertake to improve performance. Notably, we implemented the worker quickly and settled on a loop that sequentially pulled a job, generated an image, and uploaded the image. With this implementation, the GPU sits idle while we wait for network I/O.

If we pipelined jobs instead, eagerly pulling an extra one from the queue and overlapping the network I/O with the next image generation request, we estimate an improvement of at least 10% in overall job throughput. At the same total cost, this would push us over 10M images generated in a day.
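One way such a pipeline could look is sketched below. It is not the benchmark's worker and has not been measured: `run_pipelined` and its callables are hypothetical names, and a small thread pool stands in for asynchronous queue and storage calls. The next job is prefetched and the previous upload runs in the background while the current image is generated.

```python
from concurrent.futures import ThreadPoolExecutor


def run_pipelined(poll, generate, upload, max_jobs):
    """Overlap queue polling and uploads with image generation."""
    done = 0
    with ThreadPoolExecutor(max_workers=2) as io_pool:
        next_job = io_pool.submit(poll)          # eagerly fetch the first job
        pending_upload = None
        while done < max_jobs:
            job = next_job.result()
            if job is None:                      # queue is empty
                break
            # Prefetch while the GPU works. If the loop stops at max_jobs, a real
            # worker would have to return this extra job to the queue.
            next_job = io_pool.submit(poll)
            images = generate(job)               # GPU-bound step runs on this thread
            if pending_upload is not None:
                pending_upload.result()          # surface errors from the previous upload
            pending_upload = io_pool.submit(upload, job, images)
            done += 1
        if pending_upload is not None:
            pending_upload.result()              # wait for the final upload
    return done


# In-memory stand-ins for the queue, the image generator, and blob storage.
pending = [{"prompt": f"photo of item {i}"} for i in range(3)]
uploaded = []
completed = run_pipelined(
    poll=lambda: pending.pop(0) if pending else None,
    generate=lambda job: [b"fake-png-bytes"],
    upload=lambda job, images: uploaded.append(job["prompt"]),
    max_jobs=10,
)

# Projection from the post's own estimate of a 10% throughput gain.
projected_images = 9_274_913 * 1.10
print(completed, uploaded, f"{projected_images:,.0f}")
```

With a sequential loop the cycle time is roughly generation time plus I/O time, while a pipelined loop approaches whichever of the two is larger, so the gain depends on how much of the 7-second cycle is network I/O.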

Generative AI and Inference Cost

Generative AI is a type of artificial intelligence that can create new content, like paintings, music, and writing. It works by learning from existing information to develop a model of patterns and relationships, and it has practical applications in generating unique and personalized content. It has become an increasingly popular technology thanks to the release of a number of open-source foundation models, many of which are trained on very large datasets. Combined with the relative ease of developing and applying fine-tunings and the low cost of running inference at scale, the democratization of generative AI is unlocking new applications at an incredible pace.

Specifically related to inference, many models still require significant computational resources to efficiently generate content. However, the leaps in processing power and resource capacity of consumer-grade GPUs have caught up with many applications of generative AI models.

Large cloud computing providers are expensive, and access to their enterprise-grade GPUs like A10s, A100s, or H100s is scarce. As a result, a growing number of customers are turning to SaladCloud, a distributed cloud computing environment made up of the world’s most powerful gaming PCs.

A rapidly expanding application of generative AI is creating images from a textual description. The “text to image” workflow can be used to generate assets for games, advertising/marketing campaigns, storyboards, and more. Stable Diffusion is one popular, open-source foundation model in the “text to image” space.

We are currently planning “image to image” and “audio to text” workflow demonstrations and benchmarks, which we will publish on our blog.

SaladCloud – the most affordable GPU cloud for Generative AI

This benchmark was run on SaladCloud, the world’s most affordable GPU cloud for generative AI inference and other computationally intensive applications. With more than 10,000 GPUs starting at $0.10/hour, SaladCloud has the lowest GPU prices in the market.

If high cloud bills and GPU availability are hampering your growth and profitability, SaladCloud can help with low prices and on-demand availability.

Recently, Daniel Sarfati, our Head of Product, sat down with Clay Pascal from LLM Utils to discuss how to choose the right GPU for Stable Diffusion. To learn more about GPU choice for Stable Diffusion, you can listen to the full audio interview.

Contact us for a personalized demo. To run your own models or pre-configured recipes of popular models (Stable Diffusion, Whisper, BERT, etc.), check out the SaladCloud Portal for a free trial.