Building on our inference benchmark of Parakeet TDT 1.1B on SaladCloud, and on ongoing improvements to the system architecture and implementation for batch jobs, we have achieved a 1000-fold cost reduction for AI transcription with Salad. This cost-performance comes while maintaining the same level of accuracy as other managed transcription services.

YouTube is the world’s most widely used video-sharing platform, featuring a wealth of public content, including talks, news, courses, and more. You may need to catch up quickly on a global event or summarize a topic without having time to watch each video individually. In addition, the millions of videos on YouTube are a gold mine of training data for many AI applications. Many companies need large-scale, batch AI transcription today, but cost is a prohibitive factor.

In this deep dive, we use publicly available YouTube videos as datasets, together with the high-speed ASR (Automatic Speech Recognition) model Parakeet TDT 1.1B, and explore how to build a batch-processing system for large-scale AI transcription of videos. The system draws on the substantial computational power of SaladCloud’s network of consumer GPUs, spread across a global, high-speed distributed network.

How to download YouTube videos for batch AI transcription

pytube is a lightweight Python library for handling YouTube videos, and it simplifies our tasks significantly.

First, pytube offers APIs for interacting with YouTube playlists, which are collections of videos usually organized around specific themes. Using these APIs, we can retrieve all the video URLs within a specific playlist.

Second, before downloading a video, we can access its metadata, including the title, video resolution, frames per second (fps), video codec, audio bit rate (abr), and audio codec. If a video offers an audio-only stream, we can improve efficiency by downloading only its audio. This approach reduces bandwidth requirements and saves substantial time, given that a video file is typically about ten times larger than its corresponding audio.

Below is the code snippet for downloading from YouTube:

    from pytube import Playlist, YouTube

    pl_url = "YOUTUBE_PLAYLIST_URL"

    pl = Playlist(pl_url)  # create a playlist object

    for video_url in pl.video_urls:  # iterate over each video URL in the playlist
        yt = YouTube(video_url)  # create a YouTube object

        # select the first audio-only stream
        audio = yt.streams.filter(only_audio=True).first()

        # download and save the audio file
        # (FILE_NAME is a placeholder; use a distinct name for each video)
        audio.download(output_path="DOWNLOAD_DIR", filename="FILE_NAME")

The audio files downloaded from YouTube primarily use the MPEG-4 audio (Mp4a) file format, commonly employed for streaming large audio tracks. We can convert these audio files from Mp4a to MP3, a format widely supported by ASR pipelines.

Additionally, the duration of audio files sourced from YouTube varies considerably, from a few minutes to tens of hours. To make use of a wide range of cost-effective GPU types and to optimize GPU utilization, it is essential to segment long audio into fixed-length clips before feeding them to the model. The per-clip results can then be aggregated into the final transcription.
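
The conversion and segmentation steps can be sketched as follows. This is a minimal illustration rather than the implementation used in the test: the 600-second clip length, the function names and the choice of the ffmpeg command-line tool are all assumptions. The code only builds the commands; running them requires ffmpeg to be installed.

```python
from typing import List, Tuple


def clip_boundaries(duration_s: float, clip_s: float = 600.0) -> List[Tuple[float, float]]:
    """Split [0, duration_s) into consecutive (start, end) windows of at most clip_s seconds."""
    if duration_s <= 0 or clip_s <= 0:
        return []
    bounds = []
    start = 0.0
    while start < duration_s:
        end = min(start + clip_s, duration_s)
        bounds.append((start, end))
        start = end
    return bounds


def ffmpeg_clip_commands(src: str, out_prefix: str, duration_s: float,
                         clip_s: float = 600.0) -> List[List[str]]:
    """Build one ffmpeg command per clip: seek, cut, drop video, encode as MP3."""
    commands = []
    for index, (start, end) in enumerate(clip_boundaries(duration_s, clip_s)):
        commands.append([
            "ffmpeg", "-y",
            "-ss", f"{start:.3f}",       # seek to the clip start
            "-t", f"{end - start:.3f}",  # keep only this clip's duration
            "-i", src,
            "-vn",                       # ignore any video stream
            "-codec:a", "libmp3lame",    # encode the audio as MP3
            f"{out_prefix}_{index:04d}.mp3",
        ])
    return commands
```

Each command list can be passed to `subprocess.run`. The zero-padded index in the output name keeps the clips in order, which matters later when the per-clip texts are merged.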

Advanced system architecture for massive video transcription

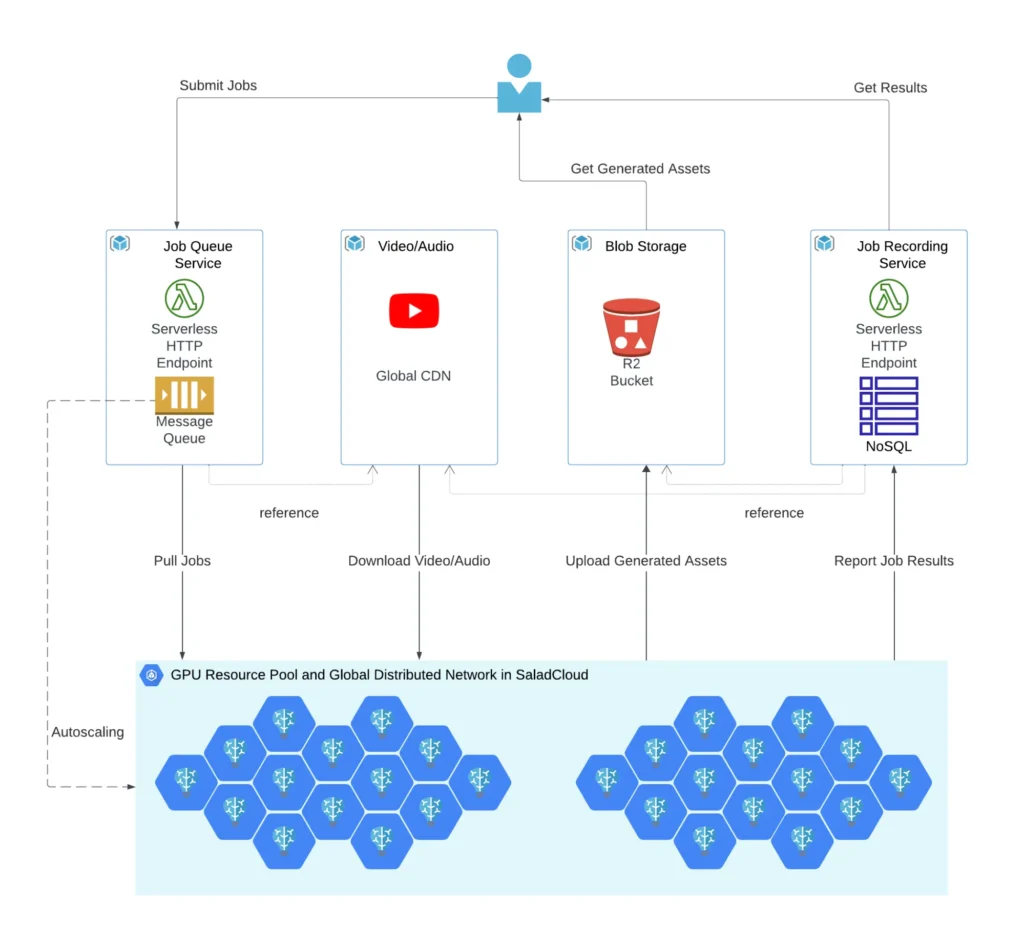

We can reuse our existing system architecture for audio transcription with a few enhancements:

- YouTube and its CDN: YouTube utilizes a Global Content Delivery Network (CDN) to distribute content efficiently. The CDN edge servers are strategically dispersed across various geographical locations, serving content in close proximity to users, and enhancing the speed and performance of applications.

- GPU Resource Pool and Global Distributed Network: Hundreds of Salad nodes, equipped with dedicated GPUs, are utilized for tasks such as downloading/uploading, pre-processing/post-processing and transcribing. These nodes assigned to GPU workloads are positioned within a global, high-speed distributed network infrastructure, and can effectively align with YouTube’s Global CDN, ensuring optimal system throughput.

- Cloud Storage: Generated assets are stored in Cloudflare R2, which is AWS S3-compatible and incurs zero egress fees.

- Job Queue System: The Salad nodes retrieve jobs from a message queue such as AWS SQS; each job provides playlist or video URLs on YouTube. Depending on the business logic, nodes can also access YouTube directly without a job queue.

- Job Recording System: Job results, including input URLs on YouTube, audio length, processing time, output text URLs on Cloudflare R2, etc., are stored in NoSQL databases like AWS DynamoDB.
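
To make the job record concrete, here is a small sketch of the fields listed above as a Python data structure. The class name, field names and JSON serialization are assumptions for illustration; the actual schema stored in DynamoDB is not given in this article.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class JobRecord:
    input_url: str            # YouTube video or playlist URL taken from the queue
    audio_length_s: float     # duration of the downloaded audio, in seconds
    processing_time_s: float  # wall-clock time spent on the job, in seconds
    output_text_url: str      # where the merged transcript was stored


def to_item(record: JobRecord) -> str:
    """Serialize a record to JSON, a shape that a document store could persist."""
    return json.dumps(asdict(record), sort_keys=True)


def realtime_factor(record: JobRecord) -> float:
    """Seconds of audio transcribed per second of processing."""
    return record.audio_length_s / record.processing_time_s
```

Keeping both the audio length and the processing time in each record makes it possible to compute per-node and per-GPU-type performance after the run.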

In a long-running batch-job system, auto scaling becomes crucial. By continuously monitoring the job count in the message queue, we can dynamically adjust the number of Salad nodes or groups. This adaptive approach allows us to respond to variations in system load, managing costs during periods of lower demand and increasing throughput during peak loads.
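
One simple way to turn the queue depth into a replica count is sketched below. The function, its parameters and the default limits are hypothetical; a real policy would also need to consider how quickly nodes start and how often the target is re-evaluated.

```python
import math


def desired_replicas(queued_jobs: int, jobs_per_replica: int,
                     min_replicas: int = 1, max_replicas: int = 100) -> int:
    """Target replica count: enough replicas to cover the backlog, clamped to limits."""
    if jobs_per_replica <= 0:
        raise ValueError("jobs_per_replica must be positive")
    needed = math.ceil(max(queued_jobs, 0) / jobs_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

For example, with `jobs_per_replica=50`, a backlog of 2,500 jobs maps to 50 replicas, while an empty queue falls back to the configured minimum.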

Enhanced node implementation for both video and audio AI transcription

The node implementation has been modified so that it can handle both video and audio for AI transcription.

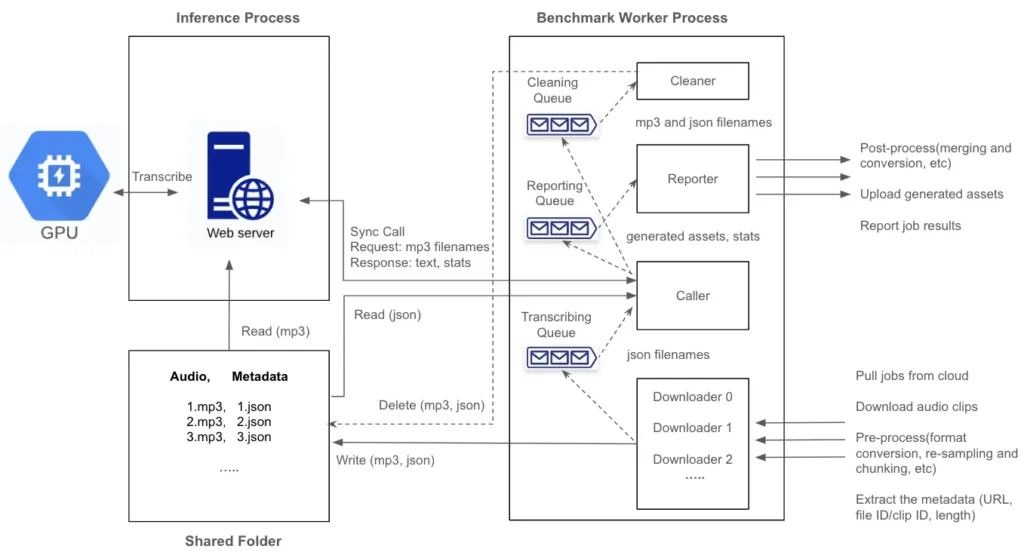

The inference process remains unchanged, running on a single thread and dedicated to GPU-based transcription. We have added features to the benchmark worker process, which runs multiple threads and handles the I/O- and CPU-bound tasks:

- The downloader threads download audio files from the video URLs on YouTube. They also convert the audio file format from Mp4a to MP3 and segment long audio into fixed-length clips.

- The reporter thread has been updated to identify all the generated texts from clips of the same audio file and merge the texts before returning the final transcription and reporting the job result.

- The caller and cleaner threads have not undergone any changes and continue to operate as before. The caller thread reads a task from the transcribing queue and calls the inference service. The simplicity of the caller is crucial as it directly influences the inference performance. The cleaner thread remains responsible for deleting the processed audio files.
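
The merging step performed by the reporter thread can be illustrated with a short sketch. The tuple layout `(audio_id, clip_index, text)` and the function name are assumptions; the idea is only that clip results may arrive in any order and must be joined in clip order per audio file.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def merge_transcripts(results: Iterable[Tuple[str, int, str]]) -> Dict[str, str]:
    """Group (audio_id, clip_index, text) results and join each group in clip order."""
    grouped = defaultdict(list)
    for audio_id, clip_index, text in results:
        grouped[audio_id].append((clip_index, text.strip()))
    return {
        audio_id: " ".join(text for _, text in sorted(clips))
        for audio_id, clips in grouped.items()
    }
```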

Running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks provides the flexibility to update each component independently. Introducing multiple threads in the benchmark worker process to handle different tasks eliminates waiting periods by fetching and preparing the next audio clips in advance while the current one is still being transcribed. Consequently, as soon as one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time and increases system throughput but also results in more significant cost savings.
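
The prefetching idea can be sketched with a bounded queue between a preparing thread and a transcribing loop. This is a simplified, single-process illustration with one downloader thread instead of several, and the `prepare` and `transcribe` callables are hypothetical stand-ins for the download/convert step and the call to the inference service.

```python
import queue
import threading
from typing import Callable, List

_STOP = object()  # sentinel that tells the consumer no more clips are coming


def run_pipeline(clip_ids: List[str],
                 prepare: Callable[[str], str],
                 transcribe: Callable[[str], str],
                 prefetch: int = 4) -> List[str]:
    """Prepare clips on a background thread while the calling thread transcribes."""
    ready = queue.Queue(maxsize=prefetch)  # bounded buffer of prepared clips

    def downloader() -> None:
        try:
            for clip_id in clip_ids:
                ready.put(prepare(clip_id))  # blocks while the buffer is full
        finally:
            ready.put(_STOP)  # always release the consumer, even on error

    worker = threading.Thread(target=downloader, daemon=True)
    worker.start()

    texts = []
    while True:
        clip = ready.get()
        if clip is _STOP:
            break
        texts.append(transcribe(clip))  # a single consumer keeps the GPU path simple
    worker.join()
    return texts
```

The bounded queue limits how far the downloader can run ahead, so local disk and memory use stay predictable while the transcribing side rarely has to wait for input.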

Massive YouTube video transcription tests on SaladCloud

We created a container group with 100 replicas (2vCPU and 12 GB RAM with 20+ different GPU types) in SaladCloud. The group was operational for approximately 10 hours, from 10:00 pm to 8:00 am PST during weekdays, successfully downloading and transcribing a total of 68,393 YouTube videos. The cumulative length of these videos amounted to 66,786 hours, with an average duration of 3,515 seconds.

Hundreds of Salad nodes around the world took part in the tasks. They sit on high-speed networks near the edges of the YouTube global CDN (with an average latency of 33 ms). This setup gives the nodes local access and supports high system throughput when downloading content from YouTube.

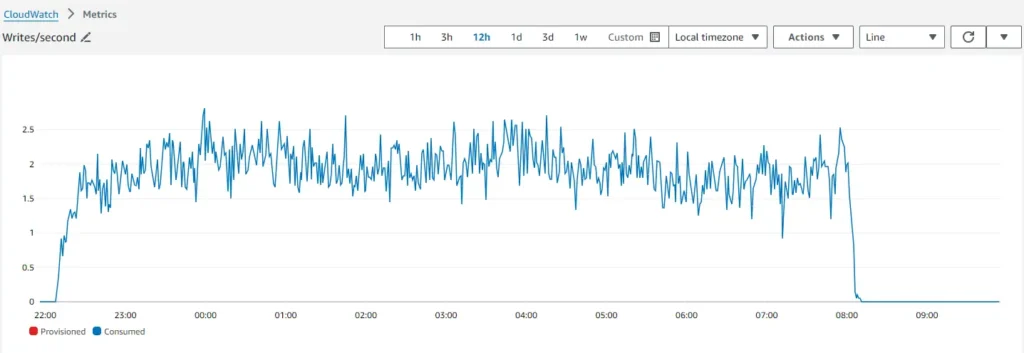

We used AWS DynamoDB metrics, specifically writes per second, to monitor the transcription jobs. According to these metrics, the system reached its maximum capacity roughly one hour after the container group was launched, processing approximately 2 videos (totaling 7,500 seconds) per second.

The selected YouTube videos for this test vary widely in length, ranging from a few minutes to over 10 hours, causing notable fluctuations in the processing curve.

Let’s compare the results of the two benchmark tests conducted on Parakeet TDT 1.1B for audio and video:

| | Parakeet Audio | Parakeet Video |
|---|---|---|
| Datasets | English CommonVoice and Spoken Wikipedia Corpus English | YouTube videos, including public talks, news and courses |
| Average Input Length (s) | 12 | 3515 |
| Cost on SaladCloud (GPU Resource Pool and Global Distribution Network) | Around $100; 100 replicas (2 vCPU, 12 GB RAM, 20+ GPU types) for 10 hours | Around $100; 100 replicas (2 vCPU, 12 GB RAM, 20+ GPU types) for 10 hours |
| Cost on AWS and Cloudflare (Job Queue/Recording System and Cloud Storage) | Around $20 | Around $2 |
| Node Implementation | 3 downloader threads; segmentation of long audio; merging texts | Download audio from YouTube playlists and videos; 3 downloader threads; segmentation of long audio; format conversion from Mp4a to MP3; merging texts |
| Number of Transcriptions | 5,209,130 | 68,393 |
| Total Input Length (s) | 62,299,198 (17,305 hours) | 240,427,792 (66,786 hours) |
| Most Cost-Effective GPU Type for transcribing long audio | RTX 3070 Ti; 794 hours per dollar | RTX 3070 Ti; 794 hours per dollar |
| System Throughput | Downloading and transcribing 1731 seconds of audio per second | Downloading and transcribing 6679 seconds of video per second |

Because both tests use the same ASR model, Parakeet TDT 1.1B, to transcribe segmented audio files, there is no significant difference in the individual node’s transcribing performance and cost-effectiveness. However, system throughput in the Parakeet video test was nearly four times that of the audio test (6679 versus 1731 seconds per second), primarily due to two key factors:

- The average input length for the Parakeet video test is 3515 seconds, much longer than the 12 seconds of the Parakeet audio test. Network transmission is significantly more efficient when downloading large files than when downloading numerous small files.

- In the Parakeet audio test, all global Salad nodes downloaded audio from Cloudflare R2 in the United States, and the prolonged latency significantly affected the transmission efficiency. In contrast, during the Parakeet video test, Salad nodes in various regions could download content locally from the nearest YouTube CDN edges. This local approach notably improved the system throughput.
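
The throughput and average-length figures in the comparison can be reproduced from the totals with a few lines of arithmetic. The only assumption is that each run lasted exactly 10 hours, which the article gives as an approximate figure.

```python
RUN_SECONDS = 10 * 3600  # both tests ran for roughly 10 hours (assumed exact here)

audio_total_s = 62_299_198   # Parakeet audio test, total input length in seconds
video_total_s = 240_427_792  # Parakeet video test, total input length in seconds
video_count = 68_393         # number of videos transcribed

audio_throughput = audio_total_s / RUN_SECONDS  # about 1,731 s of audio per second
video_throughput = video_total_s / RUN_SECONDS  # about 6,679 s of video per second
average_video_s = video_total_s / video_count   # about 3,515 s per video
speedup = video_throughput / audio_throughput   # a bit under 4x
```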

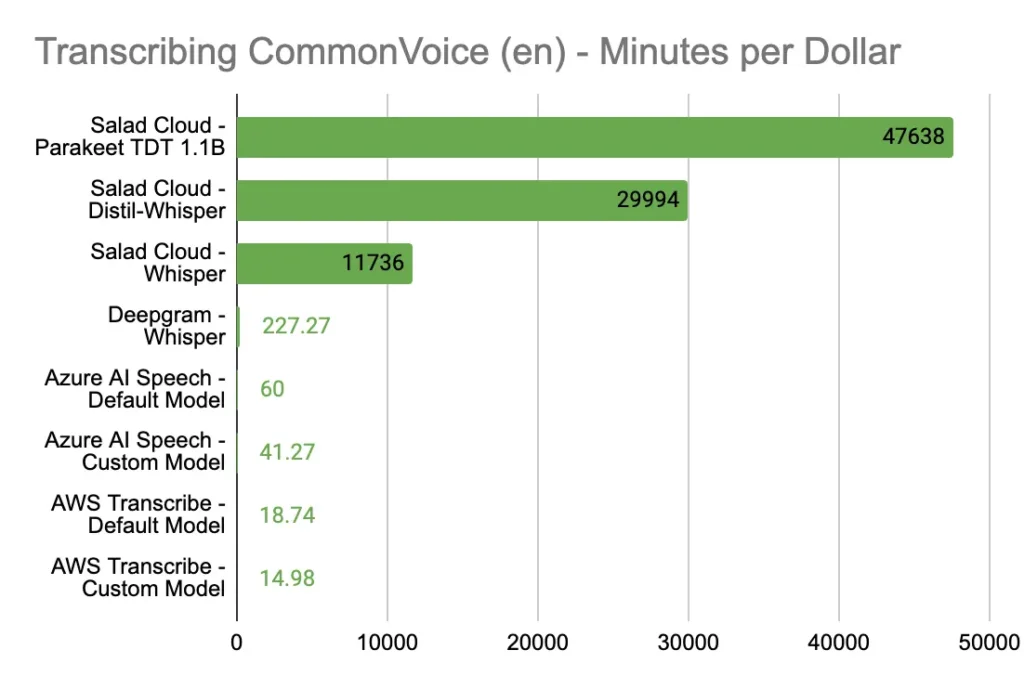

Performance comparison across different clouds

With the high-speed ASR model – Parakeet TDT 1.1B, and the advanced batch-processing framework leveraging hundreds of Consumer GPUs in SaladCloud and enhanced node implementation, we can deliver transcription services at a massive scale and at an extremely low cost, while maintaining the same level of accuracy as managed transcription services.

With the most cost-effective GPU type for Parakeet TDT 1.1B inference on SaladCloud, $1 can transcribe 47,638 minutes of audio (nearly 800 hours), a more than 1000-fold cost reduction compared to other public cloud providers.
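
As a quick check on these figures, the per-dollar numbers can be worked out directly. The second half is a rough whole-system estimate for the video test that treats the "around $100" and "around $2" costs from the comparison table as exact, which is an assumption.

```python
MINUTES_PER_DOLLAR = 47_638  # quoted for the most cost-effective GPU type

hours_per_dollar = MINUTES_PER_DOLLAR / 60  # just under 800 hours
dollars_per_hour = 1 / hours_per_dollar     # roughly a tenth of a cent

# Whole-system view of the video test: GPU nodes plus queue, database and storage.
total_cost_usd = 100 + 2  # approximate costs, treated as exact for this estimate
total_hours = 66_786      # total video length transcribed
system_hours_per_dollar = total_hours / total_cost_usd
```

The system-wide figure is lower than the best-GPU figure because the run mixed more than 20 GPU types and includes downloading and other overhead.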

When collaborating with other global networks, such as YouTube, we can fully leverage the advantages of the global and high-speed network provided by SaladCloud, thereby maximizing the system throughput.

SaladCloud: The most affordable GPU cloud for batch jobs

Consumer GPUs are considerably more cost-effective than Data Center GPUs. While they may not be the ideal choice for extensive and large model training tasks, they are powerful enough for the inference of most AI models. With the right design and implementation, we can build a high-throughput, reliable and cost-effective system on top of them, offering massive inference services.

If your requirement involves hundreds of GPUs for large-scale inference or handling batch jobs on global networks, or you need tens of different GPU types within minutes for testing or research purposes, SaladCloud is the ideal platform.