How to run cog applications on SaladCloud

Introduction to Cog: Containers for Machine Learning

Cog is an open-source tool designed to streamline the creation of inference applications for various AI models. It offers CLI tools, Python modules for prediction and fine-tuning, and an HTTP prediction server powered by FastAPI, letting you package models in a standard, production-ready container.

When using the Cog HTTP prediction server, the main tasks involve defining two Python functions: one for loading models and initialization, and another for performing inference. The server manages all other aspects such as input/output, logging, health checks, and exception handling. It supports synchronous prediction, streaming output, and asynchronous prediction via webhooks. Its health-check feature is robust, offering various server statuses (STARTING, READY, BUSY, and FAILED) to ensure operational reliability.

Some applications primarily use the Cog HTTP prediction server for easy implementation, while others also leverage its CLI tools to manage container images. By defining your environment with a ‘cog.yaml’ file and using Cog CLI tools, you can automatically generate a container image following best practices. This approach eliminates the need to write a Dockerfile from scratch, though it requires learning how to configure the cog.yaml file effectively.

Running cog applications on SaladCloud

All Cog-based applications can be easily run on SaladCloud, enabling you to build a massive, elastic and cost-effective AI inference system across SaladCloud’s global, high-speed network in just minutes. Here are the main scenarios and suggested approaches. You can find these described in detail on Salad’s documentation page.

| No. | Scenario | Description |
|---|---|---|
| 1 | Deploy the Cog-based images directly on SaladCloud | Run the images without any modifications. If a load balancer is in place for inbound traffic, override the ENTRYPOINT and CMD settings of the images by using SaladCloud Portal or APIs, and configure the Cog HTTP prediction server to use IPv6 before starting the server. |
| 2 | Build a wrapper image for SaladCloud based on an existing Cog-based image | Create a new Dockerfile without needing to modify the original Dockerfile. Introduce new features and incorporate IPv6 support if applications need to process inbound traffic through a load balancer. |
| 3 | Build an image using Cog HTTP prediction server for SaladCloud | Use only the Cog HTTP prediction server without its CLI tools. Directly work with the Dockerfile for flexible and precise control over the construction of the image. |
| 4 | Build an image using both Cog CLI tools and HTTP prediction server for SaladCloud | Use Cog CLI tools and the cog.yaml file to manage the Dockerfile and image. |

Scenario 1: Deploy the Cog-based images directly on SaladCloud

All Cog-based images can directly run on SaladCloud without any modifications, and you can leverage a load balancer or a job queue along with Cloud Storage for input and output.

SaladCloud requires applications to listen on an IPv6 port for inbound traffic, while the Cog HTTP prediction server currently listens only on IPv4, and this cannot be changed via an environment variable. If your applications need to process inbound traffic through a load balancer on SaladCloud, you therefore need to configure the Cog server for IPv6 when running the images.

SaladCloud Portal and APIs offer the capability to override the ENTRYPOINT and CMD settings of an image at runtime. This allows configuring the Cog server to use IPv6 with a designated command before starting the server.
For detailed steps, please refer to the guide [https://docs.salad.com/container-engine/guides/run-cog#scenario-1-deploy-the-cog-based-images-directly-on-saladcloud], where we use two images built by Replicate, BLIP and Whisper, as examples, and provide a walkthrough to run these Cog-based images directly on SaladCloud.

Scenario 2: Build a wrapper image for SaladCloud based on an existing Cog-based image

If you want to introduce new features, such as adding an I/O worker to the Cog HTTP prediction server, you can create a wrapper image based on an existing Cog-based image, without needing to modify its original Dockerfile. In the new Dockerfile, you can begin with the original image, introduce additional features and then incorporate IPv6 support if a load balancer is required.

There are multiple approaches when working directly with the Dockerfile: you can execute a command to configure the Cog server for IPv6 during the image build process, or you can include a relay tool like socat to facilitate IPv6 to IPv4 routing. For detailed instructions, please consult the guide [https://docs.salad.com/container-engine/guides/run-cog#scenario-2-build-a-wrapper-image-for-saladcloud-based-on-an-existing-cog-based-image].

Scenario 3: Build an image using Cog HTTP prediction server for SaladCloud

Using only the Cog HTTP prediction server without its CLI tools is feasible if you are comfortable writing a Dockerfile directly. This method offers flexible and precise control over the construction of the image.

You can refer to this guide [https://docs.salad.com/container-engine/guides/deploy-blip-with-cog]: it provides the steps to leverage LAVIS, a Python Library for Language-Vision Intelligence, and Cog HTTP prediction server to create a BLIP image from scratch, and build a publicly accessible and scalable inference endpoint on SaladCloud, capable of handling various image-to-text tasks.
Scenario 4: Build an image using both Cog CLI tools and HTTP prediction server for SaladCloud

If you prefer using Cog CLI tools to manage the Dockerfile and image, you can still directly build an image with socat support in case a load balancer is needed for inbound traffic. Please refer to this guide [https://docs.salad.com/container-engine/gateway/enabling-ipv6#ipv6-with-cog-through-socat] for more information. Alternatively, you can use the approaches described in Scenario 1 or Scenario 2 to add IPv6 support later.

SaladCloud: The most affordable GPU Cloud for massive AI inference

The open-source Cog simplifies the creation of AI inference applications with minimal effort. When deploying these applications on SaladCloud, you can harness the power of a massive pool of consumer-grade GPUs and SaladCloud’s global, high-speed network to build a highly efficient, reliable, and cost-effective AI inference system. If you are overpaying for APIs or need compute that’s affordable & scalable, this approach lets you switch workloads to Salad’s distributed cloud with ease.
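To make the health-check behaviour described in the introduction more concrete, here is a minimal sketch of a client that polls a running Cog server before sending work to it. It assumes the server is reachable at http://localhost:5000 and exposes a GET /health-check endpoint that returns JSON with a "status" field. The base URL, the endpoint path and the helper names are assumptions for illustration, not details taken from this article, and the exact status strings may differ between Cog versions.

```python
import json
import urllib.request

# Assumption: the Cog HTTP prediction server is listening on this address.
COG_BASE_URL = "http://localhost:5000"


def parse_status(body: bytes) -> str:
    """Extract the status string (e.g. STARTING, READY, BUSY) from a JSON body."""
    payload = json.loads(body)
    return str(payload.get("status", "UNKNOWN"))


def can_accept_work(status: str) -> bool:
    """Only a READY server should be sent a new prediction request."""
    return status == "READY"


def fetch_status(base_url: str = COG_BASE_URL, timeout: float = 5.0) -> str:
    """Query the (assumed) health-check endpoint and return the reported status."""
    with urllib.request.urlopen(f"{base_url}/health-check", timeout=timeout) as resp:
        return parse_status(resp.read())
```

A load balancer probe or an I/O worker could call fetch_status() in a loop and only forward requests once can_accept_work() returns True.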

Civitai powers 10 Million AI images per day with Salad’s distributed cloud

Civitai: The Home of Open-Source Generative AI

“Our mission is rooted in the belief that AI resources should be accessible to all, not monopolized by a few” – Justin Maier, Founder & CEO of Civitai.

With an average of 26 Million visits per month, 10 Million users & more than 200,000 open-source models & embeddings, Civitai is definitely fulfilling their mission of making AI accessible to all. Launched in November 2022, Civitai is one of the largest generative AI communities in the world today, helping users discover, create & share open-source, AI-generated media content easily.

In Sep 2023, Civitai launched their Image Generator, a web-based interface for Stable Diffusion & one of the most used products on the platform today. This product allows users to input text prompts and receive image outputs. All the processing is handled by Civitai, requiring hundreds of cloud GPUs suitable for Stable Diffusion.

Civitai’s challenge: Growing compute at scale without breaking the bank

Civitai’s explosive growth, focus on GPU-hungry AI-generated media & the new image generator brought about big infrastructure challenges: continuing with their current infrastructure provider and high-end GPUs would mean an exorbitant cloud bill, not to mention the scarcity of high-end GPUs.

Democratized AI-media creation meets democratized computing on Salad

To solve these challenges, Civitai partnered with Salad, a distributed cloud for AI/ML inference at scale. Like Civitai, Salad’s mission also lies in democratization – of cloud computing. SaladCloud is a fully people-powered cloud with 1 Million+ contributors on the network and 10K+ GPUs at any time. With over 100 Million consumer GPUs in the world lying unused for 18-22 hrs a day, Salad is on a mission to activate the largest pool of compute in the world for the lowest cost. Every day, thousands of voluntary contributors securely share compute resources with businesses like Civitai in exchange for rewards & gift cards.
“For the past few months, Civitai has been at the forefront of Salad’s ambitious project, utilizing Salad’s distributed network of GPUs to power our on-site image generator. This partnership is more than just a technical alliance; it’s a testament to what we can achieve when we harness the power of community, democratization and shared goals”, says Chris Adler, Head of Partnerships at Civitai. Civitai’s partnership with Salad helped manage the scale & cost of their inference while supporting millions of users and model combinations on Salad’s unique distributed infrastructure. “By switching to Salad, Civitai is now serving inference on over 600 consumer GPUs to deliver 10 Million images per day and training more than 15,000 LoRAs per month” – Justin Maier, Civitai Scaling to hundreds of affordable GPUs on Salad Running Stable Diffusion at scale requires access to and effectively managing hundreds of GPUs, especially in the midst of a GPU-shortage. Also important is understanding the desired throughput to determine capacity needs and estimated operating cost for any infrastructure provider. As discussed in this blog, expensive, high-end GPUs like the A100 & H100 are perfect for training but when serving AI inference at scale for use cases like text-to-image, the cost-economics break down. You get better cost-performance on consumer-grade GPUs generating 4X-8X more images per dollar compared to AI-focused GPUs. With Salad’s network of thousands of Stable Diffusion compatible consumer GPUs, Civitai had access to the most cost effective GPUs, ready to keep up with the demands of its growing user base. Managing Hundreds of GPUs As Civitai’s Stable Diffusion deployment scaled, manually managing each individual instance wasn’t an option. Salad’s Solutions Team worked with Civitai to design an automated approach that can respond to changes in GPU demand and reduce the risk of human error. 
By leveraging our fully-managed container service, Civitai ensures that each and every instance of their application will run and perform consistently, providing a reliable, repeatable, and scalable environment for their production workloads. When demand changes, Civitai can simply scale up or down the number of replicas using the portal or our public API, further automating the deployment. Using Salad’s public API, Civitai monitors model usage and analyzes the queues, customizing their auto scaling rules to optimize both performance and cost. “Salad not only had the lowest prices in the market for image generation but also offered us incredible scalability. When we needed 200+ GPUs due to a surge in demand, Salad easily met that demand. Plus their technical support has been outstanding” – Justin Maier, Civitai Supporting Millions of unique model combinations on Salad at low cost Civitai’s image generation product supports millions of unique combinations of checkpoints, Low-Rank Adaptations (LoRAs), Variational Autoencoders (VAEs), and Textual Inversions. Users often combine these into a single image generation request. In order to efficiently manage these models on SaladCloud, Civitai combines a robust set of APIs and business logic with a custom container designed to respond dynamically to the image generation demands of their community. At its core, the Civitai image generation product is built around connecting queues with a custom Stable Diffusion container on Salad. This allows the system to gracefully handle surges in image generation requests and millions of unique combinations of models. Each container includes a Python Worker that communicates with Civitai’s Orchestrator. The Worker application is responsible for downloading models, automating image generation with a sequence of calls to a custom image generation pipeline, and uploading resulting images back to Civitai. 
By building a generic application that is controlled by the Civitai Orchestrator, the overall system automatically responds to the latest trending models and eliminates the need to manually deploy individual models. If an image generation request is received for a combination of models that are already loaded on one or more nodes, the worker will process that request as soon as the GPU is available. If the request is for a combination of models that are not currently loaded into a worker, the job is queued up until the models are downloaded and loaded, then the job is processed. Civitai & Salad – A perfect match for democratizing AI “We chose

AI Batch Transcription Benchmark: Transcribing 1 Million+ Hours of Videos in just 7 days for $1800

AI batch transcription benchmark: Speech-to-text at scale Building upon the inference benchmark of Parakeet TDT 1.1B for YouTube videos on SaladCloud and with our ongoing efforts to enhance the system architecture and implementation for batch jobs, we successfully transcribed over 66,000 hours of YouTube videos using a Salad container group consisting of 100 replicas running for 10 hours. Through this approach, we achieved a cost reduction of 1000-fold while maintaining the same level of accuracy as managed transcription services. In this deep dive, we will delve into what the system architecture, performance/throughput, time and cost would look like if we were to transcribe 1 Million hours of YouTube videos. Prior to the test, we created the dataset based on publicly available videos on YouTube. This dataset comprises over 4 Million video URLs sourced from more than 5000 YouTube channels, amounting to approximately 1.6 million hours of content. For detailed methods on collecting and processing data from YouTube on SaladCloud, as well as the reference design and example code, please refer to the guide. System architecture for AI batch transcription pipeline The transcription pipeline comprises: Job Injection Strategy and Job Queue Settings The provided job filler supports multiple job injection strategies. It can inject millions of hours of video URLs to the job queue instantly and remains idle until the pipeline completes all tasks. However, a potential issue with this approach arises when certain nodes in the pipeline experience downtime and fail to process and remove jobs from the queue. Consequently, these jobs may reappear for other nodes to attempt processing, potentially causing earlier injected jobs to be processed last, which may not be suitable for certain use cases. For this test, we used a different approach: initially, we injected a large batch of jobs into the pipeline every day and monitored progress. 
When the queue neared emptiness, we started injecting only a few jobs, with the goal of keeping the number of available jobs in the queue as low as possible for a period of time. This strategy allows us to prioritize completing older jobs before injecting a massive influx of new ones.

For time-sensitive tasks, we can also implement autoscaling. By continually monitoring the job count in the queue, the job filler dynamically adjusts the number of Salad node groups. This adaptive approach ensures that specific quantities of tasks can be completed within a predefined timeframe while also offering the flexibility to manage costs efficiently during periods of reduced demand.

For the job queue system, we set the AWS SQS Visibility Timeout to 1 hour. This allows sufficient time for downloading, chunking, buffering, and processing by most of the nodes in SaladCloud until final results are merged and uploaded to Cloudflare. If a node fails to process and remove polled jobs within the hour, the jobs become available again for other nodes to process. Additionally, the AWS SQS Retention Period is set to 2 days. Once a message has been in the queue longer than the retention period, it is automatically deleted. This measure prevents jobs from lingering in the queue for an extended period without being processed for any reason, thereby avoiding wastage of node resources.

Enhanced node implementation

The transcription for audio involves resource-intensive operations on both CPU and GPU, including format conversion, re-sampling, segmentation, transcription and merging. The more CPU operations involved, the lower the GPU utilization experienced.

Within each node in the GPU resource pool on SaladCloud, we follow best practices by utilizing two processes:

The inference process concentrates on GPU operations and runs on a single thread. It begins by loading the model, warming up the GPU, and then listens on a TCP port by running a Python/FastAPI app on a Uvicorn server. Upon receiving a request, it invokes the transcription inference and promptly returns the generated assets.

The benchmark worker process primarily handles various I/O- and CPU-bound tasks, such as downloading/uploading, pre-processing, and post-processing. To maximize performance with better scalability, we adopt multiple threads to concurrently handle various tasks, with two queues created to facilitate information exchange among these threads.

Thread descriptions:

Downloader: In most cases, we require 3 threads to concurrently pull jobs from the job queue and download audio files from YouTube, and efficiently feed the inference server. It also performs the following pre-processing steps:
1) Removal of bad audio files.
2) Format conversion from Mp4a to MP3.
3) Chunking very long audio into 10-minute clips.
4) Metadata extraction (URL, file/clip ID, length).
The pre-processed audio files are stored in a shared folder, and their metadata are added to the transcribing queue simultaneously. To prevent the download of excessive audio files, we enforce a maximum length limit on the transcribing queue. When the queue reaches its capacity, the downloader will sleep for a while.

Caller: It reads metadata from the transcribing queue, and subsequently sends a synchronous request, including the audio filename, to the inference server. Upon receiving the response, it forwards the generated texts along with statistics, and the transcribed audio filename to the reporting queue. The simplicity of the caller is crucial as it directly influences the inference performance.

Reporter: The reporter, upon reading the reporting queue, deletes the processed audio files from the shared folder and manages post-processing tasks, including merging results and calculating real-time factor and word count. Eventually, it uploads the generated assets to Cloudflare, reports the job results to AWS DynamoDB and deletes the processed jobs from AWS SQS.
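The division of labour between the downloader, caller and reporter threads can be sketched with Python's standard threading and queue modules. This is a simplified illustration rather than the benchmark's actual code: it uses a single downloader thread instead of three, relies on a blocking put() on a bounded queue instead of sleeping when the transcribing queue is full, and replaces the HTTP call to the inference server with a hypothetical fake_transcribe() function. The queue size and all names are assumptions.

```python
import queue
import threading

MAX_PENDING_CLIPS = 4  # hypothetical cap on the transcribing queue
STOP = object()        # sentinel telling the next thread to shut down


def fake_transcribe(clip_name: str) -> str:
    """Stand-in for the synchronous request to the inference server."""
    return f"text for {clip_name}"


def downloader(jobs, transcribing_q):
    """Pretend to download and pre-process each job, then enqueue its clip."""
    for job in jobs:
        # put() blocks while the queue is full, which throttles downloading.
        transcribing_q.put(f"{job}.mp3")
    transcribing_q.put(STOP)


def caller(transcribing_q, reporting_q):
    """Send one clip at a time to the inference step and forward the result."""
    while True:
        clip = transcribing_q.get()
        if clip is STOP:
            reporting_q.put(STOP)
            return
        reporting_q.put((clip, fake_transcribe(clip)))


def reporter(reporting_q, results):
    """Collect results; the real reporter would upload them and delete the job."""
    while True:
        item = reporting_q.get()
        if item is STOP:
            return
        clip, text = item
        results[clip] = text


def run_pipeline(jobs):
    """Wire the three threads together with the two queues and wait for them."""
    transcribing_q = queue.Queue(maxsize=MAX_PENDING_CLIPS)
    reporting_q = queue.Queue()
    results = {}
    threads = [
        threading.Thread(target=downloader, args=(jobs, transcribing_q)),
        threading.Thread(target=caller, args=(transcribing_q, reporting_q)),
        threading.Thread(target=reporter, args=(reporting_q, results)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Calling run_pipeline(["a", "b", "c"]) returns a dictionary mapping each clip filename to its transcript. Because the caller does nothing except wait on the queue and forward results, the next clip is already prepared by the time the previous transcription returns.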
By running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks, and fetching and preparing the next audio clips concurrently and in advance while the current one is still being transcribed, we can eliminate any waiting period. After one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time for batch jobs but also leads to even more significant cost savings.

1 Million hours of YouTube video batch transcription tests on SaladCloud

We established a container group with 100 replicas, each equipped with 2 vCPU, 12 GB RAM, and a GPU with 8 GB or more VRAM on SaladCloud.

AI Transcription Benchmark: 1 Million Hours of YouTube Videos with Parakeet TDT 1.1B for Just $1260, a 1000-fold cost reduction

Building upon the inference benchmark of Parakeet TDT 1.1B on SaladCloud and with our ongoing efforts to enhance the system architecture and implementation for batch jobs, we have achieved a 1000-fold cost reduction for AI transcription with Salad. This incredible cost-performance comes while maintaining the same level of accuracy as other managed transcription services. YouTube is the world’s most widely used video-sharing platform, featuring a wealth of public content, including talks, news, courses, and more. There might be instances where you need to quickly understand updates of a global event or summarize a topic, but you may not be able to watch videos individually. In addition, the millions of YouTube videos are a gold-mine of training data for many AI applications. Many companies have a need to do large-scale, AI transcription in batch today but cost is a prohibiting factor. In this deep dive, we will utilize publicly available YouTube videos as datasets and the high-speed ASR (Automatic Speech Recognition) model – Parakeet TDT 1.1B, and explore methods for constructing a batch-processing system for large-scale AI transcription of videos, using the substantial computational power of SaladCloud’s massive network of consumer GPUs across a global, high-speed distributed network. How to download YouTube videos for batch AI transcription The Python library, pytube, is a lightweight tool designed for handling YouTube videos, that can simplify our tasks significantly. Firstly, pytube offers the APIs for interacting with YouTube playlists, which are collections of videos usually organized around specific themes. Using the APIs, we can retrieve all the video URLs within a specific playlist. Secondly, prior to downloading a video, we can access its metadata, including details such as the title, video resolution, frames per second (fps), video codec, audio bit rate (abr), and audio codec, etc. 
If a video on YouTube supports an audio codec, we can enhance efficiency by exclusively downloading its audio. This approach not only reduces bandwidth requirements but also results in substantial time savings, given that the video size is typically ten times larger than its corresponding audio.

Below is the code snippet for downloading from YouTube:

The audio files downloaded from YouTube primarily utilize the MPEG-4 audio (Mp4a) file format, commonly employed for streaming large audio tracks. We can convert these audio files from Mp4a to MP3, a format universally accepted by all ASR models.

Additionally, the duration of audio files sourced from YouTube exhibits considerable variation, ranging from a few minutes to tens of hours. To leverage massive and cost-effective GPU types, as well as to optimize GPU resource utilization, it is essential to segment all lengthy audio into fixed-length clips before inputting them into the model. The results can then be aggregated before returning the final transcription.

Advanced system architecture for massive video transcription

We can reuse our existing system architecture for audio transcription with a few enhancements:

In a long-running batch-job system, implementing auto scaling becomes crucial. By continuously monitoring the job count in the message queue, we can dynamically adjust the number of Salad nodes or groups. This adaptive approach allows us to respond effectively to variations in system load, providing the flexibility to efficiently manage costs during lower demand periods or enhance throughput during peak loads.

Enhanced node implementation for both video and audio AI transcription

Modifications have been made to the node implementation, enabling it to handle both video and audio for AI transcription. The inference process remains unchanged, running on a single thread and dedicated to GPU-based transcription.
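The segmentation and aggregation step described above (cutting long audio into fixed-length clips, then merging the per-clip results) can be illustrated with a small sketch. It only computes clip boundaries and joins texts; the actual audio cutting and format conversion are not shown. The 600-second default and the function names are assumptions chosen for illustration, not values stated in this section.

```python
def clip_boundaries(duration_s: float, clip_s: float = 600.0) -> list[tuple[float, float]]:
    """Return (start, end) offsets in seconds that cover the whole audio file."""
    if duration_s < 0 or clip_s <= 0:
        raise ValueError("duration must be non-negative and clip length positive")
    bounds = []
    start = 0.0
    while start < duration_s:
        # The last clip is shorter when the duration is not a multiple of clip_s.
        end = min(start + clip_s, duration_s)
        bounds.append((start, end))
        start = end
    return bounds


def merge_transcripts(parts: list[str]) -> str:
    """Join per-clip transcripts in order, skipping empty results."""
    return " ".join(part.strip() for part in parts if part.strip())
```

For a 25-minute (1,500-second) file this yields two full 600-second clips followed by one 300-second clip, and the three transcripts are merged back in the same order.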
We have introduced additional features in the benchmark worker process, specifically designed to handle I/O and CPU-bound tasks and running multiple threads: Running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks provides the flexibility to update each component independently. Introducing multiple threads in the benchmark worker process to handle different tasks eliminates waiting periods by fetching and preparing the next audio clips in advance while the current one is still being transcribed. Consequently, as soon as one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time and increases system throughput but also results in more significant cost savings. Massive YouTube video transcription tests on SaladCloud We created a container group with 100 replicas (2vCPU and 12 GB RAM with 20+ different GPU types) in SaladCloud. The group was operational for approximately 10 hours, from 10:00 pm to 8:00 am PST during weekdays, successfully downloading and transcribing a total of 68,393 YouTube videos. The cumulative length of these videos amounted to 66,786 hours, with an average duration of 3,515 seconds. Hundreds of Salad nodes from worldwide networks actively engaged in the tasks. They are all positioned in the high-speed networks, near the edges of the YouTube Global CDN (with an average latency of 33ms). This setup guarantees local access and ensures optimal system throughput for downloading content from YouTube. According to the AWS DynamoDB metrics, specifically writes per second, which serve as a monitoring tool for transcription jobs, the system reached its maximum capacity, processing approximately 2 videos (totaling 7500 seconds) per second, roughly one hour after the container group was launched. The selected YouTube videos for this test vary widely in length, ranging from a few minutes to over 10 hours, causing notable fluctuations in the processing curve. 
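The figures reported for this test can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers quoted above (68,393 videos, an average of 3,515 seconds, 66,786 total hours, 100 replicas, roughly 10 hours of wall-clock time); the derived speed-up values are our own back-of-the-envelope estimates, not measurements from the benchmark, and the function names are illustrative.

```python
VIDEOS = 68_393
AVG_VIDEO_SECONDS = 3_515
REPORTED_TOTAL_HOURS = 66_786
REPLICAS = 100
WALL_CLOCK_HOURS = 10  # the text says "approximately 10 hours"


def hours_from_average(videos: int, avg_seconds: float) -> float:
    """Total content length implied by the video count and average duration."""
    return videos * avg_seconds / 3600


def realtime_speedup(content_hours: float, wall_clock_hours: float) -> float:
    """Hours of content processed per hour of wall-clock time."""
    return content_hours / wall_clock_hours


estimated_hours = hours_from_average(VIDEOS, AVG_VIDEO_SECONDS)
group_speedup = realtime_speedup(REPORTED_TOTAL_HOURS, WALL_CLOCK_HOURS)
per_replica_speedup = group_speedup / REPLICAS

print(f"hours implied by the average duration: {estimated_hours:,.0f}")
print(f"group throughput: {group_speedup:,.1f}x real time")
print(f"per-replica throughput: {per_replica_speedup:.1f}x real time")
```

The count and average duration imply a total within a few hours of the reported 66,786, and the whole group averaged several thousand hours of video per wall-clock hour, which is in the same range as the reported peak of about 7,500 seconds of video per second.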
Let’s compare the results of the two benchmark tests conducted on Parakeet TDT 1.1B for audio and video:

| | Parakeet Audio | Parakeet Video |
|---|---|---|
| Datasets | English CommonVoice and Spoken Wikipedia Corpus | English YouTube videos, including public talks, news and courses |
| Average Input Length (s) | 12 | 3515 |
| Cost on SaladCloud (GPU Resource Pool and Global Distribution Network) | Around $100. 100 Replicas (2 vCPU, 12 GB RAM, 20+ GPU types) for 10 hours | Around $100. 100 Replicas (2 vCPU, 12 GB RAM, 20+ GPU types) for 10 hours |
| Cost on AWS and Cloudflare (Job Queue/Recording System and Cloud Storage) | Around $20 | Around $2 |
| Node Implementation | 3 downloader threads; Segmentation of long audio; Merging texts. | Download audio from YouTube playlists and videos; 3 downloader threads; Segmentation of long audio; Format conversion from Mp4a to MP3; Merging texts. |
| Number of Transcription | 5,209,130 | 68,393 |

Total

Text-to-Speech (TTS) API Alternative: Self-Managed OpenVoice vs MetaVoice Comparison

A cost-effective alternative to Text-to-speech APIs

In the realm of text-to-speech (TTS) technology, two open-source models have recently garnered everyone’s attention: OpenVoice and MetaVoice. Each model has unique capabilities in voice synthesis, but both were recently open sourced. We conducted benchmarks for both models on SaladCloud showing a world of efficiency and cost-effectiveness, highlighting the platform’s ability to democratize advanced voice synthesis technologies. The benchmarks focused on self-managed OpenVoice and MetaVoice as a far cheaper alternative to popular text-to-speech APIs.

In this article, we will delve deeper into each of these models, exploring their distinctive features, capabilities, price, speed, quality and how they can be used in real-world applications. Our goal is to provide a comprehensive understanding of these technologies, enabling you to make informed decisions about which model best suits your voice synthesis requirements. If you are serving TTS inference at scale, utilizing a self-managed, open-source model framework on a distributed cloud like Salad is 50-90% cheaper compared to APIs.

Efficiency and affordability on Salad’s distributed cloud

Recently, we benchmarked OpenVoice and MetaVoice on SaladCloud’s global network of distributed GPUs. Tapping into thousands of latent consumer GPUs, Salad’s GPU prices start from $0.02/hour. With more than 1 Million PCs on the network, Salad’s distributed infrastructure provides the computational power needed to process large datasets swiftly, while its cost-efficient pricing model ensures that businesses can leverage these advanced technologies without breaking the bank.

Running OpenVoice on Salad comes out to be 300 times cheaper than Azure Text to Speech service. Similarly, MetaVoice on Salad is 11X cheaper than AWS Polly Long Form.
A common thread: Open Source Text-to-Speech innovation

OpenVoice TTS, OpenVoice Cloning, and MetaVoice share a foundational principle: they are all open-source text-to-speech models. These models are not only free to use but also offer transparency in their development processes. With access to the source code, developers and researchers can inspect it, contribute improvements, and customize the models to fit their specific needs, driving innovation in the TTS domain.

A closer look at each model: OpenVoice and MetaVoice

OpenVoice is an open-source, instant voice cloning technology that enables the creation of realistic and customizable speech from just a short audio clip of a reference speaker. Developed by MyShell.ai, OpenVoice stands out for its ability to replicate the voice’s tone color while offering extensive control over various speech attributes such as emotion and rhythm. The OpenVoice voice replication process involves several key steps that can be used either together or separately:

OpenVoice Base TTS

OpenVoice’s base Text-to-Speech (TTS) engine is a cornerstone of its framework, efficiently transforming written text into spoken words. This component is particularly valuable in scenarios where the primary goal is text-to-speech conversion without the need for specific voice toning or cloning. The ease with which this part of the model can be isolated and utilized independently makes it a versatile tool, ideal for applications that demand straightforward speech synthesis.

OpenVoice Benchmark: 6 Million+ words per $ on Salad

OpenVoice Cloning

Building upon the base TTS engine, this feature adds a layer of sophistication by enabling the replication of a reference speaker’s unique vocal characteristics.
This includes the extraction and embodiment of tone color, allowing for the creation of speech that not only sounds natural but also carries the emotional and rhythmic nuances of the original speaker. OpenVoice’s cloning capabilities extend to zero-shot cross-lingual voice cloning, a remarkable feature that allows for the generation of speech in languages not present in the training dataset. This opens up a world of possibilities for multilingual applications and global reach.

MetaVoice-1B

MetaVoice-1B is a robust 1.2 billion parameter base model, trained on an extensive dataset of 100,000 hours of speech. Its design is focused on achieving natural-sounding speech with an emphasis on emotional rhythm and tone in English. A standout feature of MetaVoice-1B is its zero-shot cloning capability for American and British voices, requiring just 30 seconds of reference audio for effective replication. The model also supports cross-lingual voice cloning with fine-tuning, showing promising results with as little as one minute of training data for Indian speakers. MetaVoice-1B is engineered to capture the nuances of emotional speech, ensuring that the synthesized output resonates with listeners on a deeper level.

MetaVoice Benchmark: 23,300 words per $ on Salad

Benchmark results: Price comparison of voice synthesis models on SaladCloud

The following table presents the results of our benchmark tests, where we ran the models OpenVoice TTS, OpenVoice Cloning, and MetaVoice on SaladCloud GPUs. For consistency, we used the text from Isaac Asimov’s book “Robots and Empire”, available on the Internet Archive, comprising approximately 150,000 words, and processed it through all compatible Salad GPUs.

| Model Name | Most Cost-Efficient GPU | Words per Dollar | Second Most Cost-Efficient GPU | Words per Dollar |
| --- | --- | --- | --- | --- |
| OpenVoice TTS | RTX 2070 | 6.6 million | GTX 1650 | 6.1 million |
| OpenVoice Cloning | GTX 1650 | 4.7 million | RTX 2070 | 4.02 million |
| MetaVoice | RTX 3080 | 23,300 | RTX 3080 Ti | 15,400 |

Table: Comparison of OpenVoice Text-to-Speech, OpenVoice Cloning and MetaVoice

The benchmark results clearly indicate that OpenVoice, both in its TTS and Cloning variants, is significantly more cost-effective than MetaVoice. The OpenVoice TTS model, when run on an RTX 2070 GPU, achieves an impressive 6.6 million words per dollar, making it the most efficient option among the tested models. The price of an RTX 2070 on SaladCloud is $0.06/hour, which together with the vCPU and RAM we used brought the total to $0.072/hour. OpenVoice Cloning also demonstrates strong cost efficiency, particularly on the GTX 1650, which processes 4.7 million words per dollar. This is a notable advantage for applications requiring a less robotic voice. In contrast, MetaVoice’s performance on the RTX 3080 and RTX 3080 Ti GPUs yields significantly fewer words per dollar, indicating a higher cost for processing speech. However, don’t rush to dismiss MetaVoice just yet; upcoming comparisons may offer a different perspective that could sway your opinion.
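The words-per-dollar figures follow directly from throughput and hourly price. The sketch below is a minimal illustration, assuming the roughly 132.7 words per second reported for the RTX 2070 in the OpenVoice TTS benchmark and the $0.072/hour total node price quoted above. The function name is ours and is not part of any benchmark code.

```python
def words_per_dollar(words_per_second: float, price_per_hour: float) -> float:
    """Words synthesized for one dollar of node time."""
    words_per_hour = words_per_second * 3600
    return words_per_hour / price_per_hour


# RTX 2070 running OpenVoice TTS: ~132.7 words/s at $0.072/hour
# (GPU price plus the vCPU and RAM used in the benchmark).
rtx_2070 = words_per_dollar(132.7, 0.072)
print(f"{rtx_2070:,.0f} words per dollar")  # roughly 6.6 million
```

The same function can be applied to any GPU type once its measured throughput and hourly price are known.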

MetaVoice AI Text-to-Speech (TTS) Benchmark: Narrate 100,000 words for only $4.29 on Salad

Note: Do not miss the voice clones of 10 different celebrities reading Harry Potter and the Sorcerer’s Stone towards the end of the blog.

Introduction to MetaVoice-1B

MetaVoice-1B is an advanced text-to-speech (TTS) model with 1.2 billion parameters, trained on a corpus of 100,000 hours of speech. Engineered with a focus on producing emotionally resonant English speech rhythms and tones, MetaVoice-1B stands out for its accuracy and realistic voice synthesis. One standout feature of MetaVoice-1B is its ability to perform zero-shot voice cloning. This feature requires only a 30-second audio snippet to accurately replicate American and British voices. It also includes cross-lingual cloning capabilities, demonstrated with as little as one minute of training data for Indian accents. A versatile tool released under the permissive Apache 2.0 license, MetaVoice-1B is designed for long-form synthesis.

The architecture of MetaVoice-1B

MetaVoice-1B combines causal GPT structures and non-causal transformers to predict a series of hierarchical EnCodec tokens from text and speaker information. This process includes condition-free sampling, which enhances the model’s cloning proficiency. The text is processed through a custom-trained BPE tokenizer, so the model does not need to predict semantic tokens, a step often deemed necessary in similar technologies.

MetaVoice cloning benchmark methodology on SaladCloud GPUs

Encountered Limitations and Adaptations

During the evaluation, we encountered limitations with the maximum length of text that MetaVoice could process in one go. The default token limit is set to 2048 tokens per batch. However, we noticed that the model starts to behave unexpectedly even with fewer tokens.
To solve the limit issue, we had to preprocess our data by dividing the text into smaller segments, specifically two-sentence pieces, to accommodate the model’s capabilities. To break the text into sentences, we used the Punkt Sentence Tokenizer. The text source remained consistent with previous benchmarks: Isaac Asimov’s “Robots and Empire,” available from the Internet Archive. For the voice cloning component, we used a one-minute sample of Benedict Cumberbatch’s narration. The synthesized output very closely mirrored the distinctive qualities of Cumberbatch’s narration, demonstrating MetaVoice’s cloning capabilities, which are the best we’ve seen yet. Here is a voice cloning example featuring Benedict Cumberbatch:

GPU Specifications and Selection

MetaVoice documentation specifies the need for GPUs with VRAM of 12GB or more. Despite this, our trials included GPUs with less VRAM, some of which still performed adequately. This required a careful selection process from Salad’s GPU fleet to ensure compatibility. We standardized each node with 1 vCPU and 8GB of RAM to maintain a consistent testing environment.

Benchmarking Workflow

The benchmarking procedure incorporated multi-threaded operations to enhance efficiency. The process involved downloading parts of the text and the voice reference sample from Azure in parallel and processing the text through the MetaVoice model. To complete the cycle, the resulting audio was uploaded back to Azure. This workflow was designed to simulate a typical application scenario, providing a realistic assessment of MetaVoice’s operational performance on SaladCloud GPUs.

Benchmark Findings: Cost-Performance and Inference Speed

Words per Dollar Efficiency

Our benchmarking results reveal that the RTX 3080 GPU leads in terms of cost-efficiency for MetaVoice, achieving 23,300 words per dollar.
The RTX 3080 Ti follows with 15,400 words per dollar. These figures highlight the resource-intensive nature of MetaVoice, which requires powerful GPUs to operate efficiently.

Speed Analysis and GPU Requirements

Our speed analysis revealed that GPUs with 10GB or more VRAM performed consistently, processing approximately 0.8 to 1.2 words per second. In contrast, GPUs with lower VRAM demonstrated significantly reduced performance, rendering them unsuitable for running MetaVoice. This is broadly in line with the developers’ recommendation of using GPUs with at least 12GB VRAM to ensure optimal functionality.

Cost Analysis for an Average Book

To provide a practical perspective, let’s consider the cost of converting an average book into speech using MetaVoice on SaladCloud GPUs. Assuming an average book contains approximately 100,000 words, narrating it at the RTX 3080’s 23,300 words per dollar costs about $4.29. Creating a narration of “Harry Potter and the Sorcerer’s Stone” by Benedict Cumberbatch would cost around $3.30 with an RTX 3080 and $5.00 with an RTX 3080 Ti. Here is an example of a voice clone of Benedict Cumberbatch reading Harry Potter:

Notice that we did not change any model parameters or add business logic. We only added batch processing, sentence by sentence. We also cloned other celebrity voices to read out the first page of Harry Potter and the Sorcerer’s Stone. Here’s a collection of different voice clones reading Harry Potter using MetaVoice.

MetaVoice GPU Benchmark on SaladCloud – Conclusion

In conclusion, the combination of MetaVoice and SaladCloud GPUs presents a cost-effective and high-quality solution for text-to-speech and voice cloning projects. Whether for large-scale audiobook production or specialized projects like celebrity-narrated books, this technology offers a new level of accessibility and affordability in voice synthesis. As we move forward, it will be exciting to see how these advancements continue to shape the landscape of digital content creation.
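The book-cost figures can be reproduced from the words-per-dollar results. The sketch below is illustrative only: the function name is ours, and the 77,000-word length for “Harry Potter and the Sorcerer’s Stone” is our assumption, chosen because it is close to the commonly cited word count and reproduces the $3.30 and $5.00 figures above.

```python
def narration_cost(word_count: int, words_per_dollar: float) -> float:
    """Dollar cost of synthesizing word_count words."""
    return word_count / words_per_dollar


# Words per dollar measured for MetaVoice in this benchmark.
BENCHMARK = {"RTX 3080": 23_300, "RTX 3080 Ti": 15_400}

AVERAGE_BOOK_WORDS = 100_000
HARRY_POTTER_WORDS = 77_000  # assumption: approximate length of the first book

for gpu, wpd in BENCHMARK.items():
    average_book = narration_cost(AVERAGE_BOOK_WORDS, wpd)
    harry_potter = narration_cost(HARRY_POTTER_WORDS, wpd)
    print(f"{gpu}: average book ${average_book:.2f}, Harry Potter ${harry_potter:.2f}")
```

Under these assumptions, the 100,000-word case on the RTX 3080 comes out at about $4.29, which matches the headline figure.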

Parakeet TDT 1.1B Inference Benchmark on SaladCloud: 1,000,000 hours of transcription for Just $1260

Parakeet TDT 1.1B GPU benchmark

The Automatic Speech Recognition (ASR) model, Parakeet TDT 1.1B, is the latest addition to NVIDIA’s Parakeet family. Parakeet TDT 1.1B boasts unparalleled accuracy and significantly faster performance compared to other models in the same family. Using our latest batch-processing framework, we conducted comprehensive tests with Parakeet TDT 1.1B against extensive datasets, including English CommonVoice and Spoken Wikipedia Corpus English (Part1, Part2). In this detailed GPU benchmark, we will delve into the design and construction of a high-throughput, reliable and cost-effective batch-processing system within SaladCloud. Additionally, we will conduct a comparative analysis of the inference performance between Parakeet TDT 1.1B and other popular ASR models like Whisper Large V3 and Distil-Whisper Large V2.

Advanced system architecture for batch jobs

Our latest batch processing framework consists of:

HTTP handlers using AWS Lambda or Azure Functions can be implemented for both the Job Queue System and the Job Recording System. This provides convenient access, eliminating the need to install a specific cloud provider’s SDKs or CLIs within the application container image. We aimed to keep the framework components fully managed and serverless to closely simulate the experience of using managed transcription services. A decoupled architecture provides the flexibility to choose the best and most cost-effective solution for each component from the industry.

Enhanced Node Implementation for High Performance and Throughput

We have refined the node implementation to further enhance the system performance and throughput. Within each node in the GPU resource pool in SaladCloud, we follow best practices by utilizing two processes:

1) Inference Process

Transcribing audio involves resource-intensive operations on both CPU and GPU, including format conversion, re-sampling, segmentation, transcription and merging.
The more CPU operations involved, the lower the GPU utilization. While multiprocessing or multithreading-based concurrent inference over a single GPU can fully leverage the CPU, it might limit optimal GPU cache utilization and impact performance, because each inference runs at its own layer or stage. The multiprocessing approach also consumes more VRAM, as every process requires a CUDA context and loads its own model into GPU VRAM for inference. Following best practices, we delegate more CPU-bound pre-processing and post-processing tasks to the benchmark worker process. This allows the inference process to concentrate on GPU operations and run on a single thread. The process begins by loading the model and warming up the GPU, and then listens on a TCP port by running a Python/FastAPI app on a Uvicorn server. Upon receiving a request, it invokes the transcription inference and promptly returns the generated assets. Batch inference can be employed to enhance performance by effectively leveraging GPU cache and parallel processing capabilities. However, it requires more VRAM and might delay the return of the result until every single sample in the input is processed. Whether to use batch inference, and with what batch size, depends on the model, dataset, hardware characteristics and use case. This also requires experimentation and ongoing performance monitoring.

2) Benchmark Worker Process

The benchmark worker process primarily handles various I/O- and CPU-bound tasks, such as downloading/uploading, pre-processing, and post-processing. The Global Interpreter Lock (GIL) in Python permits only one thread to execute Python code at a time within a process. While the GIL can impact the performance of multithreaded applications, certain operations remain unaffected, such as I/O operations and calling external programs.
To maximize performance with better scalability, we adopt multiple threads to concurrently handle various tasks, with several queues created to facilitate information exchange among these threads.

| Thread | Description |
| --- | --- |
| Downloader | In most cases, we require 2 to 3 threads to concurrently pull jobs and download audio files, and efficiently feed the inference pipeline while preventing the download of excessive audio files. The actual number depends on the characteristics of the application and dataset, as well as network performance. It also performs the following pre-processing steps: 1) Removal of bad audio files. 2) Format conversion and re-sampling. 3) Chunking very long audio into 15-minute clips. 4) Metadata extraction (URL, file/clip ID, length). The pre-processed audio files and their corresponding metadata JSON files are stored in a shared folder. Simultaneously, the filenames of the JSON files are added to the transcribing queue. |
| Caller | It reads a JSON filename from the transcribing queue, retrieves the metadata by reading the corresponding file in the shared folder, and subsequently sends a synchronous request, including the audio filename, to the inference server. Upon receiving the response, it forwards the generated texts along with statistics to the reporting queue, while simultaneously sending the transcribed audio and JSON filenames to the cleaning queue. The simplicity of the caller is crucial as it directly influences the inference performance. |
| Reporter | The reporter, upon reading the reporting queue, manages post-processing tasks, including merging results and format conversion. Eventually, it uploads the generated assets and reports the job results. Multiple threads may be required if the post-processing is resource-intensive. |
| Cleaner | After reading the cleaning queue, the cleaner deletes the processed audio files and their corresponding JSON files from the shared folder. |
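The thread-and-queue layout described above can be sketched with Python’s standard library. This is a simplified illustration rather than the benchmark’s actual code: it uses a single downloader thread instead of 2 to 3, `fake_transcribe` is a hypothetical stand-in for the synchronous HTTP request to the inference server, and the download, upload and file-deletion steps are reduced to comments.

```python
import queue
import threading

STOP = object()  # sentinel that tells a consumer its queue is finished


def fake_transcribe(clip: str) -> str:
    """Hypothetical stand-in for the synchronous call to the inference server."""
    return f"transcript of {clip}"


def downloader(jobs, transcribing_q):
    for clip in jobs:
        # Real worker: pull a job, download the audio, pre-process it.
        transcribing_q.put(clip)  # blocks when the queue is full
    transcribing_q.put(STOP)


def caller(transcribing_q, reporting_q, cleaning_q):
    while (clip := transcribing_q.get()) is not STOP:
        text = fake_transcribe(clip)
        reporting_q.put((clip, text))
        cleaning_q.put(clip)
    reporting_q.put(STOP)
    cleaning_q.put(STOP)


def reporter(reporting_q, results):
    while (item := reporting_q.get()) is not STOP:
        clip, text = item
        results[clip] = text  # real worker: upload assets, report the job result


def cleaner(cleaning_q, cleaned):
    while (clip := cleaning_q.get()) is not STOP:
        cleaned.append(clip)  # real worker: delete the audio and JSON files


def run_pipeline(jobs):
    # A bounded transcribing queue keeps the downloader from running too far ahead.
    transcribing_q = queue.Queue(maxsize=2)
    reporting_q, cleaning_q = queue.Queue(), queue.Queue()
    results, cleaned = {}, []
    threads = [
        threading.Thread(target=downloader, args=(jobs, transcribing_q)),
        threading.Thread(target=caller, args=(transcribing_q, reporting_q, cleaning_q)),
        threading.Thread(target=reporter, args=(reporting_q, results)),
        threading.Thread(target=cleaner, args=(cleaning_q, cleaned)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results, cleaned


results, cleaned = run_pipeline(["a.wav", "b.wav", "c.wav"])
print(results)
print(cleaned)
```

The bounded queue is one simple way to model "preventing the download of excessive audio files": the downloader blocks once two clips are waiting, so prefetching stays just ahead of the caller.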
Running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks, and fetching and preparing the next audio clips concurrently and in advance while the current one is still being transcribed, eliminates any waiting period. After one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time for batch jobs but also leads to even more significant cost savings.

Single-Node Test using JupyterLab on SaladCloud

Before deploying the application container image on a large scale in SaladCloud, we can build a specialized application image with JupyterLab and conduct the single-node test across various types of Salad nodes. With JupyterLab’s terminal, we can log into a container instance running on SaladCloud, gaining OS-level access. This enables us to conduct various tests and optimize the configurations and parameters of the model and application. These include:

Analysis of single-node test using JupyterLab

Based on our tests using JupyterLab, we found that the inference of Parakeet TDT 1.1B for audio files lasting

Inference Benchmark on Salad: Distil-Whisper Large V2 vs. Whisper Large V3 for Speech-to-text

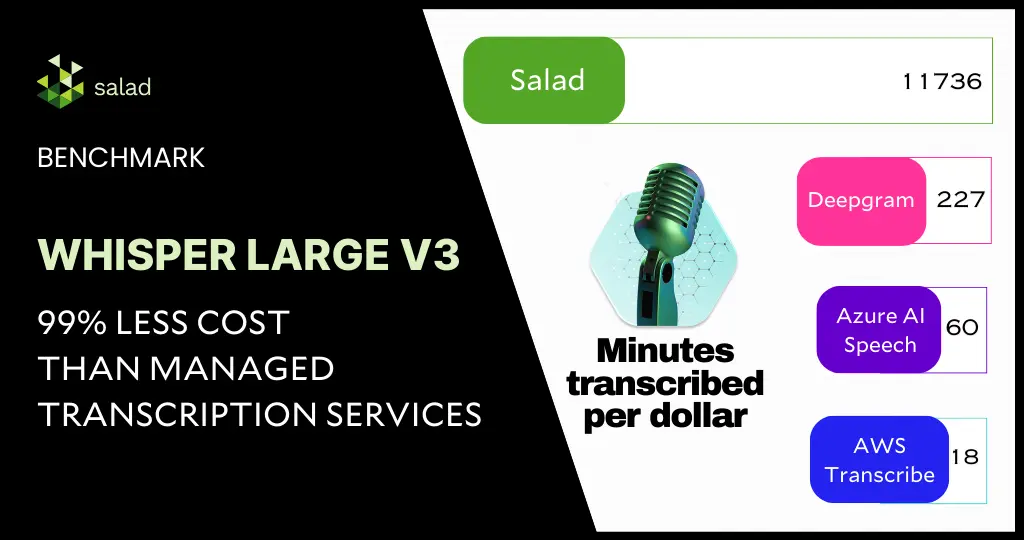

Hugging Face Distil-Whisper Large V2 is a distilled version of the OpenAI Whisper model that is 6 times faster, 49% smaller and performs within 1% WER (word error rate) on out-of-distribution evaluation sets. However, it is currently only available for English speech recognition. Building upon the insights from the previous inference benchmark of Whisper Large V3, we have conducted tests with Distil-Whisper Large V2 by using the same batch-processing infrastructure, against the same English CommonVoice and Spoken Wikipedia Corpus English (Part1, Part2) datasets. Let’s explore the distinctions between these two automatic speech recognition models in terms of cost and performance, and see which one better fits your needs.

Batch-Processing Framework

We used the same batch processing framework to test the two models, consisting of:

Advanced Batch-Processing Architecture for Massive Transcription

We aimed to adopt a decoupled architecture that provides the flexibility to choose the best and most cost-effective solution for each component from the industry. At the same time, we strove to keep the framework components fully managed and serverless to closely simulate the experience of using managed transcription services. Furthermore, each node runs two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks, and fetches the next audio clips while the current one is still being transcribed, which eliminates any waiting period. After one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time for batch jobs but also leads to even more significant cost savings. We also offer a data exploration tool, a Jupyter notebook, designed to assist in preparing and analyzing inference benchmarks for various recognition models running on SaladCloud.
Discover our open-source code for a deeper dive: Implementation of Inference and Benchmark Worker, Docker Images, Data Exploration Tool

Massive Transcription Tests for Whisper Large on SaladCloud

We launched two container groups on Salad, with each one dedicated to one of the two models. Each group was configured with 100 replicas (2 vCPU and 12 GB RAM with all GPU types with 8GB or more VRAM) in SaladCloud, and ran for approximately 10 hours. Here is the comparison:

| 10-hour Transcription with 100 Replicas in SaladCloud | Whisper Large V3 | Distil-Whisper Large V2 |
| --- | --- | --- |
| Number of Transcribed Audio Files | 2,364,838 | 3,846,559 |
| Total Audio Length (s) | 28,554,156 (8000 hours) | 47,207,294 (13113 hours) |
| Average Audio Length (s) | 12 | 12 |
| Cost on SaladCloud (GPU resource pool) | Around $100; 100 replicas (2 vCPU, 12GB RAM), 20 GPU types actually used | Around $100; 100 replicas (2 vCPU, 12GB RAM), 22 GPU types actually used |
| Cost on AWS and Cloudflare (Job queue/Recording system & cloud storage) | Less than $10 | Around $15 |
| Most Cost-Effective GPU Type for transcribing long audio files exceeding 30 seconds | RTX 3060: 196 hours per dollar | RTX 2080 Ti: 500 hours per dollar |
| Most Cost-Effective GPU Type for transcribing short audio files lasting less than 30 seconds | RTX 2080/3060/3060Ti/3070Ti: 47 hours per dollar | RTX 2080/3060 Ti: 90 hours per dollar |
| Best-Performing GPU Type for transcribing long audio files exceeding 30 seconds | RTX 4080: average real-time factor 40, transcribing 40 seconds of audio per second | RTX 4090: average real-time factor 93, transcribing 93 seconds of audio per second |
| Best-Performing GPU Type for transcribing short audio files lasting less than 30 seconds | RTX 3080Ti/4070Ti/4090: average real-time factor 8, transcribing 8 seconds of audio per second | RTX 4090: average real-time factor 14, transcribing 14 seconds of audio per second |
| System Throughput | Transcribing 793 seconds of audio per second | Transcribing 1311 seconds of audio per second |

Performance evaluation of the two Whisper Large models

Unlike results obtained in local tests with a few machines in a LAN, all these numbers are achieved in a global and distributed cloud environment that provides the transcription at a large scale, including the entire process from receiving requests to transcribing and sending the responses. Evidently, Distil-Whisper Large V2 outperforms Whisper Large V3 in both cost and performance. With a model size half that of Whisper, Distil-Whisper can run faster and allows a broader range of GPU types to be used. This significant reduction in cost and improvement in performance are noteworthy outcomes. If the requirement is mainly for English speech recognition, Distil-Whisper is recommended. While Distil-Whisper boasts a sixfold increase in speed compared to Whisper, the real-world tests indicate that its system throughput is only 165% of that of Whisper (1311 versus 793 seconds of audio per second). The situation is similar to a car, whose top speed depends on factors such as the engine, gears, chassis, and tires. Simply upgrading the engine to one that is 200% more powerful doesn’t assure a doubling of the maximum speed. In a global and distributed cloud environment offering services at a large scale, various factors can impact overall performance, including distance, transmission and processing delays, and model size and performance, among others. Hence, a comprehensive approach is essential for system design and implementation, encompassing use cases, business goals, resource configuration, and algorithms, among other considerations.

Performance Comparison across Different Clouds

Thanks to the open-source speech recognition model, Whisper Large V3, and the advanced batch-processing architecture harnessing hundreds of consumer GPUs on SaladCloud, we have already achieved a remarkable 500-fold cost reduction while retaining the same level of accuracy as other public cloud providers: $1 can transcribe 11,736 minutes of audio (nearly 200 hours) with the most cost-effective GPU type.
Distil-Whisper Large V2 propels us even further, delivering an incredible 1000-fold cost reduction for English speech recognition, compared to managed transcription services. This equates to $1 transcribing 29,994 minutes of audio, or nearly 500 hours. We believe these will fundamentally transform the speech recognition industry. SaladCloud: The Most Affordable GPU Cloud for Massive Audio Transcription For voice AI companies in pursuit of cost-effective and robust GPU solutions at scale, SaladCloud emerges as a game-changing solution. Boasting the market’s most competitive GPU prices, it tackles the issues of soaring cloud expenses and constrained GPU availability. In an era where cost-efficiency and performance take precedence, choosing the right tools and architecture can be transformative. Our recent Inference Benchmark of Whisper Large V3 and Distil-Whisper Large V2 exemplifies the savings and efficiency that can be achieved through innovative approaches. We encourage developers and startups

OpenVoice Text-to-Speech (TTS) Benchmark: 6 Million+ Words/$ Using Salad

What is OpenVoice?

OpenVoice is an open-source, instant voice cloning technology that enables the creation of realistic and customizable speech from just a short audio clip of a reference speaker. Developed by MyShell.ai, OpenVoice stands out for its ability to precisely replicate the voice’s tone color while offering extensive control over various speech attributes such as emotion and rhythm. It also supports zero-shot cross-lingual voice cloning, enabling the generation of speech in languages not originally included in its extensive training set. OpenVoice is not only versatile but also exceptionally efficient, requiring significantly lower computational resources than commercially available text-to-speech (TTS) APIs, often at a fraction of the cost and with superior performance. For developers and organizations interested in exploring or integrating OpenVoice, the technical report and source code are available at arXiv and GitHub.

The OpenVoice framework: An overview

The OpenVoice framework is designed to replicate human speech with remarkable accuracy and versatility. The process involves several key steps, each contributing to the creation of natural-sounding and personalized voice output. Here’s a closer look at the OpenVoice framework:

Here is an illustration from the official technical report.

Methodology of the OpenVoice Text-to-Speech (TTS) benchmark on SaladCloud GPUs

For this initial benchmark, we have focused exclusively on the Text-to-Speech (TTS) component of OpenVoice, setting the stage for a more comprehensive analysis that will include full TTS and voice cloning in a future benchmark. We used the default voice parameters with a speed setting of 1. Our base text was the book “Robots and Empire” by Isaac Asimov, available via Archive.org, totaling approximately 150,000 words.
To manage memory efficiently and ensure seamless processing, we broke the text down into chunks of roughly 30 sentences, or 200-300 words, and processed them one by one. Our evaluation spanned all consumer GPU classes available on SaladCloud, with each node provisioned with 1 vCPU and 8GB of RAM. To simulate a single-task environment typical of many production systems, we did not employ threading, so each GPU processed one chunk of text at a time. This setup provides insight into the raw processing power of each GPU class without the performance enhancements of parallel processing. The workflow involved downloading the text from Azure, processing it through the TTS component of OpenVoice, and then uploading the resulting audio back to Azure. This end-to-end process allowed us to assess not only the computational performance of each GPU but also the impact of network bandwidth and data transfer efficiencies.

Benchmark findings: Cost-performance and inference speed

Words per dollar efficiency

For our first analysis, we only tracked GPU processing time without considering text download and audio upload time. The first plot revealed a clear leader in terms of cost-efficiency: the RTX 2070 GPU, which processed an impressive 6.6 million words per dollar, excluding the time for text download and audio upload. This metric is crucial for organizations that need to optimize their operating costs without compromising on output volume. The price of an RTX 2070 on SaladCloud is $0.06/hour, which together with the vCPU and RAM we used brought the total to $0.072/hour.

Average words per second

In terms of raw speed, the second plot shows that the RTX 3080 Ti topped the charts, achieving around 230.4 words per second. While the RTX 2070 lagged behind at approximately 132.7 words per second, its lower operational cost of $0.06 per hour compared to the RTX 3080 Ti’s $0.20 per hour makes it an attractive option for cost-conscious deployments.
If you are interested in processing your text faster, RTX3080+ will be the best choice. Words per dollar including data transfer times The third plot introduced the reality of data transfer times, showcasing how the words-per-dollar metric shifts when including the time to download and upload data to and from Azure. In this scenario, the RTX 2070 remained efficient, processing 4.53 million words per dollar. This efficiency hints at further potential savings if data transfers are optimized, such as by processing data in parallel with downloads/uploads, which we did not include in our process. The Potential of Multithreading While this benchmark focused on single-threaded operations, it’s worth noting that the capacity for multithreading on more powerful GPUs like the RTX 3080 Ti could narrow the cost-performance gap. By processing multiple text chunks simultaneously, these GPUs could deliver even more words per dollar, adding a layer of strategic decision-making for organizations balancing speed and cost. Conclusion: Insights for TTS Deployment and Azure Comparison Through our benchmarking of the OpenVoice TTS component on Salad Cloud GPUs, we have identified the following: To put these findings in perspective, let’s compare them with the pricing structure of Azure’s Speech Services. Azure Speech Services offer various tiers and features in their pricing model. For standard text-to-speech (TTS) services, the price is $1 per hour for real-time processing and $0.36 per hour for batch processing. This pricing can increase with custom models and endpoint hosting, reaching up to $1.20 per hour plus additional costs for model hosting. There is also a per character pricing option in Azure which is $15 per 1 million characters for real-time and batch synthesis. In contrast, our benchmark with OpenVoice on Salad Cloud GPUs has demonstrated that the RTX 2070 can process an impressive 6.6 million words per dollar, excluding network transfer times. 
Even when including the time for text download and audio upload, the RTX 2070 achieves 4.53 million words per dollar. Given that the average English word is around 5 characters long, this means that, for the cost of processing 1 million characters on Azure, you could potentially process up to 300+ million characters using OpenVoice on Salad Cloud GPUs. Therefore, when considering factors such as budget constraints and processing speed requirements, OpenVoice on Salad Cloud GPUs emerges as a compelling alternative to managed services like Azure Speech Services. It offers not just a cost advantage but also the potential for greater customization and scalability – a powerful combination for businesses and developers looking to integrate advanced voice

Whisper Large V3 Speech Recognition Benchmark: 1 Million hours of audio transcription for just $5110

Save over 99.8% on audio transcription using Whisper Large V3 and consumer GPUs

A 99.8% cost-savings for automatic speech recognition sounds unreal. But with the right choice of GPUs and models, this is very much possible and highlights the needless overspending on managed transcription services today. In this deep dive, we will benchmark the latest Whisper Large V3 model from OpenAI for inference against the extensive English CommonVoice and Spoken Wikipedia Corpus English (Part1, Part2) datasets, delving into how we accomplished an exceptional 99.8% cost reduction compared to other public cloud providers. Building upon the inference benchmark of Whisper Large V2 and with our continued effort to enhance the system architecture and implementation for batch jobs, we have achieved substantial reductions in both audio transcription costs and time while maintaining the same level of accuracy as the managed transcription services.

Behind The Scenes: Advanced System Architecture for Batch Jobs

Our batch processing framework comprises the following:

We aimed to keep the framework components fully managed and serverless to closely simulate the experience of using managed transcription services. A decoupled architecture provides the flexibility to choose the best and most cost-effective solution for each component from the industry. Within each node in the GPU resource pool in SaladCloud, two processes are utilized following best practices: one dedicated to GPU inference and another focused on I/O and CPU-bound tasks, such as downloading/uploading, preprocessing, and post-processing.

1) Inference Process

The inference process operates on a single thread. It begins by loading the Whisper Large V3 model and warming up the GPU, and then listens on a TCP port by running a Python/FastAPI app on a Uvicorn server. Upon receiving a request, it calls the transcription inference and returns the generated assets.
The chunking algorithm is configured for batch processing, where long audio files are segmented into 30-second clips, and these clips are simultaneously fed into the model. The batch inference significantly enhances performance by effectively leveraging the GPU cache and parallel processing capabilities. 2) Benchmark Worker Process The benchmark worker process primarily handles various I/O tasks, as well as pre- and post processing. Multiple threads are concurrently performing various tasks: one thread pulls jobs and downloads audio clips; another thread calls the inference, while the remaining threads manage tasks such as uploading generated assets, reporting job results and cleaning the environment, etc. Several queues are created to facilitate information exchange among these threads. Running two processes to segregate GPU-bound tasks from I/O and CPU-bound tasks, and fetching the next audio clips earlier while the current one is still being transcribed, allows us to eliminate any waiting period. After one audio clip is completed, the next is immediately ready for transcription. This approach not only reduces the overall processing time for batch jobs but also leads to even more significant cost savings. Deployment on SaladCloud We created a container group with 100 replicas (2 vCPU and 12 GB RAM with 20 different GPU types) in SaladCloud, and ran it for approximately 10 hours. In this period, we successfully transcribed over 2 million audio files, totalling nearly 8000 hours in length. The test incurred around $100 in SaladCloud costs and less than $10 on both AWS and Cloudflare. Results from the Whisper Large v3 benchmark Among the 20 GPU types, based on the current datasets, the RTX 3060 stands out as the most cost-effective GPU type for long audio files exceeding 30 seconds. Priced at $0.10 per hour on SaladCloud, it can transcribe nearly 200 hours of audio per dollar. 
For short audio files lasting less than 30 seconds, several GPU types exhibit similar performance, transcribing approximately 47 hours of audio per dollar. On the other hand, the RTX 4080 is the best-performing GPU type for long audio files exceeding 30 seconds, with an average real-time factor of 40. This means that the system can transcribe 40 seconds of audio per second. For short audio files lasting less than 30 seconds, the best average real-time factor is approximately 8, achieved by several GPU types, meaning 8 seconds of audio are transcribed in just 1 second.

Analysis of the benchmark results

Unlike results obtained in local tests with several machines in a LAN, all these numbers are achieved in a global and distributed cloud environment that provides the transcription at a large scale, including the entire process from receiving requests to transcribing and sending the responses. There are various methods to optimize the results, whether the aim is reduced costs, improved performance or both, and different approaches may yield distinct outcomes. The Whisper models come in five configurations of varying model sizes: tiny, base, small, medium, and large (v1/v2/v3). The large versions are multilingual and offer better accuracy, but they demand more powerful GPUs and run relatively slowly. On the other hand, the smaller versions, which are also available as English-only variants, are slightly less accurate, but they require less powerful GPUs and run much faster. Choosing more cost-effective GPU types in the resource pool will result in additional cost savings. If performance is the priority, selecting higher-performing GPU types is advisable, and the result is still significantly less expensive than managed transcription services. Additionally, audio length plays a crucial role in both performance and cost, and it’s essential to optimize the resource configuration based on your specific use cases and business goals.
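To relate the per-dollar figures to the headline cost, the sketch below converts minutes of audio per dollar into the cost of transcribing one million hours. It is an illustrative calculation only: the function name is ours, the 11,736 minutes-per-dollar figure is the one this article reports for the most cost-effective GPU type, and the 29,994 figure is taken from the companion Distil-Whisper Large V2 benchmark.

```python
def cost_for_hours(audio_hours: float, minutes_per_dollar: float) -> float:
    """Dollar cost of transcribing audio_hours of audio."""
    hours_per_dollar = minutes_per_dollar / 60
    return audio_hours / hours_per_dollar


ONE_MILLION_HOURS = 1_000_000

# Whisper Large V3 on its most cost-effective GPU type: 11,736 minutes per dollar.
whisper_v3 = cost_for_hours(ONE_MILLION_HOURS, 11_736)
# Distil-Whisper Large V2 on its most cost-effective GPU type: 29,994 minutes per dollar.
distil_v2 = cost_for_hours(ONE_MILLION_HOURS, 29_994)

print(f"Whisper Large V3: ${whisper_v3:,.0f}")
print(f"Distil-Whisper Large V2: ${distil_v2:,.0f}")
```

The Whisper Large V3 result lands at roughly $5,110, in line with the headline, and the Distil-Whisper figure at roughly $2,000.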
Discover our open-source code for a deeper dive: Implementation of Inference and Benchmark Worker Docker Images Data Exploration Tool Performance Comparison across Different Clouds The results indicate that AI transcription companies are massively overpaying for cloud today. With the open-source automatic speech recognition model – Whisper Large V3, and the advanced batch-processing architecture leveraging hundreds of consumer GPUs on SaladCloud, we can deliver transcription services at a massive scale and at an exceptionally low cost, while maintaining the same level of accuracy as managed transcription services. With the most cost-effective GPU type for Whisper Large V3 inference on SaladCloud, $1 dollar can transcribe 11,736 minutes of audio (nearly 200 hours), showcasing a 500-fold