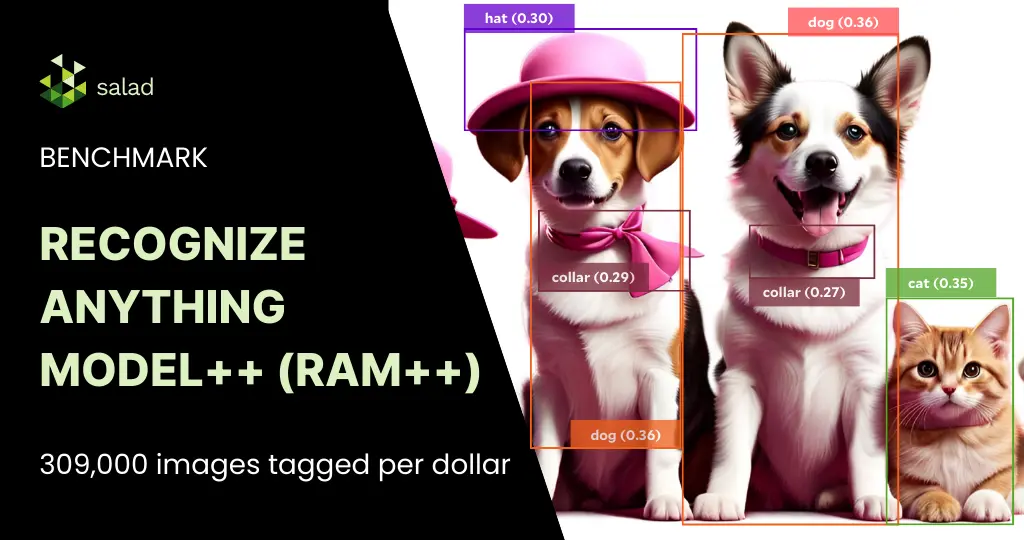

Tag 309K Images/$ with Recognize Anything Model++ (RAM++) On Consumer GPUs

What is the Recognize Anything Model++?

The Recognize Anything Model++ (RAM++) is a state-of-the-art image tagging foundation model released last year, with pre-trained model weights available on the Hugging Face Hub. It significantly outperforms other open models like CLIP and BLIP in both the scope of recognized categories and accuracy.

But how much does it cost to run RAM++ on consumer GPUs? In this benchmark, we tag 144,485 images from the COCO 2017 and AVA image datasets, evaluating inference speed and cost-performance. The evaluation ran across 167 nodes on SaladCloud representing 19 different consumer GPU classes. To do this, we created a container group targeting a capacity of 100 nodes, with the “Stable Diffusion Compatible” GPU class. All nodes were assigned 2 vCPU and 8GB RAM. Here’s what we found.

Up to 309k images tagged per dollar on RTX 2080

In keeping with a trend we often see here, the best cost-performance comes from the lower-end GPUs, the RTX 20- and 30-series cards. In general, we find that the smallest, cheapest GPU that can do the job you need is likely to have the best cost-performance in terms of inferences per dollar. RAM++ is a fairly small, lightweight model (3GB), and achieved its best cost-performance on the RTX 2080, with just over 309k inferences per dollar.

Average Inference Time Is <300ms Across All GPUs

We see relatively quick inference times across all GPU types, but we also see a pretty wide distribution of performance, even within a single GPU type. Zooming in, we can see this wide distribution is also present within a single node. Further, we see no significant correlation between inference time and number of tags generated.
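A per-GPU correlation between inference time and tag count could be computed along the following lines. This is a minimal sketch, not the benchmark's actual analysis code: it assumes the Pearson coefficient was the statistic used, and the record layout `(gpu, inference_seconds, num_tags)` and both function names are hypothetical.

```python
import math


def pearson_correlation(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and of squared deviations around each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / math.sqrt(var_x * var_y)


def correlation_by_gpu(records):
    """Group (gpu, inference_seconds, num_tags) records by GPU and correlate each group."""
    grouped = {}
    for gpu, seconds, num_tags in records:
        times, tags = grouped.setdefault(gpu, ([], []))
        times.append(seconds)
        tags.append(num_tags)
    return {
        gpu: pearson_correlation(times, tags)
        for gpu, (times, tags) in grouped.items()
    }
```

A coefficient near 0, as in the results reported for this benchmark, means that knowing how many tags an image produced tells you very little about how long the inference took.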
| GPU | Correlation between inference time and number of tags |
| --- | --- |
| RTX 2080 | 0.04255 |
| RTX 2080 SUPER | -0.02209 |
| RTX 2080 Ti | -0.03439 |
| RTX 3060 | 0.00074 |
| RTX 3060 Ti | 0.00455 |
| RTX 3070 | 0.00138 |
| RTX 3070 Laptop GPU | -0.00326 |
| RTX 3070 Ti | -0.01494 |
| RTX 3080 | -0.00041 |
| RTX 3080 Laptop GPU | -0.09197 |
| RTX 3080 Ti | 0.02748 |
| RTX 3090 | -0.00146 |
| RTX 4060 | 0.03447 |
| RTX 4060 Laptop GPU | -0.08151 |
| RTX 4060 Ti | 0.04153 |
| RTX 4070 | 0.01393 |
| RTX 4070 Laptop GPU | -0.05811 |
| RTX 4070 Ti | 0.00359 |
| RTX 4080 | 0.02090 |
| RTX 4090 | -0.03002 |

Based on this, you should expect to see fairly wide variation in inference time in production regardless of your GPU selection or image properties.

Results from the Recognize Anything Model++ (RAM++) benchmark

Consumer GPUs offer a highly cost-effective solution for batch image tagging, coming in between 60x-300x the cost efficiency of managed services like Azure AI Computer Vision. The Recognize Anything paper and code repository offer guides to train and fine-tune this model on your own data, so even if you have unusual categories, you should consider RAM++ instead of commercially available managed services.

Shawn Rushefsky is a passionate technologist and systems thinker with deep experience across a number of stacks. As Generative AI Solutions Architect at Salad, Shawn designs resilient and scalable generative AI systems to run on our distributed GPU cloud. He is also the founder of Dreamup.ai, an AI image generation tool that donates 30% of its proceeds to artists.

Segment Anything Model (SAM) Benchmark: 50K Images/$ on Consumer GPUs

What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is a foundational image segmentation model released by Meta AI Research last year, with pre-trained model weights available through the GitHub repository. It can be prompted with a point or a bounding box, and performs well on a variety of segmentation tasks. More importantly, it carries the permissive Apache 2.0 license, allowing commercial use. As companies deploy this model for use cases such as image labeling, background removal, and inpainting, the cost of running SAM in production is a primary concern.

Benchmarking the Segment Anything Model (SAM) on Salad

In this benchmark, we perform unprompted full-image segmentation on 152,848 images from the COCO 2017 and AVA image datasets. We evaluate inference speed and cost-performance across 302 nodes on SaladCloud representing 22 different consumer GPU classes. To do this, we created a container group targeting a capacity of 100 nodes, with the “Stable Diffusion Compatible” GPU class. All nodes were assigned 2 vCPU and 8GB RAM. Here’s what we found.

50K+ images segmented per dollar on RTX 3060 Ti & RTX 3070 Ti

As is nearly always the case with smaller models, the best cost-performance comes from the lower-end GPUs, mostly the RTX 30-series cards. In this case, we see a significant bump in cost-performance on the Ti cards. This makes sense, since they are priced the same as their non-Ti counterparts but have more CUDA cores. The stand-out performers here are the RTX 3060 Ti and the RTX 3070 Ti, each offering at least 50k inferences per dollar.

Inference time is fairly consistent within a particular node

Zooming into performance within a single GPU class, the RTX 3070 Ti, we see that the bulk of inference times fall within a narrow range on any particular node, with some significant outliers. We do see some variability across different nodes, with one standing out as particularly bad.
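One way to spot a node that stands out like this is to compare each node's median inference time against the median across all nodes. The sketch below is illustrative only: the function name, the input layout (a mapping from node ID to a list of inference times in seconds), and the `tolerance` threshold of 1.5 are all assumptions, not part of the benchmark.

```python
from statistics import median


def flag_slow_nodes(times_by_node, tolerance=1.5):
    """Return node IDs whose median inference time exceeds tolerance x the fleet median.

    Medians are used so that a handful of extreme inferences on an otherwise
    healthy node do not cause that node to be flagged.
    """
    node_medians = {
        node: median(times) for node, times in times_by_node.items() if times
    }
    if not node_medians:
        return []
    fleet_median = median(node_medians.values())
    return sorted(
        node for node, m in node_medians.items() if m > tolerance * fleet_median
    )


# Made-up timings: node-a has one extreme outlier but a normal median,
# while node-c is consistently slow.
sample = {
    "node-a": [1.0, 1.1, 0.9, 5.0],
    "node-b": [1.2, 1.0, 1.1],
    "node-c": [3.0, 3.2, 2.9],
}
slow = flag_slow_nodes(sample)
```

With these made-up timings only `node-c` is flagged; the single slow inference on `node-a` does not move its median enough to matter.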
We often see a small amount of variability in performance across nodes on Salad, since each one is an individual residential gaming PC, with its own CPU, RAM speed, motherboard configuration, etc. Our one outlier node (31b6, circled above) is indicative of something anomalous with that machine. We’re always working to get better at detecting these scenarios before your workloads reach a bad machine. But the best practice is to monitor the performance of your application, and terminate nodes that display anomalous behavior.

The range of inference time on one of our nodes (67acdb6b) may look concerning at first. But if we zoom in, we see those outlier times are exceedingly uncommon, with the vast majority of inferences clustered within a narrow range.

And indeed, if we filter out the outliers, we see a much tighter grouping within each individual node. But we also start to see 2 distinct groupings of machines.

It is a little concerning that some machines are 35-40% faster than others, so this has been sent to our engineering team for further investigation. The above cost-performance numbers include all these outliers and variability, so I suspect that it is possible to beat those numbers.

Results from the Segment Anything Model (SAM) benchmark

The RTX 3060 Ti and RTX 3070 Ti running the Segment Anything Model (SAM) offer a highly cost-effective solution for batch image segmentation, coming in at 50x the cost efficiency of managed services like Azure AI Computer Vision.
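To see why outliers drag down cost-performance numbers like those reported in this benchmark, it helps to write out the arithmetic. The sketch below is an assumption-laden illustration: it assumes one node processes images back to back at full utilization, and the hourly price and timings are invented placeholders, not SaladCloud prices or benchmark measurements.

```python
from statistics import mean


def images_per_dollar(seconds_per_image, price_per_hour):
    """Convert a mean inference time and an hourly node price into images per dollar."""
    if seconds_per_image <= 0 or price_per_hour <= 0:
        raise ValueError("inference time and price must both be positive")
    images_per_hour = 3600.0 / seconds_per_image
    return images_per_hour / price_per_hour


# Placeholder values for illustration only.
HYPOTHETICAL_PRICE_PER_HOUR = 0.10  # dollars per node-hour, made up
times = [0.5, 0.5, 0.5, 0.5, 3.0]   # seconds per image, last one is an outlier

with_outliers = images_per_dollar(mean(times), HYPOTHETICAL_PRICE_PER_HOUR)
without_outliers = images_per_dollar(mean(times[:-1]), HYPOTHETICAL_PRICE_PER_HOUR)
```

In this toy example a single slow inference out of five halves the images-per-dollar figure, which is why numbers that include every outlier can be read as a conservative estimate.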

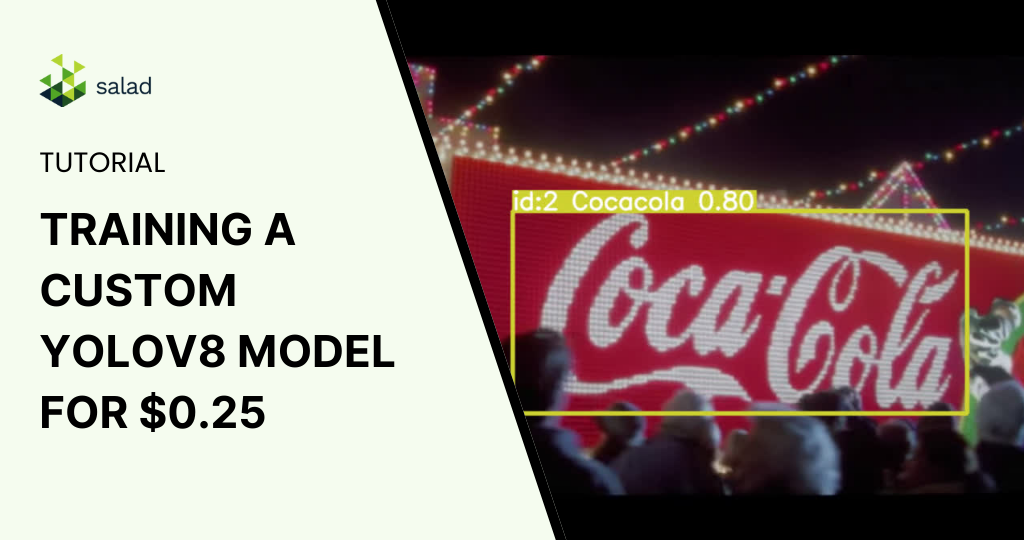

Training a custom YOLOv8 model on Salad for just $0.25

Training a Custom YOLOv8 Model for Logo Detection

In the dynamic world of AI and machine learning, the ability to customize is immensely powerful. Our previous exploration delved into deploying a pre-trained YOLOv8 model using Salad’s cloud infrastructure, revealing 73% cost savings in real-time object tracking and analysis. Advancing this journey, we’re now focusing on training a customized YOLO (You Only Look Once) model using SaladCloud’s distributed infrastructure. In this training, we focused on processing times, cost efficiency, and model accuracy, the things that matter in real-world use cases.

Training custom models is notably more resource-intensive than running pre-trained ones. It demands substantial GPU power and time, translating into higher costs. This is especially true for deep learning models used in object detection, where numerous parameters are fine-tuned over extensive datasets. The process involves repeatedly processing large amounts of data, making heavy use of GPU resources for extended periods. Here are some of our considerations for this training:

Dataset and Preparation

For our testing, we decided to create a custom model that can detect popular logos.

Training Approach

Salad’s Role in Streamlining Training

Training Results Overview: Cost-Effectiveness and Performance of YOLOv8 Models

As we delve into the world of custom model training, it’s crucial to evaluate both the financial and performance aspects of the models we train. Here, we provide a concise comparison of the YOLOv8 Nano, Small, and Medium models, highlighting their training duration and associated costs when trained on SaladCloud, a platform celebrated for its efficiency and cost-effectiveness.

First, let’s check the performance difference based on validation results:

It seems that each successive model is slightly better than the previous one.
Next, let’s check how long each model took to train and how much it cost on SaladCloud:

Each model brings unique strengths to the table, with the Nano model offering speed and cost savings, while the Medium model showcases the best performance for more intensive applications. It is remarkable that we got a well-performing custom detection model for only 25 cents.

Bringing Custom Models to Life: Tracking Coca-Cola Labels

With our custom-trained YOLO model in hand, we now want to test it in real life. We will run a logo tracking experiment on the iconic Coca-Cola Christmas commercial. This real-world application illustrates the practical utility of our model in dynamic, visually rich scenarios.

For those eager to replicate this process or deploy their own models for similar tasks, detailed instructions are available in our previous article, which walks you through the steps of running inference on Salad’s cloud platform.

Let’s now see the performance of our YOLO model in action, and witness how it keeps up with the holiday spirit, frame by frame:

As a result, we can see that our custom-trained model works not only on images but also on videos, which adds tracking capabilities.

Conclusion: An Extremely Affordable Path to Custom Model Training

By harnessing the power of SaladCloud, we managed to train three distinct YOLO models, each trained on the same dataset with the same hyperparameters. The training took under an hour and cost 1 dollar in total. The culmination of this process is a robust model fine-tuned for real-world applications, produced for just a quarter. This endeavor not only highlights the feasibility of developing custom AI solutions on a budget but also showcases the potential for such models to be rapidly deployed and iteratively improved in commercial and research settings.
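For readers who want a starting point, training the three variants on one dataset with identical hyperparameters might look roughly like the sketch below, using the Ultralytics Python package. This is not the exact script used for this article: the dataset YAML path, the epoch count, the image size, and the `train_variants` helper are all assumptions chosen for illustration.

```python
# Pre-trained checkpoint names published by Ultralytics for the three sizes
# compared in this article.
CHECKPOINTS = {
    "nano": "yolov8n.pt",
    "small": "yolov8s.pt",
    "medium": "yolov8m.pt",
}


def train_variants(data_yaml, epochs=50, imgsz=640,
                   variants=("nano", "small", "medium")):
    """Train each variant with the same settings and return mAP50-95 per variant.

    `epochs` and `imgsz` are illustrative defaults, not the values used in
    the article.
    """
    # Imported lazily so the module loads without the dependency installed;
    # running this function requires `pip install ultralytics`.
    from ultralytics import YOLO

    results = {}
    for name in variants:
        model = YOLO(CHECKPOINTS[name])   # start from pre-trained weights
        model.train(data=data_yaml, epochs=epochs, imgsz=imgsz)
        metrics = model.val()             # evaluate on the validation split
        results[name] = metrics.box.map   # mAP averaged over IoU 0.50-0.95
    return results
```

Calling `train_variants("logos.yaml")`, where `logos.yaml` is a hypothetical dataset description file in the Ultralytics format, would train all three models in sequence and return their validation scores for a side-by-side comparison.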