Training a Custom YOLOv8 Model for Logo Detection

In the dynamic world of AI and machine learning, the ability to customize is immensely powerful. Our previous exploration covered deploying a pre-trained YOLOv8 model on Salad’s cloud infrastructure, which showed 73% cost savings in real-time object tracking and analysis. Building on that work, we now train a custom YOLO (You Only Look Once) model on SaladCloud’s distributed infrastructure. Throughout, we focus on processing times, cost efficiency, and model accuracy, the factors that matter in real-world use cases.

Training custom models is notably more resource-intensive than running pre-trained ones. It demands substantial GPU power and time, translating into higher costs. This is especially true for deep learning models used in object detection, where numerous parameters are fine-tuned over extensive datasets. The process involves repeatedly processing large amounts of data, making heavy use of GPU resources for extended periods.

Here are some of our considerations for this training:

- Processing Times: We will monitor the duration taken by each model to train, providing insights into the efficiency of different YOLO configurations.

- Cost Analysis: Given that training is a resource-heavy task, understanding the financial implications is crucial. We will compare the costs of training each model on SaladCloud, offering a clear perspective on the budgetary requirements for such tasks.

- Model Accuracy: The ultimate test of any model is its performance. We will evaluate the accuracy of each trained model, understanding how the training complexity translates into detection precision.

Dataset and Preparation

For our testing, we decided to create a custom model that will be able to detect popular logos.

- Dataset: We utilized the “Flickr Logos 27” dataset, featuring 810 images across 27 classes including well-known brands like Adidas, Coca-Cola, Apple, and Google.

- Preparation: The dataset was formatted for YOLOv8 compatibility, with images and corresponding labels meticulously organized.
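To make the label format concrete: a YOLO label file holds one line per object, with a class index followed by the box center, width, and height, all normalized to the image size. The sketch below shows that conversion under the assumption that the source annotations give pixel corner coordinates (x1, y1, x2, y2). The function names are illustrative, not taken from our preparation scripts.

```python
from typing import Tuple


def to_yolo_box(
    x1: float, y1: float, x2: float, y2: float, img_w: float, img_h: float
) -> Tuple[float, float, float, float]:
    """Convert a pixel corner box to YOLO's normalized (cx, cy, w, h)."""
    cx = (x1 + x2) / 2.0 / img_w   # box center, as a fraction of image width
    cy = (y1 + y2) / 2.0 / img_h   # box center, as a fraction of image height
    w = (x2 - x1) / img_w          # box width, normalized
    h = (y2 - y1) / img_h          # box height, normalized
    return cx, cy, w, h


def yolo_label_line(
    class_id: int,
    box: Tuple[float, float, float, float],
    img_size: Tuple[float, float],
) -> str:
    """Format one object as a line of a YOLO .txt label file."""
    cx, cy, w, h = to_yolo_box(*box, *img_size)
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"
```

Each image then gets a `.txt` file with the same base name, containing one such line per logo.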

Training Approach

- Models Trained: We experimented with three YOLOv8 base models (Nano, Small, and Medium), each suited to different performance needs.

- Hyperparameter Settings: For a consistent comparison, all models were trained with the same parameters:

  - 50 epochs

  - Batch size of 8

  - Default image resolution of 640

- Seamless Resumption: YOLO’s ability to resume training from saved checkpoints ensured a continuous and efficient training process.
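The settings above map directly onto the Ultralytics Python API. The following is a minimal sketch, assuming the `ultralytics` package is installed and that a dataset config file exists; the file name `logos.yaml` in the usage note is a placeholder, not the name used in our repository.

```python
from typing import Any, Dict

# Pre-trained base checkpoints published by Ultralytics for the three sizes.
BASE_WEIGHTS = {
    "nano": "yolov8n.pt",
    "small": "yolov8s.pt",
    "medium": "yolov8m.pt",
}


def train_kwargs(data_yaml: str) -> Dict[str, Any]:
    """Shared hyperparameters so all three runs are comparable."""
    return {"data": data_yaml, "epochs": 50, "batch": 8, "imgsz": 640}


def train(size: str, data_yaml: str):
    """Fine-tune one base model; needs the ultralytics package and a GPU."""
    from ultralytics import YOLO  # imported lazily so the helpers above work without it

    model = YOLO(BASE_WEIGHTS[size])
    return model.train(**train_kwargs(data_yaml))
```

Calling `train("nano", "logos.yaml")` would start a Nano run with these settings; swapping the first argument selects the Small or Medium base model.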

SaladCloud’s Role in Streamlining Training

- Cost and Time Efficiency: Salad’s cloud infrastructure significantly reduces the cost and time required for training complex models. To keep training fast and efficient, we used an RTX 4080 (16 GB) GPU for all our experiments, priced at just $0.28 per hour on SaladCloud.

- Persistent Environment: By integrating SaladCloud with Azure File Shares, we established a persistent environment that makes it easy to switch between training datasets and to check for existing weights so that interrupted training runs can be resumed.

- Automated Processes: A specially designed bash script managed data synchronization and training processes, enhancing efficiency. If you want to dive deeper into this process, including the detailed steps and scripts, check our full guide and GitHub repository.
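The resume check can be illustrated in a few lines. This is a Python sketch of the idea only, not the bash script from our repository: it assumes the file share is mounted at some directory (`run_dir` is a hypothetical parameter) and that Ultralytics has written its usual `last.pt` checkpoint somewhere beneath it.

```python
from pathlib import Path
from typing import Optional


def find_last_checkpoint(run_dir: str) -> Optional[Path]:
    """Return the most recently modified last.pt under run_dir, or None."""
    root = Path(run_dir)
    if not root.is_dir():
        return None
    candidates = sorted(root.rglob("last.pt"), key=lambda p: p.stat().st_mtime)
    return candidates[-1] if candidates else None


def train_or_resume(run_dir: str, base_weights: str, data_yaml: str):
    """Resume from a saved checkpoint if one exists, else start a fresh run."""
    from ultralytics import YOLO  # lazy import: only needed when actually training

    checkpoint = find_last_checkpoint(run_dir)
    if checkpoint is not None:
        # resume=True restores the epoch counter and optimizer state from last.pt
        return YOLO(str(checkpoint)).train(resume=True)
    return YOLO(base_weights).train(
        data=data_yaml, epochs=50, batch=8, imgsz=640, project=run_dir
    )
```

Writing runs into the mounted share (via `project=run_dir`) is what lets a replacement node pick up where an interrupted one stopped.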

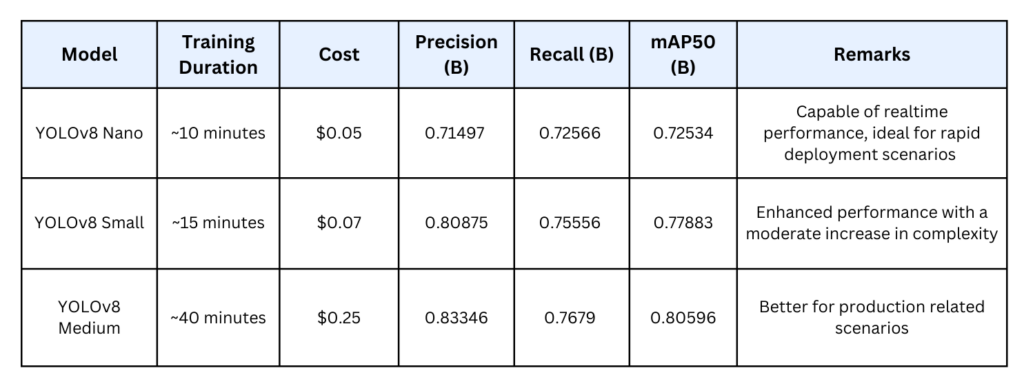

Training Results Overview: Cost-Effectiveness and Performance of YOLOv8 Models

As we delve into the world of custom model training, it’s crucial to evaluate both the financial and performance aspects of the models we train. Here, we provide a concise comparison of the YOLOv8 Nano, Small, and Medium models, highlighting their training duration and associated costs when trained on SaladCloud, a platform celebrated for its efficiency and cost-effectiveness.

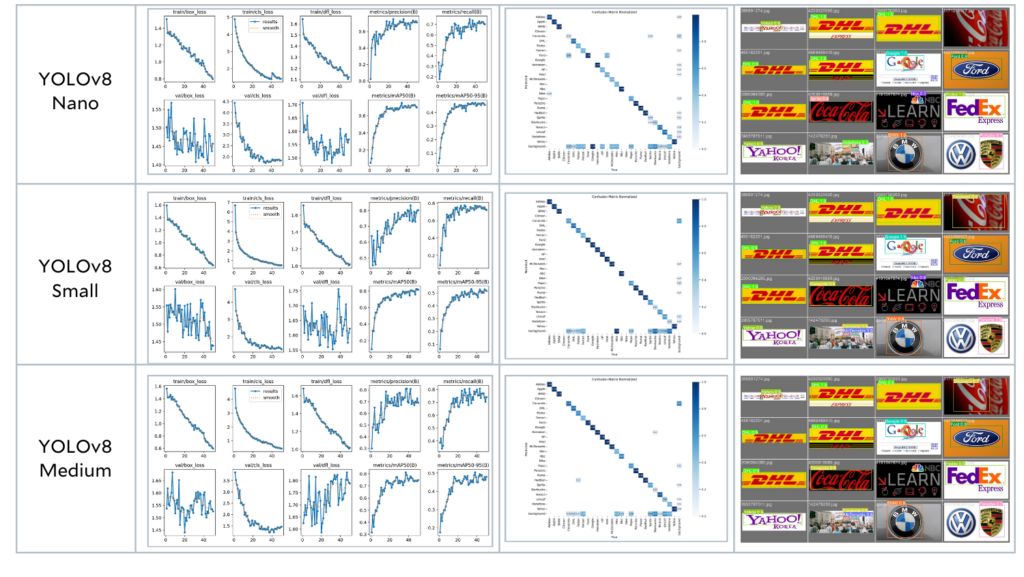

First, let’s check performance differences based on validation results:

Each successive model performs slightly better than the previous one. Next, let us check how long each model took to train and how much it cost on SaladCloud:

Each model brings unique strengths to the table: the Nano model offers speed and cost savings, while the Medium model delivers the best performance for more intensive applications. Remarkably, we obtained a working custom detection model for only 25 cents.
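The cost of a run follows from the hourly GPU rate and the training time. The sketch below shows the arithmetic using the $0.28 per hour RTX 4080 rate quoted earlier; it considers GPU time only and ignores any other charges, and the durations passed in are up to the reader, not measurements from our runs.

```python
RATE_PER_HOUR = 0.28  # RTX 4080 (16 GB) price on SaladCloud quoted in this article


def training_cost(minutes: float, rate_per_hour: float = RATE_PER_HOUR) -> float:
    """Dollar cost of a training run that lasts the given number of minutes."""
    return minutes / 60.0 * rate_per_hour


def minutes_for_budget(dollars: float, rate_per_hour: float = RATE_PER_HOUR) -> float:
    """How many minutes of GPU time a given budget buys at this rate."""
    return dollars / rate_per_hour * 60.0
```

At this rate, a 25-cent budget corresponds to a little under 54 minutes of GPU time.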

Bringing Custom Models to Life: Tracking Coca-Cola Labels

With our custom-trained YOLO model in hand, we now want to test it in real life. We will run a logo tracking experiment on the iconic Coca-Cola Christmas commercial. This real-world application illustrates the practical utility of our model in dynamic, visually rich scenarios.

For those eager to replicate this process or deploy their own models for similar tasks, detailed instructions are available in our previous article, which walks you through the steps of running inference on Salad’s cloud platform.

Let’s now see the performance of our YOLO model in action and witness how it keeps up with the holiday spirit, frame by frame:

The result shows that our custom-trained model works not only on still images but also on video, where it adds tracking capabilities.
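For readers who want a feel for what tracking looks like in code, here is a minimal sketch using the Ultralytics `track` mode. It assumes the `ultralytics` package is installed; the weights and video paths are placeholders supplied by the caller, and the helper names are ours for illustration.

```python
from typing import Iterable, List, Optional, Set


def unique_track_ids(per_frame_ids: Iterable[Optional[List[int]]]) -> Set[int]:
    """Collect every distinct track ID seen across all frames."""
    seen: Set[int] = set()
    for ids in per_frame_ids:
        if ids:  # skip frames with no tracked objects (None or empty list)
            seen.update(ids)
    return seen


def track_video(weights_path: str, video_path: str) -> List[Optional[List[int]]]:
    """Run the tracker over a video and return the track IDs found per frame."""
    from ultralytics import YOLO  # lazy import: only needed for real inference

    model = YOLO(weights_path)
    per_frame: List[Optional[List[int]]] = []
    # stream=True yields one Results object per frame instead of buffering them all
    for result in model.track(source=video_path, stream=True):
        ids = result.boxes.id  # None when nothing is being tracked in this frame
        per_frame.append(None if ids is None else ids.int().tolist())
    return per_frame
```

Passing the output of `track_video` to `unique_track_ids` gives a rough count of how many distinct logo instances the tracker followed through the clip.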

Conclusion: An Extremely Affordable Path to Custom Model Training

By harnessing the power of SaladCloud, we managed to train three distinct YOLO models, each tailored to the same dataset and unified by consistent hyperparameters. The training took under an hour at the economical sum of 1 dollar. The culmination of this process is a robust model fine-tuned for real-world applications, remarkably realized at the modest expense of a quarter. This endeavor not only highlights the feasibility of developing custom AI solutions on a budget but also showcases the potential for such models to be rapidly deployed and iteratively improved in commercial and research settings.