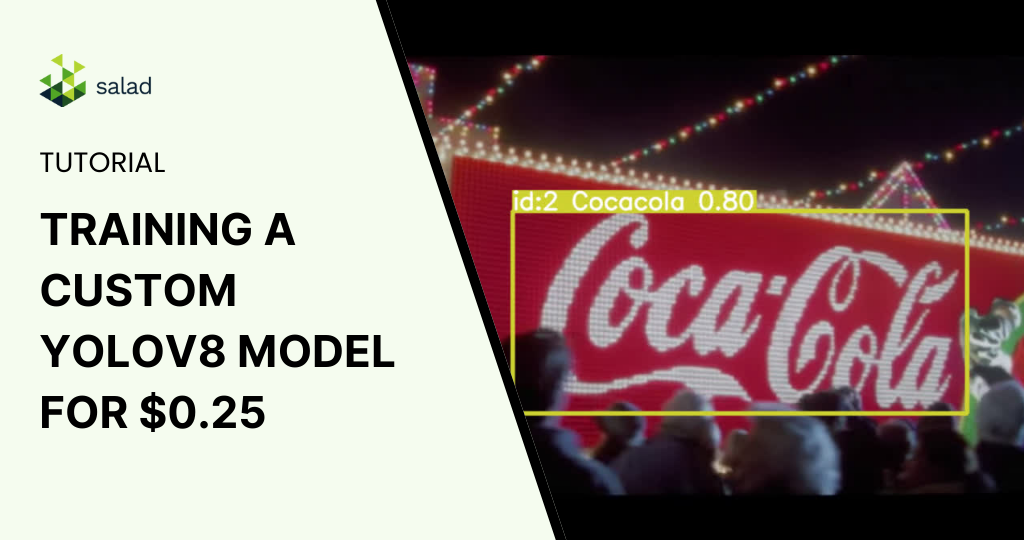

Training a custom YOLOv8 model on Salad for just $0.25

Training a Custom YOLOv8 Model for Logo Detection

In the dynamic world of AI and machine learning, the ability to customize is immensely powerful. Our previous exploration covered deploying a pre-trained YOLOv8 model on Salad’s cloud infrastructure, which revealed 73% cost savings in real-time object tracking and analysis. Building on that work, we now focus on training a custom YOLO (You Only Look Once) model on SaladCloud’s distributed infrastructure. In this training, we focused on processing time, cost efficiency, and model accuracy, the factors that matter most in real-world use cases.

Training custom models is notably more resource-intensive than running pre-trained ones. It demands substantial GPU power and time, which translates into higher costs. This is especially true for deep learning models used in object detection, where a large number of parameters are fine-tuned over extensive datasets. The process involves repeatedly passing large amounts of data through the model, making heavy use of GPU resources for extended periods. Here are some of our considerations for this training:

Dataset and Preparation

For our testing, we decided to create a custom model that can detect popular logos.

Training Approach

Salad’s Role in Streamlining Training

Training Results Overview: Cost-Effectiveness and Performance of YOLOv8 Models

As we delve into custom model training, it’s crucial to evaluate both the financial and the performance aspects of the models we train. Here, we provide a concise comparison of the YOLOv8 Nano, Small, and Medium models, highlighting their training duration and associated costs when trained on SaladCloud, a platform celebrated for its efficiency and cost-effectiveness.

First, let’s check the performance difference based on the validation results:

It seems that each successive model is slightly better than the previous one.
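The article does not describe how the logo dataset was annotated. Ultralytics YOLOv8 expects detection labels as one `.txt` file per image, with one line per object in the form `class x_center y_center width height`. The class is a zero-based index, and the coordinates are normalized by the image width and height. The sketch below assumes the logos were first labeled as pixel-space corner boxes, which is a hypothetical starting point, and converts them to that format. `PixelBox` and `to_yolo_line` are illustrative names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class PixelBox:
    """Hypothetical annotation record: one object in absolute pixel coordinates."""
    class_id: int   # zero-based index into the dataset's list of class names
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_yolo_line(box: PixelBox, img_w: int, img_h: int) -> str:
    """Convert one pixel box into a YOLO label line with normalized center/size."""
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image dimensions must be positive")
    # Clamp to the image so boxes that spill over the border stay valid.
    x0 = max(0.0, min(box.x_min, img_w))
    x1 = max(0.0, min(box.x_max, img_w))
    y0 = max(0.0, min(box.y_min, img_h))
    y1 = max(0.0, min(box.y_max, img_h))
    if x1 <= x0 or y1 <= y0:
        raise ValueError("box has no area inside the image")
    x_center = (x0 + x1) / 2 / img_w
    y_center = (y0 + y1) / 2 / img_h
    width = (x1 - x0) / img_w
    height = (y1 - y0) / img_h
    return f"{box.class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def to_yolo_label_file(boxes: list[PixelBox], img_w: int, img_h: int) -> str:
    """Contents of the .txt label file for one image (one line per object)."""
    return "\n".join(to_yolo_line(b, img_w, img_h) for b in boxes) + "\n"
```

For example, a box from (100, 120) to (300, 360) in a 640x480 image becomes `0 0.312500 0.500000 0.312500 0.500000`. Keeping the conversion in one small function makes it easy to check before training the Nano, Small, and Medium models on the same labels.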
Now let’s check how long it took to train each model and how much it cost on SaladCloud:

Each model brings unique strengths to the table. The Nano model offers speed and cost savings, while the Medium model delivers the best performance for more demanding applications. Remarkably, we obtained a well-performing custom detection model for only 25 cents.

Bringing Custom Models to Life: Tracking Coca-Cola Labels

With our custom-trained YOLO model in hand, we now want to test it in a real-world setting. We will run a logo tracking experiment on the iconic Coca-Cola Christmas commercial. This application illustrates the practical utility of our model in dynamic, visually rich scenarios.

For those eager to replicate this process or deploy their own models for similar tasks, detailed instructions are available in our previous article, which walks you through running inference on Salad’s cloud platform.

Let’s now see our YOLO model in action and watch how it keeps up with the holiday spirit, frame by frame:

The result shows that our custom-trained model works not only on still images but also on video, where it adds tracking capabilities.

Conclusion: An Extremely Affordable Path to Custom Model Training

By harnessing SaladCloud, we trained three distinct YOLO models on the same dataset with consistent hyperparameters. The training took under an hour and cost just $1. The result is a robust model fine-tuned for real-world applications, produced for the modest sum of a quarter. This endeavor highlights the feasibility of developing custom AI solutions on a budget. It also shows how such models can be rapidly deployed and iteratively improved in commercial and research settings.
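The article does not say how tracking was performed for the Coca-Cola video. Ultralytics ships a built-in `track` mode backed by the BoT-SORT and ByteTrack trackers. The sketch below is neither of those. It is a deliberately simpler greedy IoU tracker that shows the core idea of linking per-frame detections into persistent IDs. All names are hypothetical, and the thresholds are arbitrary defaults.

```python
from dataclasses import dataclass, field

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class IoUTracker:
    """Greedy IoU tracker: links each frame's boxes to the previous frame's tracks."""
    iou_threshold: float = 0.3  # minimum overlap to treat two boxes as the same object
    max_missed: int = 5         # frames a track may go unmatched before it is dropped
    next_id: int = 1
    tracks: dict[int, tuple[Box, int]] = field(default_factory=dict)  # id -> (last box, missed frames)

    def update(self, detections: list[Box]) -> dict[int, Box]:
        """Assign a track ID to every detection in the current frame."""
        # Score all (track, detection) pairs and match greedily, highest IoU first.
        pairs = sorted(
            ((iou(box, det), tid, di)
             for tid, (box, _) in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        used_tracks: set[int] = set()
        used_dets: set[int] = set()
        assigned: dict[int, Box] = {}
        for score, tid, di in pairs:
            if score < self.iou_threshold:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or di in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(di)
            self.tracks[tid] = (detections[di], 0)
            assigned[tid] = detections[di]
        # Age tracks that found no match; forget them after max_missed frames.
        for tid in list(self.tracks):
            if tid not in used_tracks:
                box, missed = self.tracks[tid]
                if missed + 1 > self.max_missed:
                    del self.tracks[tid]
                else:
                    self.tracks[tid] = (box, missed + 1)
        # Every unmatched detection starts a new track.
        for di, det in enumerate(detections):
            if di not in used_dets:
                self.tracks[self.next_id] = (det, 0)
                assigned[self.next_id] = det
                self.next_id += 1
        return assigned
```

Calling `update` once per frame with that frame's logo boxes returns a mapping from track ID to box, so the same logo keeps its ID while it stays on screen. Production trackers such as ByteTrack add motion prediction with a Kalman filter to handle occlusion and fast movement. This sketch does not attempt that.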

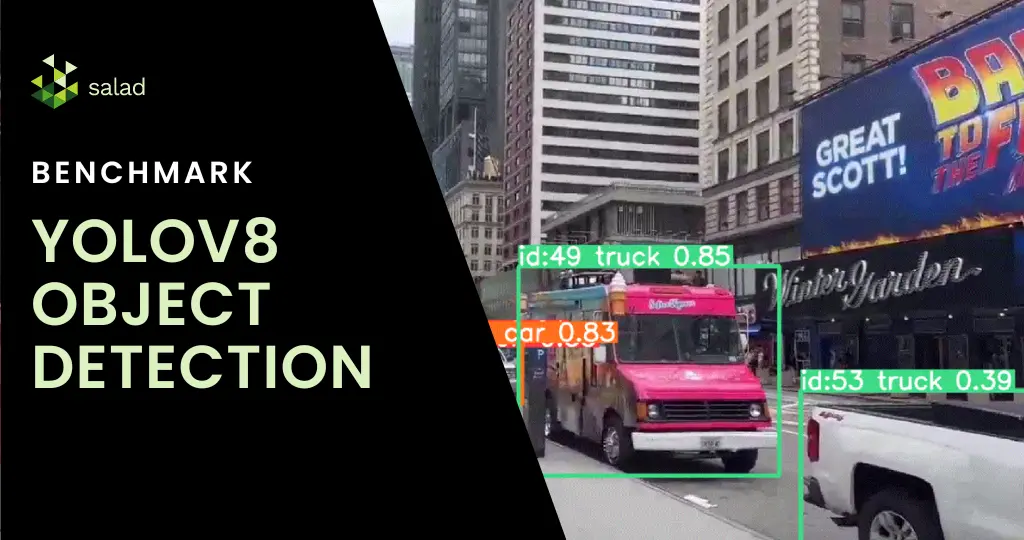

YOLOv8 Benchmark: Object Detection on Salad’s GPUs (73% Cheaper Than Azure)

What is YOLOv8?

In the fast-evolving world of AI, object detection has made remarkable strides, epitomized by YOLOv8. YOLO (You Only Look Once) is a family of object detection models first launched in 2015. YOLOv8, developed by Ultralytics, is the latest version and supports both object detection and image segmentation. The algorithm is not just about recognizing objects; it’s about doing so in real time with unparalleled precision and speed. From monitoring fast-paced sports events to overseeing production lines, YOLOv8 is transforming how we see and interact with moving images. With features like spatial attention, feature fusion and context aggregation modules, YOLOv8 is used extensively in agriculture, healthcare and manufacturing, among other fields. In this YOLOv8 benchmark, we compare the cost of running YOLO on Salad and Azure.

Running object detection on SaladCloud’s GPUs: A fantastic combination

YOLOv8 can run on any GPU that supports CUDA and has enough memory. But with the GPU shortage and high prices, you need GPUs rented at affordable rates to make the economics work. SaladCloud’s network of 10,000+ Nvidia consumer GPUs has the lowest prices in the market and is a perfect fit for YOLOv8. Deploying YOLOv8 on SaladCloud democratizes high-end object detection by offering it on a scalable, cost-effective cloud platform for mainstream use. With GPUs starting at $0.02/hour, Salad offers businesses and developers an affordable, scalable solution for sophisticated object detection at scale.

A deep dive into live stream video analysis with YOLOv8

This benchmark uses YOLOv8 to analyze not only pre-recorded videos but also live video streams. The process begins by capturing a live stream link, followed by real-time object detection and tracking. Using GPUs on SaladCloud, we can process each video frame in less than 10 milliseconds, which is 10 times faster than using a CPU.
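The per-frame figures above determine whether a single worker can keep up with a live feed. The sketch below works through that arithmetic. It assumes a 30 fps stream, which is a common frame rate but is not stated in the article. The 10 ms GPU figure comes from the text, and the roughly 100 ms CPU figure is inferred from the stated 10x speedup. The function names are illustrative.

```python
def frame_budget_ms(stream_fps: float) -> float:
    """Maximum time we can spend on one frame without falling behind the stream."""
    if stream_fps <= 0:
        raise ValueError("stream_fps must be positive")
    return 1000.0 / stream_fps


def max_sustainable_fps(per_frame_ms: float) -> float:
    """Highest frame rate one sequential worker can process at this latency."""
    if per_frame_ms <= 0:
        raise ValueError("per_frame_ms must be positive")
    return 1000.0 / per_frame_ms


def frames_skipped_per_second(per_frame_ms: float, stream_fps: float) -> float:
    """Frames per second that must be dropped to stay real time (0 if we keep up)."""
    return max(0.0, stream_fps - max_sustainable_fps(per_frame_ms))


if __name__ == "__main__":
    STREAM_FPS = 30  # assumption: typical video frame rate
    for device, ms in (("GPU", 10.0), ("CPU", 100.0)):  # CPU value inferred from the 10x claim
        keeps_up = ms <= frame_budget_ms(STREAM_FPS)
        print(device, keeps_up, frames_skipped_per_second(ms, STREAM_FPS))
```

With these assumptions, the GPU fits comfortably inside the roughly 33 ms budget and drops no frames. The CPU would have to skip 20 of every 30 frames to stay live.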
Each frame’s data is meticulously compiled, yielding a detailed dataset with timestamps, classifications, and other critical metadata. As a result, we get a clear summary of all the objects present in our video:

How to run YOLOv8 on SaladCloud’s GPUs

We built a FastAPI application with a dual role: it processes video streams in real time and offers interactive documentation via Swagger UI. You can process live streams from YouTube, RTSP, RTMP and TCP sources, as well as regular videos. All results are saved to an Azure storage account you specify. All you need to do is send an API call that includes the video link, whether or not the video is a live stream, your storage account information, and how often you want the results saved. We also added multithreading, so multiple video streams can be processed simultaneously.

Deploying on SaladCloud

Our step-by-step guide walks you through the full deployment journey on SaladCloud. We configured container groups, set up efficient networking, and ensured secure access. Deploying the FastAPI application on Salad proved to be not just technically feasible but also cost-effective, highlighting the platform’s efficiency.

Price comparison: Processing live streams and videos on Azure and Salad

When deploying object detection models, especially for tasks like processing live streams and videos, understanding the cost implications of different cloud services is crucial. Let’s compare prices for our live stream object detection project:

Context and Considerations

Live Stream Processing: Live streams are unique in that they can only be processed as the data is received. Even with the best GPUs, processing is limited to the current feed rate.

Azure’s Real-Time Endpoint: For a fair comparison, we assume the use of an Azure ML Studio real-time endpoint. This setup aligns with a synchronous process that doesn’t require a full dedicated VM.
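The feed-rate limit is why the hourly price matters so much for live workloads. A recorded file can be read as fast as the hardware allows, but a live stream keeps the worker busy for at least the stream’s full length. The sketch below assumes one worker that processes every frame sequentially and an instance released as soon as the job ends. It ignores decoding, network and upload overhead. `Job`, `wall_clock_s` and `billed_hours` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Job:
    duration_s: float    # length of the video or stream segment
    fps: float           # frames per second delivered by the source
    per_frame_ms: float  # inference time per frame on the chosen hardware
    live: bool           # live frames arrive in real time; recorded files do not wait


def wall_clock_s(job: Job) -> float:
    """Estimated processing time for one worker handling every frame in order."""
    compute_s = job.duration_s * job.fps * job.per_frame_ms / 1000.0
    if job.live:
        # Frames cannot be processed before they arrive, so a live job lasts at
        # least as long as the stream; a slower worker falls behind and takes longer.
        return max(job.duration_s, compute_s)
    return compute_s


def billed_hours(job: Job) -> float:
    """Instance hours, assuming the instance is released as soon as the job ends."""
    return wall_clock_s(job) / 3600.0
```

With an assumed 30 fps source and the article’s 10 ms per frame, a one-hour recorded video needs about 18 minutes of GPU time. The same hour delivered as a live stream keeps the GPU occupied, and billed, for the full hour.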
Azure Pricing Overview

We will now compare compute prices on Azure and Salad. Note that on Azure you cannot choose RAM, vCPUs and GPU memory independently; you can only pick preconfigured compute sizes. With Salad, you can pick exactly what you need.

Lowest GPU Compute in Azure: For our price comparison, we’ll start by looking at Azure’s lowest GPU compute price, keeping in mind that the closest model to our solution is YOLOv5.

1. Processing a Live Stream

| Service | Configuration | Cost per hour | Remarks |
|---|---|---|---|
| Azure | 4 cores, 16 GB RAM (no GPU) | $0.19 | General-purpose compute, no dedicated GPU |
| Salad | 4 vCPUs, 16 GB RAM | $0.032 | Equivalent to Azure’s general compute |

Percentage Cost Difference for General Compute: Salad is approximately 83% cheaper than Azure for general compute configurations.

2. Processing with GPU Support

This is the GPU that Azure recommends for YOLOv5.

| Service | Configuration | Cost per hour | Remarks |
|---|---|---|---|
| Azure | NC16as_T4_v3 (16 vCPUs, 110 GB RAM, 1 GPU) | $1.20 | Recommended for YOLOv5 |
| Salad | Equivalent GPU configuration | $0.326 | Salad’s equivalent GPU offering |

Percentage Cost Difference for GPU Compute: Salad is approximately 73% cheaper than Azure for similar GPU configurations.

YOLOv8 deployment on GPUs in just a few clicks

You can deploy YOLOv8 in production on SaladCloud’s GPUs in just a few clicks. Simply download the code from our GitHub repository or pull our ready-to-deploy Docker container from the Salad Portal. It’s as straightforward as it sounds: download, deploy, and you’re on your way to exploring the capabilities of YOLOv8 in real-world scenarios. Check out the SaladCloud documentation for quick guides on how to start using our batch or synchronous solutions.

Check out our step-by-step guide

For a comprehensive walkthrough of deploying YOLOv8 on SaladCloud, check out our step-by-step guide here. In this guide, we will show:

This process is fully customizable to your needs. Follow along, make modifications, and experiment to your heart’s content.
Our guide is designed to be flexible, allowing you to adjust and enhance the deployment of YOLOv8 according to your project requirements or curiosity. We are excited about the potential enhancements and extensions of this project. Future considerations include broadening cloud integrations, delving into custom model training, and exploring batch processing capabilities.
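The savings percentages in the two pricing tables follow from one formula: (reference price minus candidate price) divided by reference price. The sketch below reproduces them from the listed hourly prices. It also projects a monthly bill for an always-on live stream, assuming an average of 730 hours per month (8,760 / 12). That monthly figure is an illustration, not a number from the article. The function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # assumption: average hours per month for an always-on stream

# Hourly prices from the comparison tables: (Azure, Salad)
PRICES = {
    "General compute (4 vCPU, 16 GB RAM)": (0.19, 0.032),
    "GPU (Azure NC16as_T4_v3 vs Salad equivalent)": (1.20, 0.326),
}


def percent_cheaper(reference_per_hour: float, candidate_per_hour: float) -> float:
    """How much cheaper the candidate is, as a percentage of the reference price."""
    if reference_per_hour <= 0:
        raise ValueError("reference price must be positive")
    return (reference_per_hour - candidate_per_hour) / reference_per_hour * 100


def monthly_cost(per_hour: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of keeping one instance running for the given number of hours."""
    return per_hour * hours


if __name__ == "__main__":
    for name, (azure, salad) in PRICES.items():
        print(f"{name}: {percent_cheaper(azure, salad):.1f}% cheaper, "
              f"${monthly_cost(azure):,.2f} vs ${monthly_cost(salad):,.2f} per month")
```

The unrounded results are about 83.2% and 72.8%, which round to the 83% and 73% figures quoted for general and GPU compute.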