Comparing Price-Performance of 22 GPUs for AI Image Tagging (GTX vs RTX)

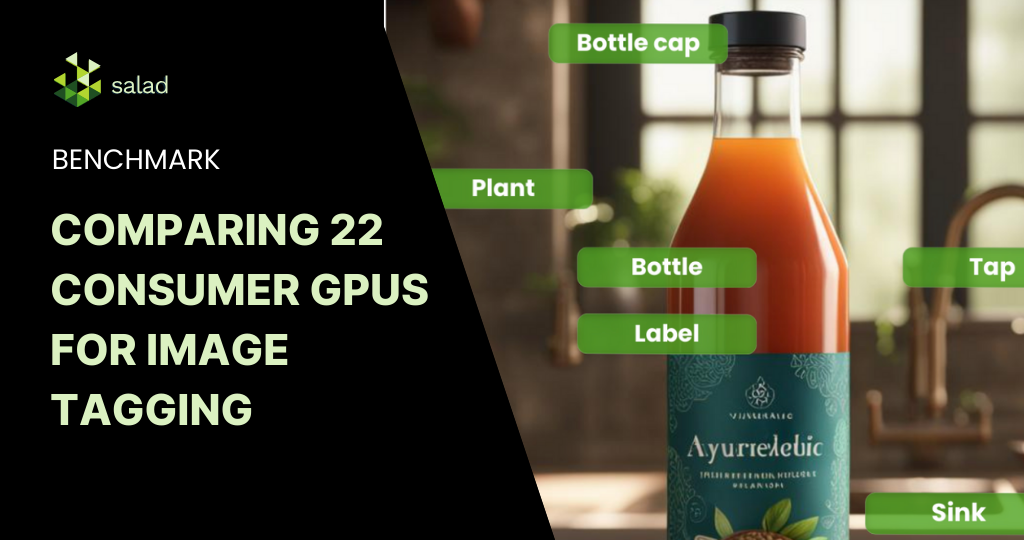

Older Consumer GPUs: A Perfect Fit for AI Image Tagging

In the current AI boom, there’s palpable excitement around sophisticated image generation models like Stable Diffusion XL (SDXL) and the cutting-edge GPUs that power them. These models often require more powerful GPUs with larger amounts of VRAM. While the industry is abuzz with these advancements, we shouldn’t overlook the potential of older GPUs, especially for tasks like image tagging and search embedding generation. These processes, employed by image generation platforms like Civitai and Midjourney, play a crucial role in enhancing search capabilities and overall user experience. We leveraged Salad’s distributed GPU cloud to evaluate the cost-performance of this task across a wide range of hardware configurations.

What is AI Image Tagging?

AI image tagging is a technology that automatically identifies and labels the content of images, such as objects, people, places, and colors. This helps users organize, search, and discover their images more easily and efficiently.

Benchmarking 22 Consumer-Grade GPUs for AI Image Tagging

In designing the benchmark, our primary objective was a comprehensive and unbiased evaluation. We selected a range of GPUs on SaladCloud, from the GTX 1050 up to the RTX 4090, to capture a broad spectrum of performance capabilities. Each node was equipped with 16 vCPUs and 7 GB of RAM, giving every test the same standardized environment. For the datasets, we chose two prominent collections from Kaggle: the AVA Aesthetic Visual Assessment and the COCO 2017 Dataset. These datasets offer a mix of aesthetic visuals and diverse object categories, providing a robust testbed for our image tagging and search embedding generation tasks.
We used ConvNextV2 Tagger V2 to generate tags and ratings for images, and CLIP to generate embedding vectors. The tagger model ran on ONNX Runtime, while CLIP used Transformers with PyTorch. ONNX Runtime’s GPU support is not a great fit for Salad because NVIDIA driver versions are inconsistent across the network, so we ran the tagger on the CPU runtime and allocated 16 vCPUs to each node. PyTorch with Transformers works well across a large range of GPUs and driver versions with no additional configuration, so CLIP ran on the GPU.

Benchmark Results: GTX 1650 is the Surprising Winner

As expected, our nodes with higher-end GPUs took less time per image, with the flagship RTX 4090 offering the best performance. What is interesting, though, is that the median time per image is very similar for the GTX 1650 and the RTX 4090: 1 second. The best-case and worst-case performance of the 4090 is notably better.

Weighting our findings by cost confirms our intuition that the 1650 is a much better value at $0.02/hr than the 4090 at $0.30/hr. While older GPUs like the GTX 1650 have worse absolute performance than the RTX 4090, the large difference in price makes the older GPUs the best value, as long as your use case can withstand the additional latency. In fact, all GTX series GPUs outperform all RTX GPUs in terms of images tagged per dollar.

GTX Series: The Cost-Effective Option for AI Image Tagging with 3x More Images Tagged per Dollar

In the ever-advancing realm of AI and GPU technology, the allure of the latest hardware often overshadows the nuanced decisions that drive optimal performance. Our analysis not only emphasizes the balance between raw performance and cost-effectiveness but also resonates with broader cloud best practices.
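The images-per-dollar comparison behind these results comes down to simple arithmetic, sketched below in Python. The hourly prices are the ones quoted in this article; the function names and the per-image timings are hypothetical, chosen only so the example reproduces a 3x ratio, and are not the measured benchmark values.

```python
# Minimal sketch of the price-performance arithmetic.
# Prices come from the article; timings are hypothetical placeholders.

PRICE_GTX_1650 = 0.02  # $/hr, quoted in the article
PRICE_RTX_4090 = 0.30  # $/hr, quoted in the article


def images_per_dollar(seconds_per_image: float, price_per_hour: float) -> float:
    """Images one node can process for one dollar of GPU time."""
    images_per_hour = 3600.0 / seconds_per_image
    return images_per_hour / price_per_hour


def break_even_speedup(cheap_price: float, expensive_price: float) -> float:
    """Throughput multiple the pricier GPU needs to match the cheaper one per dollar."""
    return expensive_price / cheap_price


def implied_speedup(price_ratio: float, value_ratio: float) -> float:
    """Throughput advantage of the pricier GPU implied by a given
    price ratio and images-per-dollar ratio in favor of the cheaper GPU."""
    return price_ratio / value_ratio


if __name__ == "__main__":
    # Hypothetical average seconds per image, for illustration only.
    examples = [
        ("GTX 1650", 1.0, PRICE_GTX_1650),
        ("RTX 4090", 0.2, PRICE_RTX_4090),
    ]
    for name, seconds, price in examples:
        value = images_per_dollar(seconds, price)
        print(f"{name}: {value:,.0f} images per dollar")

    ratio = break_even_speedup(PRICE_GTX_1650, PRICE_RTX_4090)
    print(f"Break-even speedup for the RTX 4090: {ratio:.0f}x")
    print(f"Speedup implied by a 3x value gap: {implied_speedup(ratio, 3.0):.0f}x")
```

At these prices the RTX 4090 costs 15 times as much per hour, so it would need roughly 15 times the throughput to break even on images per dollar. If the 3x figure is computed from the same hourly prices, it would imply the 4090 processed about 5 times as many images per hour overall, which is an inference from the quoted numbers rather than a reported result.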
Just as it’s important not to over-provision compute resources in cloud environments, it’s equally important to avoid overcommitting to high-end GPUs when more cost-effective options can meet the requirements. The GTX 1650’s value proposition, especially for tasks with flexible latency needs, is a testament to this principle: it delivers 3x as many images tagged per dollar as the RTX 4090. As the landscape of AI applications expands, making judicious hardware choices based on comprehensive real-world benchmarks becomes paramount. The goal isn’t always to harness the most powerful tools, but rather the most appropriate ones for the task and budget at hand.

Run Your Image Tagging on Salad’s Distributed Cloud

If you are running AI image tagging or any AI inference at scale, Salad’s distributed cloud has 10,000+ GPUs at the lowest price in the market. Sign up for a demo with our team to discuss your specific use case.

Shawn Rushefsky

Shawn Rushefsky is a passionate technologist and systems thinker with deep experience across a number of stacks. As Generative AI Solutions Architect at Salad, Shawn designs resilient and scalable generative AI systems to run on our distributed GPU cloud. He is also the founder of Dreamup.ai, an AI image generation tool that donates 30% of its proceeds to artists.