Engineers from Numenta used Salad Container Engine (SCE) to benchmark a first-of-its-kind intelligent computing platform that optimizes BERT transformer networks. Learn how Numenta attained 10x more inferences per dollar on SCE.

Challenge

Optimizing AI Systems

Deploying practical artificial intelligence applications at scale requires distributing large data sets across complex networks of specialized hardware. Although deep neural networks have enabled significant advances, their dependence on highly available compute and their rapidly growing model sizes make it costly and inefficient to run transformer models in the public cloud.

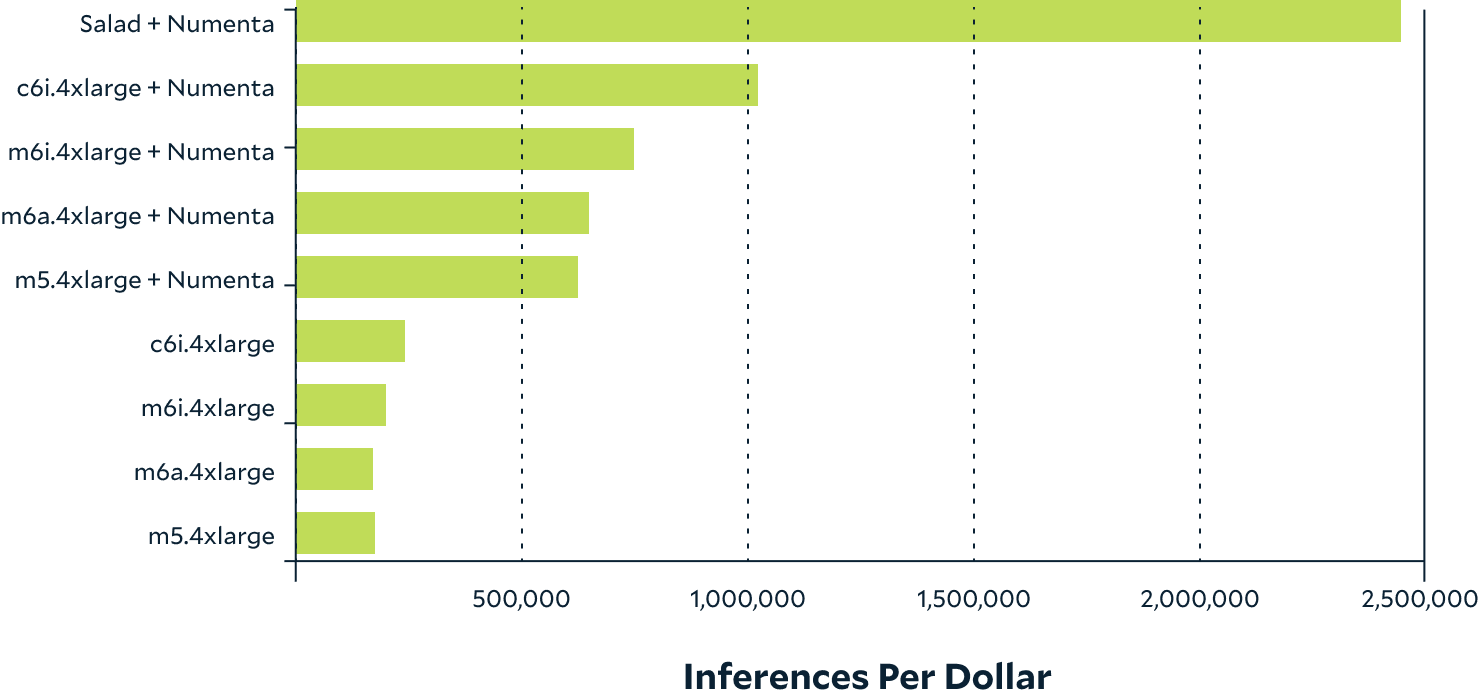

Price-Performance Comparison

Solution

Leveraging insights from 20 years of neuroscience research, Numenta has developed breakthrough advances in AI that deliver dramatic performance improvements across a broad range of use cases.

Grounded in the sensorimotor framework of intelligence elaborated by co-founder Jeff Hawkins in A Thousand Brains, Numenta’s innovative technology turns the principles of human learning into new architectures, data structures, and algorithms that deliver disruptive performance improvements.

Case Study

10x Price Performance

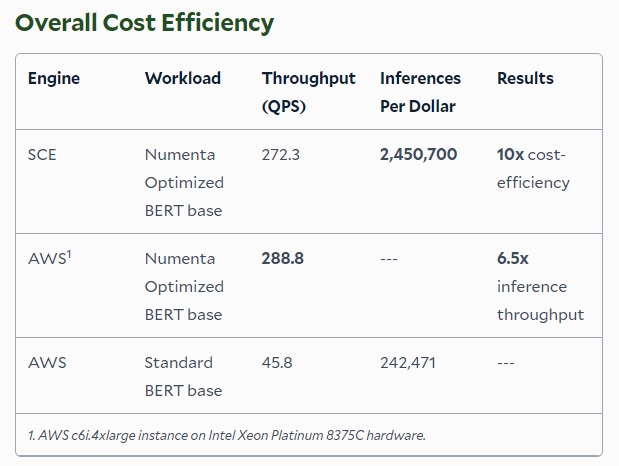

In a side-by-side comparison, Numenta’s optimized BERT technologies improved the throughput of a standard transformer network by up to 6.5x.

When deployed on SCE, Numenta attained 10x more inferences per dollar than was possible with on-demand AWS instances, and beat the cost efficiency of the closest spot-instance offering by 2.39x.
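Inferences per dollar, the metric behind these comparisons, can be derived from an instance's sustained throughput and its hourly price. The following is a minimal Python sketch, assuming hourly billing and constant throughput. The function names and the throughput and price values are hypothetical placeholders chosen for illustration; they are not Numenta's or Salad's measurements or benchmarking methodology.

```python
def inferences_per_dollar(throughput_per_sec: float, price_per_hour: float) -> float:
    """Inferences completed per dollar spent.

    Assumes a steady-state throughput (inferences/second) and a flat
    hourly instance price (USD/hour). Both inputs are hypothetical.
    """
    if price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    # Inferences completed in one billed hour, divided by the cost of that hour.
    return throughput_per_sec * 3600.0 / price_per_hour


def relative_efficiency(candidate: float, baseline: float) -> float:
    """How many times more inferences per dollar the candidate delivers."""
    return candidate / baseline


# Hypothetical placeholder figures, NOT results from this case study.
baseline = inferences_per_dollar(throughput_per_sec=100.0, price_per_hour=4.0)
candidate = inferences_per_dollar(throughput_per_sec=250.0, price_per_hour=1.0)
print(f"{relative_efficiency(candidate, baseline):.1f}x")  # prints "10.0x"
```

Because the metric is throughput divided by price, a higher-throughput model and a lower hourly rate compound multiplicatively: in this made-up example, 2.5x the throughput at a quarter of the price yields 10x the inferences per dollar.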

About Numenta

Numenta has developed new artificial intelligence technologies that deliver breakthrough performance in AI/ML applications such as natural language processing and computer vision. Backed by two decades of neuroscience research, Numenta’s novel architectures, data structures, and algorithms deliver disruptive performance improvements. Numenta is currently engaged in a private beta with several Global 100 companies and startups to apply its platform technology across the full spectrum of AI, from model development to deployment, and ultimately to enable novel hardware architectures and entirely new categories of applications.